I’m having a problem with SignalR in my .NET app.

There are two steps for connecting the frontend to the socket:

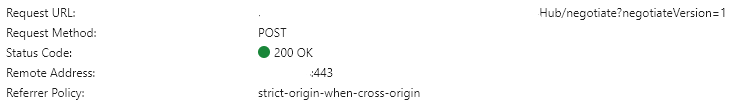

1. Send an HTTP POST request to negotiate with the hub (I’m not skipping the negotiation), which returns the token and the available transport types;

2. Start the WebSocket connection.

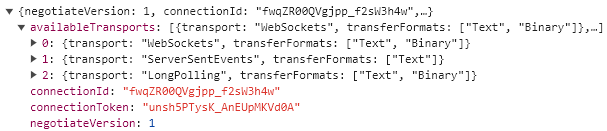

My problem: the application is hosted in the cloud (in containers) behind a load balancer. Whenever the backend application (which hosts SignalR) runs more than one pod (instance), the connection fails. The first request succeeds and returns the token and the transport types. The second request returns a 404 with the response "No Connection with that ID".

My guess: because of the load balancing, the first step of the connection, the negotiation, is sent to server A. The second step, which tries to open the socket connection, is sent to server B, which doesn’t have the token or the connection ID and so cannot validate it.
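That guess can be modeled with a minimal sketch. The `Pod` and `RoundRobinBalancer` classes are hypothetical stand-ins, not SignalR APIs. The sketch assumes each pod keeps connection IDs only in its own memory and that the balancer alternates pods with no affinity; 101 stands for a successful WebSocket upgrade.

```typescript
// Hypothetical model: each pod keeps its connection IDs in local memory only.
class Pod {
  readonly name: string;
  private readonly connections = new Set<string>();

  constructor(name: string) {
    this.name = name;
  }

  // Step 1: negotiate creates a connection ID known only to this pod.
  negotiate(): string {
    const id = `${this.name}-${this.connections.size}`;
    this.connections.add(id);
    return id;
  }

  // Step 2: the transport request must present an ID this pod knows.
  connect(id: string): number {
    return this.connections.has(id) ? 101 : 404;
  }
}

// Hypothetical balancer without affinity: consecutive requests alternate pods.
class RoundRobinBalancer {
  private readonly pods: Pod[];
  private index = 0;

  constructor(pods: Pod[]) {
    this.pods = pods;
  }

  next(): Pod {
    const pod = this.pods[this.index % this.pods.length];
    if (pod === undefined) throw new Error("no pods configured");
    this.index++;
    return pod;
  }
}

// Runs both steps through the balancer and returns the status of step 2.
function handshake(balancer: RoundRobinBalancer): number {
  const id = balancer.next().negotiate();
  return balancer.next().connect(id);
}

const twoPods = handshake(new RoundRobinBalancer([new Pod("A"), new Pod("B")]));
const onePod = handshake(new RoundRobinBalancer([new Pod("A")]));
console.log(twoPods, onePod); // 404 101
```

With two pods the second step lands on a pod that never saw the ID, which matches the 404; with one pod both steps hit the same instance.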

On a local server, or when the backend runs in a single pod, it works fine.

I tried adding Redis as a backplane, but I don’t think that fixes this error.

2 Answers

If you are using sticky sessions, you need to use the actual ARRAffinity header that the SignalR server sends during negotiate.

Initial request to negotiate/get transport types:

The server responds with ARRAffinity headers from every server the request passed through. This includes the server where the SignalR connection was actually created.

The response should look similar to:

ARRAffinity: abc1 – domain – signalr server

ARRAffinity: def2 – domain – API gateway.

In the next request, the one that establishes the WebSocket, set ARRAffinity to abc1, not def2. This forwards the request to the server where the connection ID was actually created.

I had the same problem. You need to set a session affinity cookie. In my case, here is my architecture:

I fixed it by adding a session affinity cookie in ingress-nginx, for example:

The last two lines about the cookie are for local development.

The root cause is that the first negotiate request (POST) and the second WebSocket request (GET) are handled by different pods. The token in the second request was issued by the first pod, but the second pod has no record of that token, so it returns 404.
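As a rough illustration of why an affinity cookie helps, here is a minimal sketch. `Backend` and `AffinityBalancer` are hypothetical names, not ingress-nginx or SignalR APIs. The sketch assumes the balancer pins any request carrying a valid cookie to the pod named in it and spreads all other requests round-robin.

```typescript
// Hypothetical backend instance holding connection IDs in local memory.
class Backend {
  readonly name: string;
  private readonly known = new Set<string>();

  constructor(name: string) {
    this.name = name;
  }

  // Issues a connection ID that only this backend can later validate.
  negotiate(): string {
    const id = `${this.name}-conn-${this.known.size}`;
    this.known.add(id);
    return id;
  }

  // 101 models a successful WebSocket upgrade, 404 an unknown connection ID.
  connect(id: string): number {
    return this.known.has(id) ? 101 : 404;
  }
}

// Hypothetical balancer: a request with a valid affinity cookie is pinned to
// the backend named in it; any other request is spread round-robin.
class AffinityBalancer {
  private readonly backends: Backend[];
  private cursor = 0;

  constructor(backends: Backend[]) {
    this.backends = backends;
  }

  route(cookie?: string): Backend {
    const pinned = this.backends.find((b) => b.name === cookie);
    if (pinned !== undefined) return pinned;
    const chosen = this.backends[this.cursor % this.backends.length];
    if (chosen === undefined) throw new Error("no backends configured");
    this.cursor++;
    return chosen;
  }
}

const lb = new AffinityBalancer([new Backend("pod-a"), new Backend("pod-b")]);

// Negotiate arrives without a cookie; the response sets one naming the pod.
const first = lb.route();
const connectionId = first.negotiate();
const affinityCookie = first.name;

// Replaying the cookie pins the WebSocket request to the same pod.
const withCookie = lb.route(affinityCookie).connect(connectionId);
// Dropping the cookie lets round-robin pick the other pod.
const withoutCookie = lb.route().connect(connectionId);

console.log(withCookie, withoutCookie); // 101 404
```

The same connection ID succeeds or fails depending only on whether the cookie accompanies the second request, so the client has to send it back on the WebSocket request.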