I am trying to move a CSV file from a GCS bucket to an AWS S3 bucket.

Considerations –

- The CSV file is dynamically generated, so the schema is unknown

- The filename should stay the same once the file is transferred to the S3 bucket

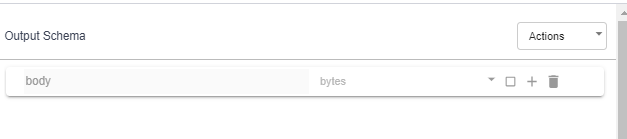

In both cases Cloud Data Fusion (CDF) fails. When I provide a schema with a single column named body of type byte, it fails with the exception ‘Illegal base64 character 5f’.

When a specific schema is given, the file name is changed to part* when the file lands in the S3 bucket. This should be a simple task for Data Fusion. Is there any way to achieve it?

2 Answers

For a solution, I referred to "How to write a file or data to an S3 object using boto3" and used Cloud Functions. CDF does not provide functionality for an effective transfer from GCS to S3.
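A minimal sketch of that Cloud Functions approach, assuming the `google-cloud-storage` and `boto3` packages are available and AWS credentials are supplied through the function's environment. The function names and the target bucket name `my-target-s3-bucket` are hypothetical, and the event fields assume a background function triggered when an object is finalized in GCS. The file is copied as raw bytes, so no schema is needed and the S3 key is the original object name.

```python
import io


def copy_gcs_object_to_s3(gcs_client, s3_client, gcs_bucket, object_name, s3_bucket):
    """Copy one object from GCS to S3 as raw bytes, keeping the same name."""
    blob = gcs_client.bucket(gcs_bucket).blob(object_name)
    data = blob.download_as_bytes()  # raw bytes: the CSV is never parsed
    # Reuse the GCS object name as the S3 key so the filename is preserved.
    s3_client.upload_fileobj(io.BytesIO(data), s3_bucket, object_name)
    return object_name


def handle_gcs_event(event, context):
    """Hypothetical entry point for a GCS-triggered background Cloud Function."""
    # Imported here so the copy helper above can be exercised without the SDKs.
    import boto3
    from google.cloud import storage

    copy_gcs_object_to_s3(
        storage.Client(),
        boto3.client("s3"),
        event["bucket"],          # source GCS bucket from the trigger event
        event["name"],            # object name from the trigger event
        "my-target-s3-bucket",    # hypothetical target bucket name
    )
```

This reads the whole object into memory, which is fine for small CSV files; larger files would need a streamed or chunked copy instead.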

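The illegal base64 error might be caused by wrong encoding of the input CSV file. Character 5f is the underscore (`_`), which is not part of the standard base64 alphabet, so the error suggests plain CSV text is being passed to a base64 decoder.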
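To illustrate why plain CSV text can trigger that kind of error, here is a small Python sketch (not the code Data Fusion runs; the helper name and sample header are made up). It finds the first byte outside the standard base64 alphabet and shows that a strict decoder rejects such input.

```python
import base64
import binascii
import string

# Standard base64 alphabet plus the padding character.
STANDARD_ALPHABET = set(
    (string.ascii_uppercase + string.ascii_lowercase + string.digits + "+/=").encode("ascii")
)


def first_illegal_base64_byte(data: bytes):
    """Return the first byte not allowed in standard base64, or None."""
    for byte in data:
        if byte not in STANDARD_ALPHABET:
            return byte
    return None


def is_strict_base64(data: bytes) -> bool:
    """True only if a strict standard-base64 decode of the data succeeds."""
    try:
        base64.b64decode(data, validate=True)
        return True
    except binascii.Error:
        return False


# Hypothetical CSV header containing an underscore.
sample = b"order_id,amount"
illegal = first_illegal_base64_byte(sample)
print(format(illegal, "x"), is_strict_base64(sample))
```

If the data really were base64, it would contain only letters, digits, `+`, `/` and `=`; an ordinary CSV header with an underscore or comma does not qualify.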

As for the second issue, files written to the AWS S3 bucket are automatically named by Hadoop in the format ‘part-r-taskNumber’. You can try overriding this by setting the file system properties in the plugin to include:

See documentation here