I have read this AWS blog post:

https://aws.amazon.com/blogs/database/part-2-scaling-dynamodb-how-partitions-hot-keys-and-split-for-heat-impact-performance/

I am trying to reproduce the DynamoDB partition split.

My setup is the following:

- One on-demand DynamoDB table containing large items that all share the same partition key

I am running this command on 50 different EC2 instances:

watch -n 0.1 "aws dynamodb query --table-name TABLE_NAME --consistent-read --key-condition-expression 'PK = :PK' --expression-attribute-values '{\":PK\":{\"S\":\"uniquepartition\"}}'"
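For anyone reproducing this from a script rather than the CLI, here is a minimal Python sketch of the same request. It assumes boto3 is installed and credentials are configured. The helper names `build_query_params` and `run_query` are hypothetical; `TABLE_NAME`, `PK` and `uniquepartition` are taken from the command above. `ConsistentRead=True` corresponds to `--consistent-read` and `ConsistentRead=False` to `--no-consistent-read`.

```python
def build_query_params(table_name, pk_value, consistent_read=True):
    """Build the keyword arguments for a DynamoDB Query call.

    Hypothetical helper: it mirrors the CLI flags used in the command above.
    """
    return {
        "TableName": table_name,
        # True  -> strongly consistent read (--consistent-read)
        # False -> eventually consistent read (--no-consistent-read)
        "ConsistentRead": consistent_read,
        "KeyConditionExpression": "PK = :PK",
        "ExpressionAttributeValues": {":PK": {"S": pk_value}},
    }


def run_query(params):
    """Send one Query request with automatic retries disabled."""
    # Imported lazily so build_query_params works without boto3 installed.
    import boto3
    from botocore.config import Config

    # total_max_attempts=1 means only the initial request is made (no retries),
    # matching the "retries disabled" setup described in the question.
    config = Config(retries={"total_max_attempts": 1})
    client = boto3.client("dynamodb", config=config)
    return client.query(**params)


if __name__ == "__main__":
    params = build_query_params("TABLE_NAME", "uniquepartition")
    print(params)
    # run_query(params)  # uncomment to send the request to a real table
```

Keeping the parameter builder separate from the network call makes it easy to flip `consistent_read` and compare the two read modes under the same load.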

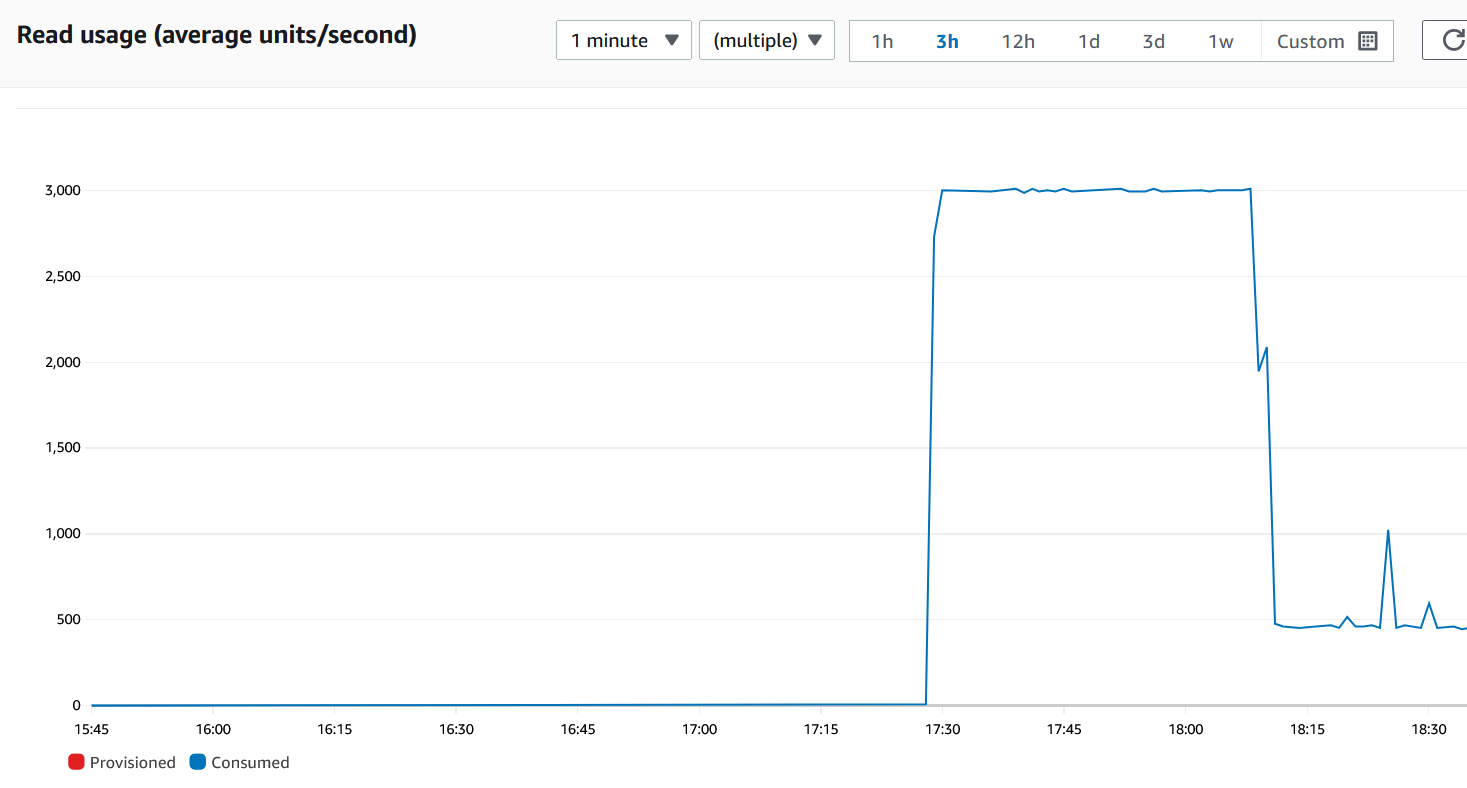

As you can see, it hits the 3000 RCU of the partition but then doesn’t split (and the Read Usage even goes down after a while). I don’t know why…

(I have disabled retries on the CLI)

Any ideas?

2 Answers

--consistent-read means that only the leader node can answer the request, per your linked article. Thus DynamoDB can't split the hot partition.

Use --no-consistent-read instead.

I don't fully grasp your description of your test case, but my assumption is that you are constantly querying the same partition key value with the command from your question.

Since you do not have a sort key, uniquepartition targets a single item, and a single item's maximum throughput is 3000 RCU for strongly consistent reads. DynamoDB cannot split a single item, so this is a hard limit that cannot be avoided.

If, for example, you had a single partition holding many items, all with unique keys, and your reads were distributed evenly across those keys, then DynamoDB would begin to split for heat and could potentially continue to split until each item had its own dedicated partition.
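To put the 3000 RCU figure in perspective, here is a rough back-of-the-envelope sketch in Python. It relies on the documented read unit accounting: one RCU covers one strongly consistent read of up to 4 KB, an eventually consistent read costs half as much, and a Query is charged on the total size of the data it reads, rounded up to the next 4 KB. The function names and the 400 KB example size are assumptions for illustration, not values from the question.

```python
import math

RCU_BLOCK_BYTES = 4 * 1024    # one read unit covers up to 4 KB of data
PARTITION_RCU_LIMIT = 3000    # read limit discussed in this thread


def query_rcu(total_bytes_read, strongly_consistent=True):
    """Estimate the RCUs consumed by one Query reading total_bytes_read."""
    # Sizes are rounded up to the next 4 KB block; a Query costs at least one block.
    blocks = max(1, math.ceil(total_bytes_read / RCU_BLOCK_BYTES))
    # Eventually consistent reads are charged half of a strongly consistent read.
    return blocks if strongly_consistent else blocks / 2


def max_queries_per_second(total_bytes_read, strongly_consistent=True):
    """Estimate how many such Queries per second fit under the 3000 RCU limit."""
    return PARTITION_RCU_LIMIT / query_rcu(total_bytes_read, strongly_consistent)


if __name__ == "__main__":
    size = 400 * 1024  # hypothetical: the Query reads 400 KB of item data
    print(query_rcu(size, True), max_queries_per_second(size, True))
    print(query_rcu(size, False), max_queries_per_second(size, False))
```

With large items, only a few dozen strongly consistent Queries per second are enough to reach the limit under these assumptions, which would be consistent with the plateau in the graph.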

So in summary, you seem to be hitting a single item limit of 3000 RCU as expected.

Again, this is based on my assumptions, so let me know if I understood you correctly.