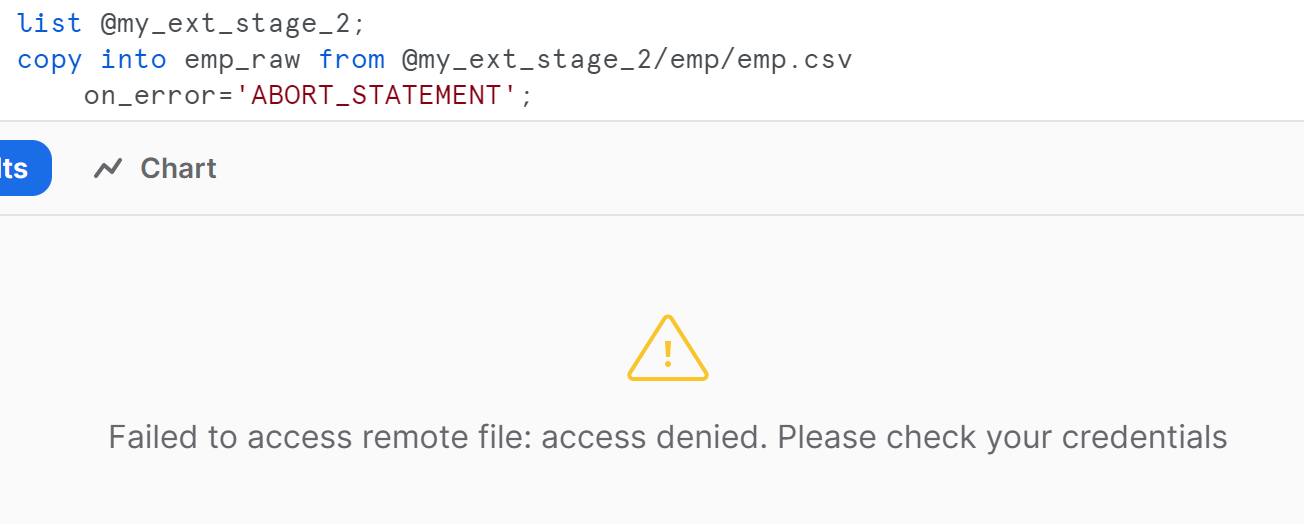

I am trying to load a simple CSV file from S3 into Snowflake using an AWS external stage. I can list the files with the list @stage_name command, but I get an access denied error when loading the file via COPY INTO. I checked the encryption, which is the default SSE-S3 for the file, and according to the Snowflake documentation no additional encryption setting is required for SSE-S3.

Below is the copy command I am using:

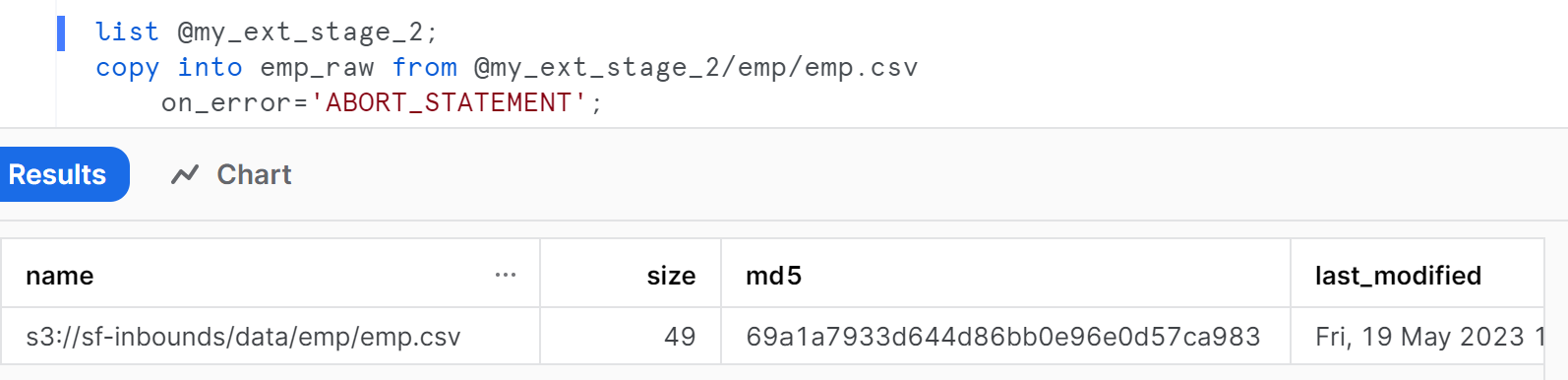

list @my_ext_stage_2;

copy into emp_raw from @my_ext_stage_2/emp/emp.csv
on_error='ABORT_STATEMENT';

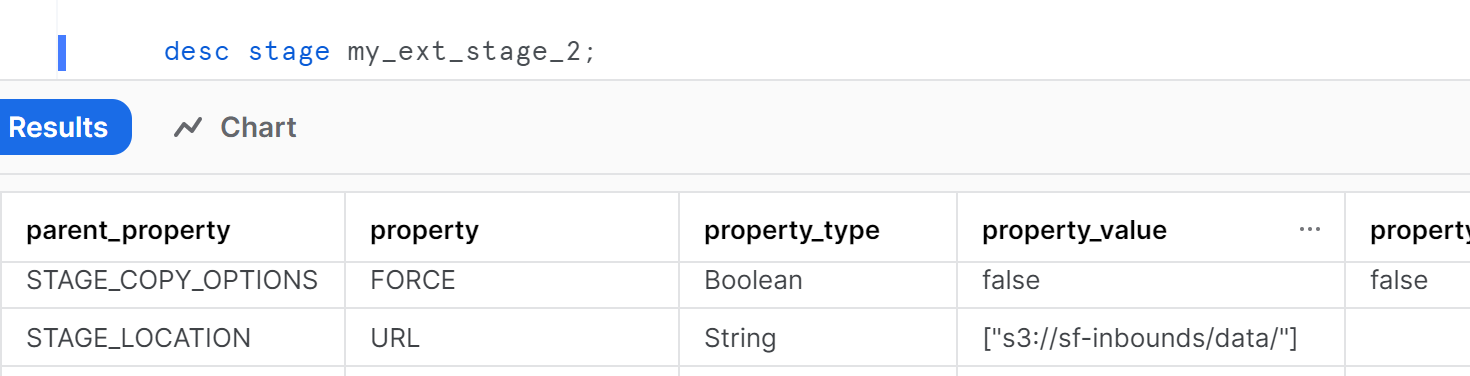

desc stage my_ext_stage_2;

My access policy in AWS is below:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListObjectsInBucket",
            "Effect": "Allow",
            "Action": [
                "s3:ListBucket"
            ],
            "Resource": [
                "arn:aws:s3:::my-bucket-name"
            ]
        },
        {
            "Sid": "AllObjectActions",
            "Effect": "Allow",
            "Action": "s3:*Object",
            "Resource": [
                "arn:aws:s3:::my-bucket-name/*"
            ]
        }
    ]
}

Thanks for helping!

2 Answers

As expected, the issue was with the policy permissions. Permissions beyond s3:*Object and s3:ListBucket were required.


With the updated policy, I was able to load data from a file into a Snowflake table and to unload from the table into an S3 directory as well.

Snowflake requires the following permissions on an S3 bucket and folder to be able to access files in the folder (and sub-folders): s3:GetBucketLocation, s3:GetObject, s3:GetObjectVersion, and s3:ListBucket.

In your policy, the s3:*Object action uses a wildcard as part of the action name. The AllObjectActions statement allows GetObject, DeleteObject, PutObject, and any other Amazon S3 action that ends with the word "Object". This means that, for example, s3:GetBucketLocation is not allowed, so a read-only policy has to name the actions it needs explicitly. For more information have a look here.
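As a rough illustration of the wildcard behaviour described above, the Python sketch below checks which read actions the question's policy leaves uncovered and then builds a read-only policy that names them explicitly. Some assumptions: the list of required actions is taken from Snowflake's documentation as I understand it, not from this thread; the helper names (is_allowed, missing_actions, read_only_policy) are made up for this example; and Python's fnmatch is only an approximation of how IAM evaluates the * wildcard, not AWS's actual policy engine.

```python
import json
from fnmatch import fnmatchcase

# Actions allowed by the policy posted in the question.
QUESTION_ACTIONS = ["s3:ListBucket", "s3:*Object"]

# Assumption: read actions that Snowflake's documentation lists for an S3 stage.
REQUIRED_READ_ACTIONS = [
    "s3:GetBucketLocation",
    "s3:GetObject",
    "s3:GetObjectVersion",
    "s3:ListBucket",
]


def is_allowed(action, patterns):
    """Return True if any pattern matches the action.

    IAM action names are case-insensitive, so both sides are lowercased.
    """
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)


def missing_actions(required, patterns):
    """Return the required actions that none of the patterns cover."""
    return [a for a in required if not is_allowed(a, patterns)]


def read_only_policy(bucket):
    """Build a read-only policy dict for a (placeholder) bucket name."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level actions apply to the keys inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                "Resource": [f"{bucket_arn}/*"],
            },
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [bucket_arn],
            },
        ],
    }


if __name__ == "__main__":
    # Actions the question's policy does not cover.
    print(missing_actions(REQUIRED_READ_ACTIONS, QUESTION_ACTIONS))
    # Policy JSON that could be pasted into the IAM console after review.
    print(json.dumps(read_only_policy("my-bucket-name"), indent=2))
```

The first print shows s3:GetBucketLocation and s3:GetObjectVersion as uncovered, since neither name ends in "Object". For unloading or purging files, s3:PutObject and s3:DeleteObject would also be needed; the question's s3:*Object pattern already matches both.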