I want to index a small set of PDF documents (only 5).

All I want to do is run the Text Split skill (SplitSkill) followed by the AzureOpenAIEmbeddingSkill. Here is my skillset template:

{
  "name": "{{ skillSetName }}",
  "description": "Skillset to chunk documents and generate embeddings",
  "skills": [
    {
      "@odata.type": "#Microsoft.Skills.Text.SplitSkill",
      "textSplitMode": "pages",
      "defaultLanguageCode": "de",
      "maximumPageLength": 1000,
      "pageOverlapLength": 100,
      "inputs": [
        {
          "name": "text",
          "source": "/document/content"
        }
      ],
      "outputs": [
        {
          "name": "textItems",
          "targetName": "pages"
        }
      ]
    },
    {
      "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
      "name": "OpenAIEmbeddings",
      "description": "Convert chunk content to embeddings",
      "resourceUri": "{{ embeddingEndpoint }}",
      "deploymentId": "{{ embeddingDeployment }}",
      "context": "/document/pages/*",
      "inputs": [
        {
          "name": "text",
          "source": "/document/pages/*"
        }
      ],
      "outputs": [
        {
          "name": "embedding"
        }
      ]
    }
  ],
  "indexProjections": {
    "selectors": [
      {
        "targetIndexName": "{{ targetIndexName }}",
        "parentKeyFieldName": "parent_id",
        "sourceContext": "/document/pages/*",
        "mappings": [
          {
            "name": "content",
            "source": "/document/pages/*"
          },
          {
            "name": "content_vector",
            "source": "/document/pages/*/embedding"
          }
        ]
      }
    ],
    "parameters": {
      "projectionMode": "skipIndexingParentDocuments"
    }
  }
}

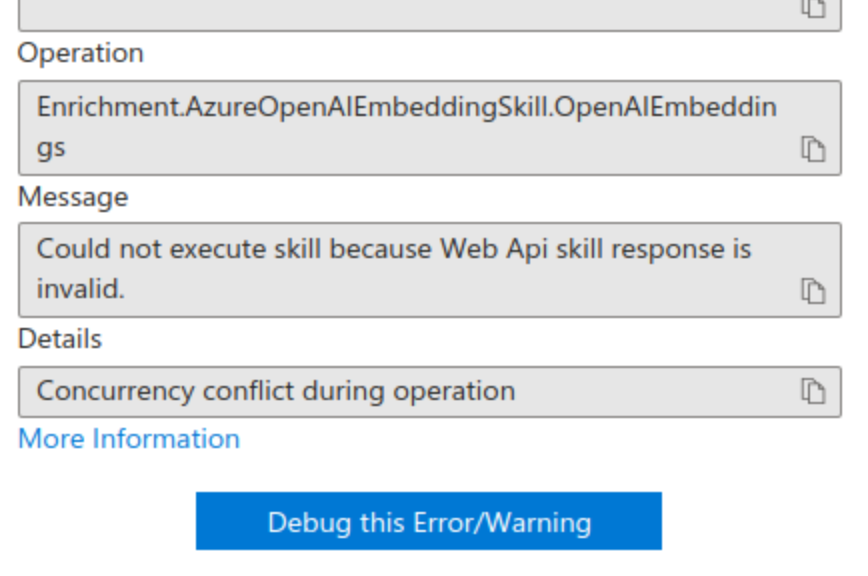

However, for my longest PDFs (30 pages) I get the following error:

Error Message: Could not execute skill because Web Api skill response is invalid.

Details: Concurrency conflict during operation

I read that Azure OpenAI only supports a batch size of 16 for embeddings.

I played around with one document, splitting it into chunks of 300 characters and setting the maximumPagesToTake parameter to 16, but that ended in the same error.

I'm out of ideas, and as the AzureOpenAIEmbeddingSkill is not open source I can't see whether they wrap the calls to Azure OpenAI in retries or split them into mini-batches…
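To make the mini-batch idea concrete, here is a minimal sketch of what such wrapping could look like on the client side. It says nothing about what the AzureOpenAIEmbeddingSkill does internally. `embed_batch` is a hypothetical callable standing in for whatever client sends one batch to the embedding deployment, and the batch size of 16, the retry count and the backoff are assumptions.

```python
import time
from typing import Callable, List, Sequence


def embed_in_batches(
    texts: Sequence[str],
    embed_batch: Callable[[List[str]], List[List[float]]],  # hypothetical client call
    batch_size: int = 16,          # assumed per-request limit
    max_retries: int = 3,          # assumed retry budget per batch
    backoff_seconds: float = 1.0,  # base delay, doubled after each failed attempt
) -> List[List[float]]:
    """Embed texts in fixed-size batches, retrying each failed batch."""
    vectors: List[List[float]] = []
    for start in range(0, len(texts), batch_size):
        batch = list(texts[start:start + batch_size])
        for attempt in range(max_retries + 1):
            try:
                vectors.extend(embed_batch(batch))
                break  # batch succeeded, move on to the next one
            except Exception:
                if attempt == max_retries:
                    raise  # retry budget exhausted, surface the error
                time.sleep(backoff_seconds * 2 ** attempt)
    return vectors
```

With 40 chunks this would issue requests of 16, 16 and 8 texts, repeating only the batch that failed.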

Update: Here is a screenshot of the indexer error:

Can somebody help?

2 Answers

I ended up comparing the JSON files for my raw REST API calls with the ones that are created when you use the Portal.

I finally fixed it by copying some parameters from the automatically created one into mine.

I think the key parameter that must be set is maximumPagesToTake=0. Setting it to zero makes the skill take all pages. I would have thought that was the default behaviour if I didn't set it at all (but obviously my expectation was wrong). The documentation should be updated to reflect the meaning of this parameter, and also that it actually is a REQUIRED parameter.
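As a rough sketch of the fix described above, the helper below sets the parameter on every SplitSkill in a skillset definition before it is sent to the REST API. It assumes the parameter is spelled `maximumPagesToTake`, as in the question, and that the API version in use accepts it. `set_take_all_pages` is a made-up helper name, not part of any SDK.

```python
import copy

SPLIT_SKILL_TYPE = "#Microsoft.Skills.Text.SplitSkill"


def set_take_all_pages(skillset: dict) -> dict:
    """Return a copy of the skillset with maximumPagesToTake=0 on every SplitSkill."""
    patched = copy.deepcopy(skillset)  # leave the caller's template untouched
    for skill in patched.get("skills", []):
        if skill.get("@odata.type") == SPLIT_SKILL_TYPE:
            # Assumption from the answer above: 0 means "take all pages".
            skill["maximumPagesToTake"] = 0
    return patched
```

The patched dictionary can then be serialized with `json.dumps` and used as the request body in place of the original template.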

I tried your JSON template and it’s working for me. I also added the target name.

Output:

I do not find any Web API skill in the JSON.

If you want to use a Custom Web API skill in Azure AI Search, refer to this link.

You can check the required template in the Azure portal.

I have added indexes using the documentation. The AnalyzeDocumentOptions.Pages property can accept a page list or a page number as input. Integrate the AnalyzeDocumentOptions.Pages property into the existing code to limit the analysis to only the first 5 pages. I tried a sample from Azure Samples search with dotnet, using git and SO.