I’m trying to identify the most recently added files and transfer their information to an Azure SQL Database table. The problem is that the new files are added to subfolders, and I don’t know how to search within them. Is it possible to search within the subfolders of the container itself?

If this process cannot be achieved with ADF, is there another configuration that I could use?

2 Answers

And this is the problem that occurs at the source activity of the data flow:

Because your folder structure is nested, the Get Metadata activity won’t recognize a wildcard path for the file names, so it requires nested pipelines, one per folder level. With two folder levels, for example, a parent pipeline has to call a child pipeline in a loop.

To avoid nested pipelines, you can use a data flow. First, get the list of all file paths in the folder structure using the data flow. Then pass this list to a ForEach activity with the logic below.

Set your source dataset path only up to the container level, like below.

Give this dataset to the data flow, and in the data flow source settings, use the wildcard path `folder/*/*` and add the column `filepath`.

If you want, you can also add the Start time (`subDays(currentUTC(),1)`) and End time (`currentUTC()`) filters for the files, but make sure to adjust the expressions for your time zone.

Whatever the file structure is, the source merges the rows of all the files and adds a column that holds the file path of each row, like below.
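The Start time and End time filters amount to a one-day window on each file's last-modified timestamp. Here is a minimal Python sketch of that idea, outside ADF. The function names, the sample paths, and the half-open interval (start inclusive, end exclusive) are assumptions made for illustration, not something the data flow source is confirmed to do.

```python
from datetime import datetime, timedelta, timezone


def modified_window(now=None, days_back=1):
    """Return (start, end), mirroring subDays(currentUTC(), 1) and currentUTC()."""
    end = now or datetime.now(timezone.utc)
    start = end - timedelta(days=days_back)
    return start, end


def files_in_window(files, start, end):
    """Keep paths whose last-modified time falls in [start, end).

    `files` is an iterable of (path, last_modified) pairs (hypothetical shape).
    The half-open interval is an assumption for this sketch.
    """
    return [path for path, modified in files if start <= modified < end]


if __name__ == "__main__":
    # Hypothetical sample data; real values would come from the storage account.
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    start, end = modified_window(now)
    sample = [
        ("folder/a/new.csv", datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)),
        ("folder/b/old.csv", datetime(2023, 12, 30, 9, 0, tzinfo=timezone.utc)),
    ]
    print(files_in_window(sample, start, end))
```

Working in UTC throughout keeps the comparison unambiguous; shifting `now` by your local offset is the equivalent of changing the expression for your time zone.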

Now, use an aggregate transformation on this. In the Group by, use the column `filepath`, and add any sample column `count` for the aggregate with the expression `count(<any column name>)`. This will give a result like below.
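The aggregate step collapses the merged rows into one row per file path. A minimal Python sketch of the same grouping, assuming each row is a dictionary carrying a `filepath` key (the row shape, function name, and sample values are hypothetical):

```python
from collections import Counter


def distinct_file_paths(rows, path_column="filepath"):
    """Group rows by file path and count the rows that came from each file."""
    counts = Counter(row[path_column] for row in rows)
    # Counter keeps first-seen order, so paths come out in the order they appeared.
    return [{"filepath": path, "count": n} for path, n in counts.items()]


if __name__ == "__main__":
    # Hypothetical merged rows, as the source would emit them with the added column.
    rows = [
        {"id": 1, "filepath": "/folder/a/file1.csv"},
        {"id": 2, "filepath": "/folder/a/file1.csv"},
        {"id": 3, "filepath": "/folder/b/file2.csv"},
    ]
    for entry in distinct_file_paths(rows):
        print(entry)
```

The count itself is not used later; it only exists because an aggregate needs at least one aggregate column, which is why any column name works in the expression.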

Then, use a select transformation with a rule-based mapping to select only the `filepath` column, like below.

In the data flow sink, use a cache sink and select Write to activity output.

In the pipeline, add a Data flow activity and give it the configuration below.

If you execute the Data flow activity, it will output the array of file paths, like below.

Give the expression below to the ForEach activity.

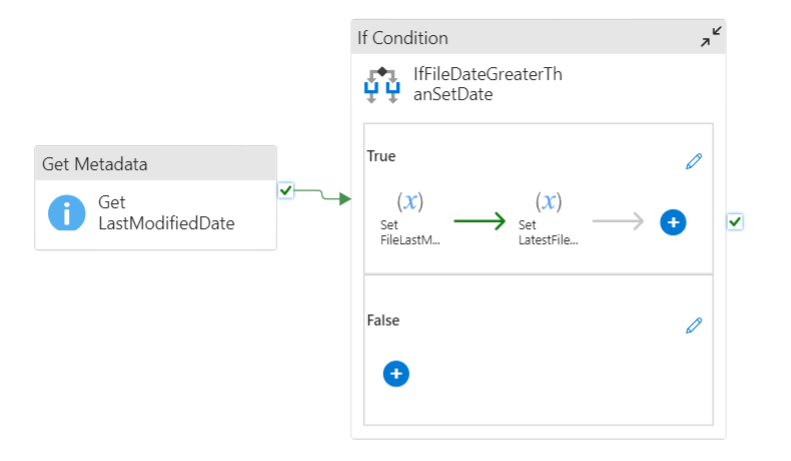

Now use the above logic to get the latest file, with `@item().filepath` in every iteration.
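The per-iteration logic is not spelled out here, so the following Python sketch is only one plausible reading: it assumes each item carries its `filepath` plus a `lastModified` timestamp (for example, one looked up per file with a Get Metadata activity) and keeps whichever is newest. The item shape, function name, and sample values are all hypothetical.

```python
from datetime import datetime, timezone


def latest_file(items):
    """Return the item with the newest lastModified value, or None if empty."""
    latest = None
    for item in items:  # plays the role of the ForEach loop over file paths
        if latest is None or item["lastModified"] > latest["lastModified"]:
            latest = item
    return latest


if __name__ == "__main__":
    # Hypothetical items; in a pipeline the timestamps would be looked up per file.
    items = [
        {"filepath": "/folder/a/file1.csv",
         "lastModified": datetime(2024, 1, 1, 8, 0, tzinfo=timezone.utc)},
        {"filepath": "/folder/b/file2.csv",
         "lastModified": datetime(2024, 1, 2, 8, 0, tzinfo=timezone.utc)},
    ]
    print(latest_file(items)["filepath"])
```

In a pipeline the running maximum would typically live in a variable, and a ForEach that updates a shared variable generally needs to run sequentially for the comparison to be reliable.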