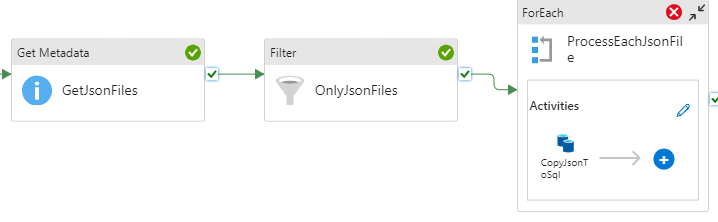

I have a ForEachFile activity that get the files from GetMetaData.The files is json.

Within this ForEachFile activity I have a copy that should insert the contents into a sql server database table and in addition add the current filename as an extra column to the database table.

So I have google and find several example where they use the feature additional columns. So I thought that this should be easy.

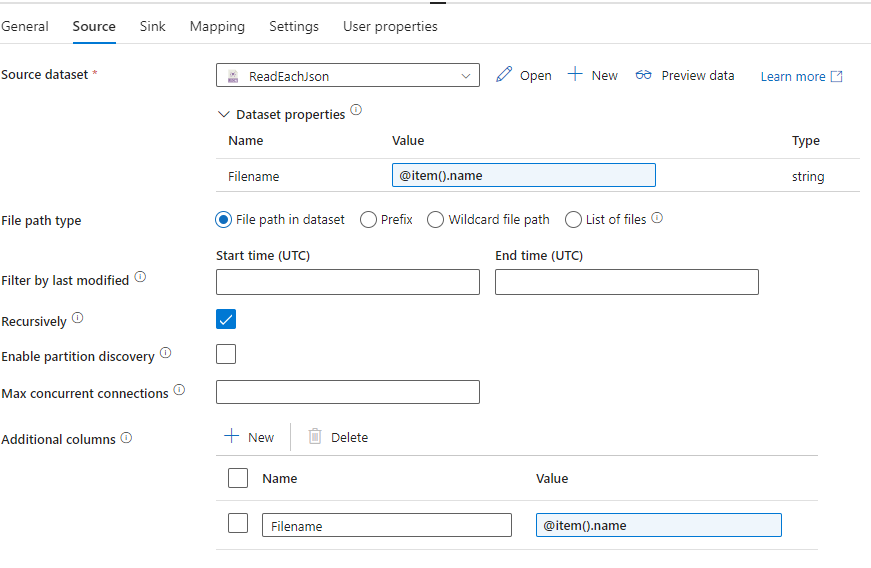

So in the additional columns in the Source I add a dynamic column with Filename and value as @item.name because each iteration in the ForEachFile loop will have @item.name as the filename.Here is a screen shot for ther Source

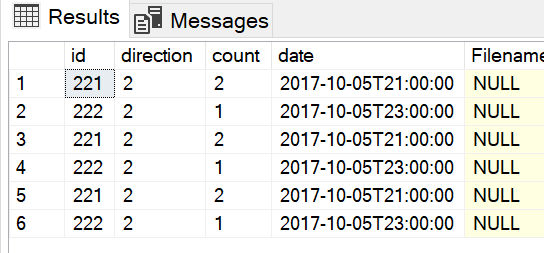

All the values in the json file is inserted into the database but the filename is empty.

If I create the table first and then run the pipeline and can see that the Filename column is null. If I let sink create the table I can see that no Filename column is created.

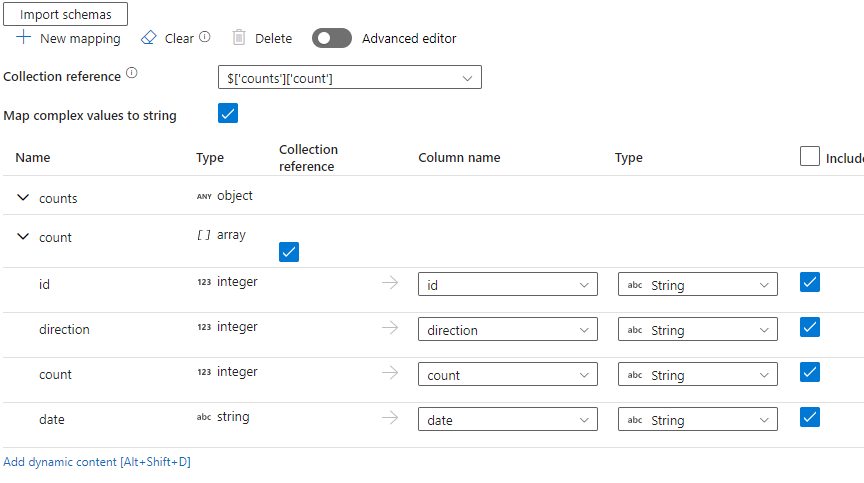

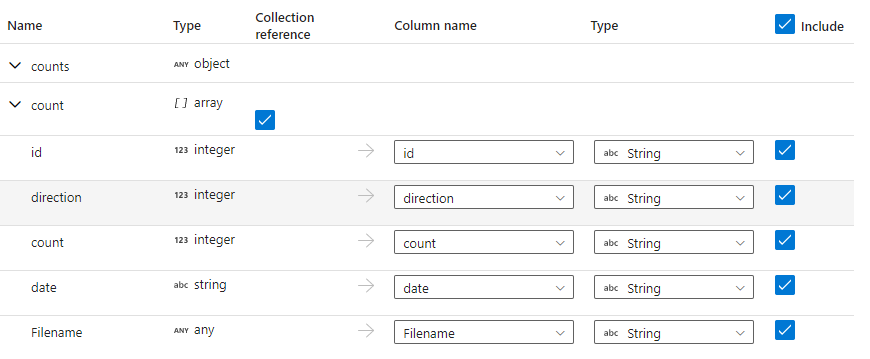

When I have added this to the mapping

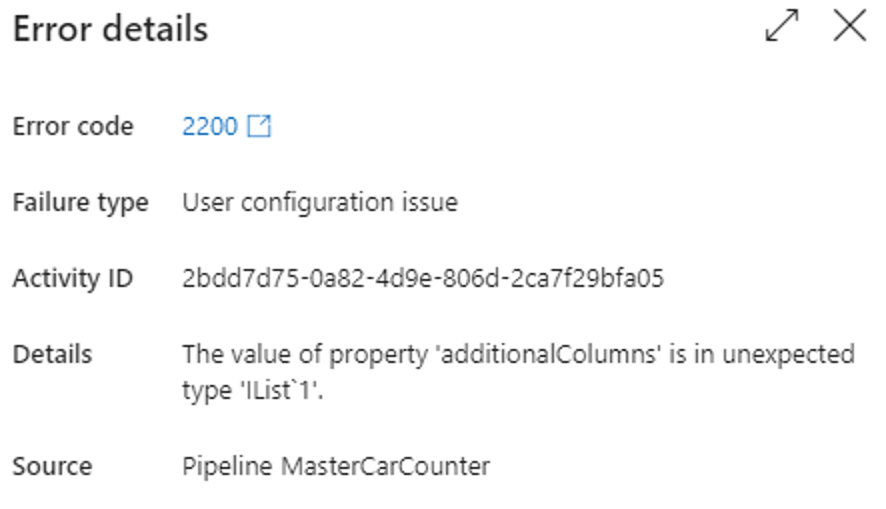

Here is the input to activity copyJsonToSql. Note I have three of these because I have three files so the value of "additionalColumns" is different

{

"source": {

"type": "JsonSource",

"additionalColumns": "516e59b7-13cd-46e9-a0c7-1d04e16b4b00.json",

"storeSettings": {

"type": "AzureBlobStorageReadSettings",

"recursive": true,

"enablePartitionDiscovery": false

},

"formatSettings": {

"type": "JsonReadSettings"

}

},

"sink": {

"type": "SqlServerSink",

"writeBehavior": "insert",

"sqlWriterUseTableLock": false,

"tableOption": "autoCreate"

},

"enableStaging": false,

"translator": {

"type": "TabularTranslator",

"mappings": [

{

"source": {

"path": "[‘id’]"

},

"sink": {

"name": "id",

"type": "String"

}

},

{

"source": {

"path": "[‘direction’]"

},

"sink": {

"name": "direction",

"type": "String"

}

},

{

"source": {

"path": "[‘count’]"

},

"sink": {

"name": "count",

"type": "String"

}

},

{

"source": {

"path": "[‘date’]"

},

"sink": {

"name": "date",

"type": "String"

}

},

{

"source": {

"path": "[‘Filename’]"

},

"sink": {

"name": "Filename",

"type": "String"

}

}

],

"collectionReference": "$[‘counts’][‘count’]",

"mapComplexValuesToString": true

}

}

Here I have added the relevant activity in the pipeline

Now I get null as the filename in spite having added Filename as an addition columns as @item().name. I also used Add dynamic content and here I choose processeachJsonFile current item.

New screen shot

Many thanks in advance

Tony Johansson

2

Answers

I manage to solve it as I indented in the first place. In the foreach activity I have a copy called CopyJsonToSql and after that a stored procedure. In the CopyJsonToSql I insert the data from the Json files into the db table and the column called filename will be null because there is no data in the jsonfile called Filename. In the stored procedure SaveFilenameToSql I pass the filename as @item().name. The stored procedure update ther database table with correct filename. The stored procedure look