I know there are a few ways to copy containers from a storage account in one subscription to a storage account in another subscription.

- Using the azcopy tool, but I do not have the access needed to generate a SAS for this.

- Through Storage Explorer, but I can't copy manually because there are hundreds of containers.

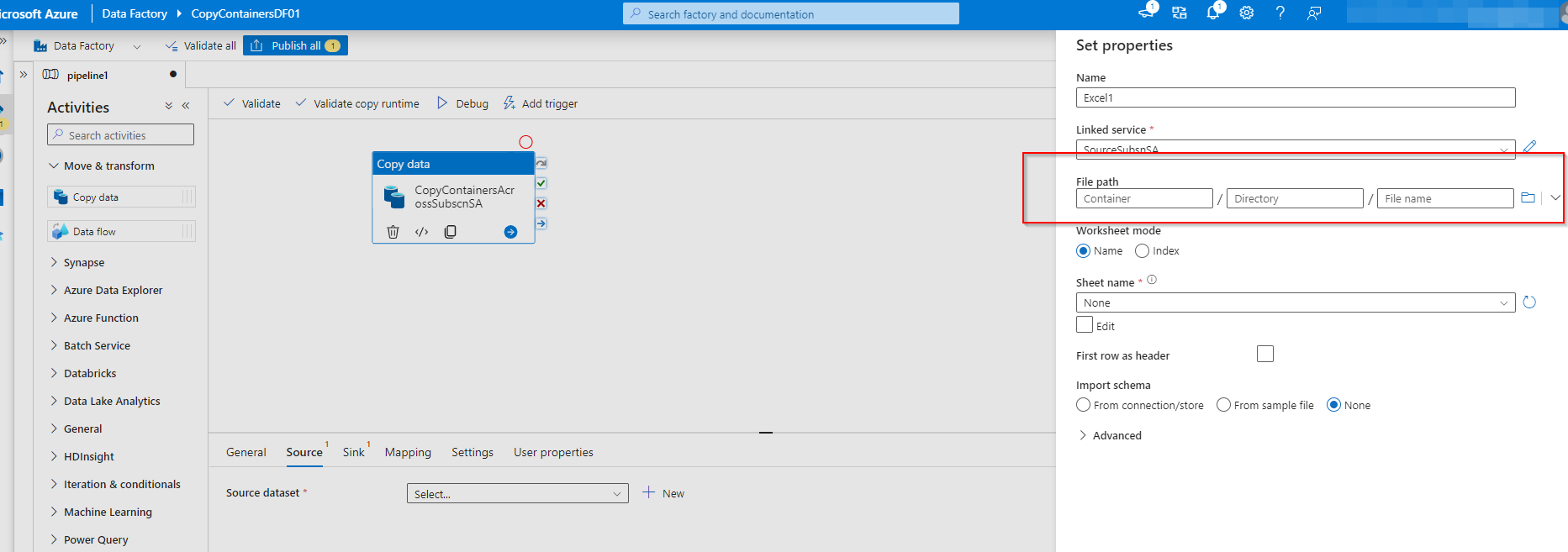

- Through ADF, but I can't find an option to select multiple containers when browsing the File path:

Could anyone help me select multiple containers, or all containers, in a storage account and copy them to a storage account in another subscription, using ADF or any other technique?

Note:

- I found a similar question on SO, but my ADF pipeline does not show the same UI, and that question has no complete steps for the process.

- The files inside the containers are in Excel format.

2 Answers

If you have multiple containers to copy, you can add multiple Copy activities or utilize dynamic content and parameters to iterate through the containers.

Define pipeline parameters:

Define pipeline parameters that will hold the information about the source and destination containers. For example, you can create parameters like SourceContainerName and DestinationContainerName.

Use dynamic content in Copy activity:

In the Copy activity, specify the source and destination containers using dynamic content referencing the pipeline parameters. For example, you can use `@pipeline().parameters.SourceContainerName` for the source container and `@pipeline().parameters.DestinationContainerName` for the destination container.

Iterate through containers:

Add a ForEach activity to the pipeline. Configure the ForEach activity to iterate over a list of container names or any other suitable mechanism for obtaining the container names dynamically. For example, you can use an array variable or retrieve the container names from a data source like a SQL table.

Set item in parameters:

Inside the ForEach activity, set the source and destination container values for each iteration. Because pipeline parameters cannot be changed while a pipeline is running, pass the current item to the Copy activity's dataset parameters using dynamic content, such as `@item()` or `@{item().ContainerName}`. The `item()` function or `item().ContainerName` represents the current container being iterated.

There might be no direct way to select all the containers in Azure Data Factory for Azure Blob Storage. If you have all the container names, you can loop through them to copy each container to your destination.
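As a rough illustration of the looping approach, here is a minimal Python sketch that builds a pipeline definition with a ForEach activity wrapping one Copy activity and prints it as JSON. The pipeline, activity, and dataset names (`CopyAllContainers`, `SourceBinary`, `SinkBinary`), the `ContainerNames` array parameter, and the `ContainerName` dataset parameter are all assumptions made for this example, and the JSON shape should be checked against what your own factory exports.

```python
import json


def build_copy_all_containers_pipeline(container_names):
    """Return a pipeline definition (as a dict) that copies each container in turn."""
    copy_activity = {
        "name": "CopyOneContainer",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{
            "referenceName": "SourceBinary",  # hypothetical source dataset
            "type": "DatasetReference",
            # The current ForEach item is passed to the dataset parameter.
            "parameters": {"ContainerName": {"value": "@item()", "type": "Expression"}},
        }],
        "outputs": [{
            "referenceName": "SinkBinary",  # hypothetical sink dataset
            "type": "DatasetReference",
            "parameters": {"ContainerName": {"value": "@item()", "type": "Expression"}},
        }],
        "typeProperties": {
            "source": {"type": "BinarySource"},
            "sink": {"type": "BinarySink"},
        },
    }
    for_each = {
        "name": "ForEachContainer",  # hypothetical activity name
        "type": "ForEach",
        "typeProperties": {
            # Iterate over the array held in the (assumed) pipeline parameter.
            "items": {"value": "@pipeline().parameters.ContainerNames", "type": "Expression"},
            "activities": [copy_activity],
        },
    }
    return {
        "name": "CopyAllContainers",  # hypothetical pipeline name
        "properties": {
            "parameters": {
                "ContainerNames": {"type": "Array", "defaultValue": list(container_names)}
            },
            "activities": [for_each],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_copy_all_containers_pipeline(["reports", "invoices"]), indent=2))
```

Using the same container name on both sides keeps the sketch short; a list of objects with separate source and destination names would work the same way with `@item().ContainerName` style expressions.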

To get the list of containers, you can use the REST API as shown in this Microsoft document.

Use a Web activity to call the REST API, then loop through the container names from the response. Inside the ForEach loop, use a Copy data activity to copy each container to the destination storage account.
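To show what "the container names from the response" looks like, here is a small Python sketch that extracts the names from a List Containers style XML response. The sample response is hand-written for illustration (the account and container names are made up), and it assumes the `EnumerationResults/Containers/Container/Name` layout of the Blob service List Containers operation; it does not call Azure.

```python
import xml.etree.ElementTree as ET

# Hand-written sample in the shape of a List Containers response (illustrative only).
SAMPLE_RESPONSE = """<EnumerationResults ServiceEndpoint="https://myaccount.blob.core.windows.net/">
  <Containers>
    <Container><Name>reports</Name></Container>
    <Container><Name>invoices</Name></Container>
  </Containers>
  <NextMarker />
</EnumerationResults>"""


def parse_container_list(xml_text):
    """Return (container_names, next_marker) from a List Containers XML body."""
    root = ET.fromstring(xml_text)
    names = [c.findtext("Name") for c in root.findall("./Containers/Container")]
    # An empty NextMarker means there are no further pages of results.
    next_marker = root.findtext("NextMarker") or None
    return names, next_marker


if __name__ == "__main__":
    names, marker = parse_container_list(SAMPLE_RESPONSE)
    print(names, marker)
```

With hundreds of containers the listing may be paged, so a non-empty `NextMarker` would need to be passed back on the next request until it comes back empty.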

The source dataset (binary dataset) of the copy data activity is as shown in the below image:

Use `preserve hierarchy` as the copy behavior to copy the container as is (I have taken a parameter as an example here).
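Since the image is not reproduced here, the following Python sketch shows roughly what a parameterized Binary source dataset and a sink with the preserve hierarchy behavior could look like as JSON. The dataset name `SourceBinary`, the linked service name `SourceBlobStorage`, and the parameter name `ContainerName` are assumptions for illustration, not values taken from the answer.

```python
import json

# Hypothetical Binary dataset whose container is supplied through a dataset parameter.
source_dataset = {
    "name": "SourceBinary",
    "properties": {
        "type": "Binary",
        "linkedServiceName": {
            "referenceName": "SourceBlobStorage",  # assumed linked service name
            "type": "LinkedServiceReference",
        },
        "parameters": {"ContainerName": {"type": "String"}},
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                # Resolved at run time from the value passed in by the Copy activity.
                "container": {"value": "@dataset().ContainerName", "type": "Expression"},
            }
        },
    },
}

# Sink settings for the Copy activity, keeping the source folder structure.
sink_settings = {
    "type": "BinarySink",
    "storeSettings": {
        "type": "AzureBlobStorageWriteSettings",
        "copyBehavior": "PreserveHierarchy",
    },
}

if __name__ == "__main__":
    print(json.dumps(source_dataset, indent=2))
    print(json.dumps(sink_settings, indent=2))
```

Leaving the folder and file name out of the dataset location is what lets one dataset point at a whole container.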