Background

I’m working with an ASP.NET Core 9 Web API that takes a (potentially large) file upload from one of our client apps, streams it to a temporary file on the server, does some processing, then uploads a re-packaged version of the file to blob storage and sends some metadata about it to a database. These all run in Azure (Azure Container Apps, Azure Blob Storage, Azure SQL DB). The request is Content-Type: multipart/form-data, with a single section for the file:

Content-Disposition: form-data; name=""; filename="<some_file_name>"

Observation

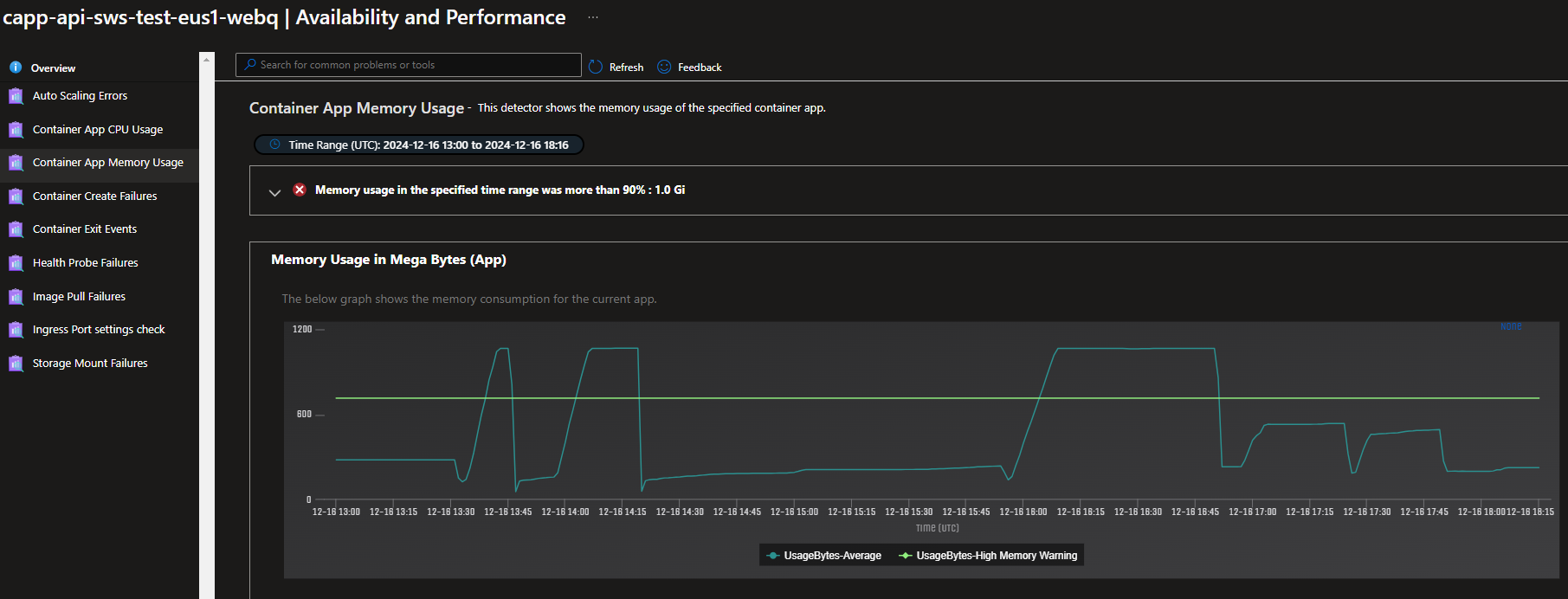

The problem I observe is that the memory usage of the container roughly follows the size of the file being uploaded, causing the container to run out of memory (see screenshot). I was under the impression that streaming the upload directly to file storage should avoid using more than what’s needed for the stream to buffer.

Attempted Solutions

The code mostly follows the example from the “Upload files in ASP.NET Core” documentation, except that 1) there is less case-checking here to keep it simple for testing, and 2) I am constrained to working with the stream of the file upload, since the real code will pass the stream along to a client’s library, which will inflate it, process it, etc. This code causes the observed memory problem.

    /// <summary>
    /// Adds a new Document
    /// </summary>
    [HttpPost("test", Name = nameof(AddDocumentAsync))]
    [DisableFormValueModelBinding]
    [DisableRequestSizeLimit]
    [ProducesResponseType(StatusCodes.Status201Created)]
    [ProducesResponseType(StatusCodes.Status400BadRequest)]
    public async Task<ActionResult> AddDocumentAsync()
    {
        if ( !HttpContext.Request.HasFormContentType )
            return BadRequest("No file uploaded.");

        string boundary = HttpContext.Request.GetMultipartBoundary();
        if ( string.IsNullOrEmpty(boundary) )
            return BadRequest("Invalid multipart form-data request.");

        // Read the first (and only expected) multipart section without model binding.
        MultipartReader multipartReader = new MultipartReader(boundary, HttpContext.Request.Body);
        MultipartSection? section = await multipartReader.ReadNextSectionAsync();
        if ( section == null )
            return BadRequest("No file found in request body.");

        FileMultipartSection? fileSection = section.AsFileSection();
        if ( fileSection?.FileStream == null )
            return BadRequest("Invalid file.");

        // Stream the upload straight to a randomly named temporary file.
        string tempDirectory = Path.GetTempPath();
        string tmpPath = Path.Combine(tempDirectory, Path.GetRandomFileName());
        using ( FileStream fs = new FileStream(tmpPath, FileMode.Create) )
            await fileSection.FileStream.CopyToAsync(fs);

        return Created();
    }

I observed the file growing in /tmp, but, unfortunately, the memory usage grew at roughly the same rate.

If I change the destination so the file is streamed from the fileSection.FileStream to blob storage instead of to a local file, I do not observe the memory issues.

I also tried using a minimal API with model binding for IFormFile. I saw from here that, by default, if the file is over 64k, it will be buffered to disk, which is what I would want. I noticed the file growing in /tmp, but unfortunately the memory usage grew at the same rate with this solution as well.

I also tried mounting a storage volume for the container, since I wondered whether the container was using memory because no storage volume was mounted. I mounted an Azure Files instance at /blah and changed the destination for the temporary file from /tmp to /blah. The file was correctly streamed into the Azure Files storage instance, but the memory issue still occurred, just as in the other cases.

Finally, I attempted this same code (the snippet posted above) in an Azure Web Services app and did not observe the memory increase problem. Similarly, I ran the application locally and did not observe my system or process memory increase in the way it did in the Azure Container App.

UPDATE: In response to comments, I also tried downloading a file from blob storage to the container app. This also causes the memory usage of the container to increase according to the size of the file being downloaded. The code snippet below was used.

    [HttpGet("test", Name = nameof(TestDocumentAsync))]
    [ProducesResponseType(StatusCodes.Status200OK)]
    public async Task<ActionResult> TestDocumentAsync()
    {
        string tempDirectory = Path.GetTempPath();
        string tmpPath = Path.Combine(tempDirectory, Path.GetRandomFileName());

        // Download a known blob directly into a temporary file.
        BlobClient blobClient = _blobContainerClient.GetBlobClient("c1f04a61-5ec3-43a8-b7ad-de51ae5185bb.tmp");
        using ( FileStream fs = new FileStream(tmpPath, FileMode.Create) )
            await blobClient.DownloadToAsync(fs);

        return Ok();
    }

Question

I assume I am misunderstanding or misusing something here. What is the proper way to stream a large (1GB to ?GB) multipart/form-data file upload to temporary storage for processing and subsequent deletion when dealing with Azure Container Apps and ASP.NET Core? Alternatively, how can the memory usage be explained, given that it occurs even with a simple download from blob storage?

2 Answers

One thing you could try is to Flush the Stream every time the buffer is written to disk.
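
As a minimal sketch of that suggestion, the helper below replaces CopyToAsync with a manual copy loop that flushes after every chunk. The class name FlushingCopy, the method CopyToFileAsync, and the 80 KB buffer size are assumptions made for illustration; they do not come from the original post. Nothing in this thread confirms that flushing changes the memory reported for the container, so treat it as something to test rather than a fix.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

public static class FlushingCopy
{
    // Hypothetical helper (not from the original post): copies `source` into a new
    // file at `path` in fixed-size chunks and flushes after each chunk is written.
    // Returns the total number of bytes copied.
    public static async Task<long> CopyToFileAsync(
        Stream source, string path, int bufferSize = 81920, CancellationToken ct = default)
    {
        byte[] buffer = new byte[bufferSize];
        long total = 0;

        await using FileStream fs = new FileStream(
            path, FileMode.Create, FileAccess.Write, FileShare.None, bufferSize, useAsync: true);

        int read;
        while ((read = await source.ReadAsync(buffer.AsMemory(0, bufferSize), ct)) > 0)
        {
            await fs.WriteAsync(buffer.AsMemory(0, read), ct);

            // Flush(true) clears the FileStream buffer and also asks the OS to write
            // its intermediate file buffers to disk. Doing this per chunk is slow;
            // flushing every N chunks is a reasonable variation.
            fs.Flush(flushToDisk: true);

            total += read;
        }

        return total;
    }
}
```

In the controller from the question, the call would replace the CopyToAsync line, for example `await FlushingCopy.CopyToFileAsync(fileSection.FileStream, tmpPath);`.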

Thanks, @Magnus. As Magnus suggested, streaming directly to Azure Blob Storage, or saving to temporary local storage with chunked processing, is a good approach, but here you want to open a SQLite connection to the file.

You can’t directly open an SQLite connection to a file stored in Azure Blob Storage as if it were a local file.

The controller below handles large file uploads and streams them directly to a temporary file.

Complete code: