I have a Spark application that needs to write its event log to an Azure Blob container.

I want to authenticate using a SAS token. The SAS token generated by the Azure portal works fine. However, the one generated by the C# client does not work. I don't know what the difference between these two SAS tokens is.

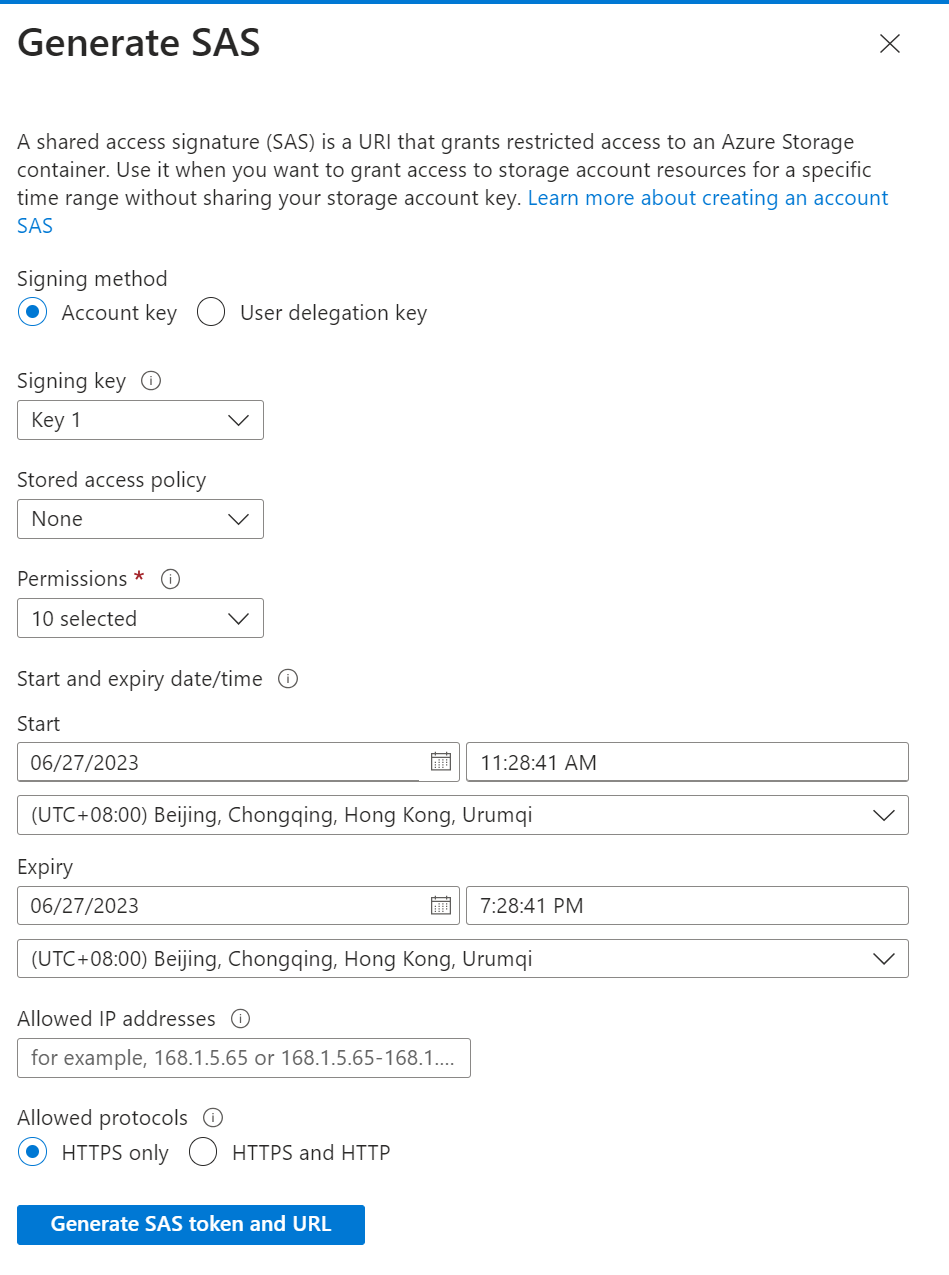

This is how I generate the SAS token in the Azure portal.

This is my Spark configuration:

spark.eventLog.dir: "abfss://sparkevent@lydevstorage0.dfs.core.windows.net/log"

spark.hadoop.fs.azure.account.auth.type.lydevstorage0.dfs.core.windows.net: "SAS"

spark.hadoop.fs.azure.sas.fixed.token.lydevstorage0.dfs.core.windows.net: ""

spark.hadoop.fs.azure.sas.token.provider.type.lydevstorage0.dfs.core.windows.net: "org.apache.hadoop.fs.azurebfs.sas.FixedSASTokenProvider"

This is the C# code:

    BlobSasBuilder blobSasBuilder = new BlobSasBuilder()
    {
        StartsOn = DateTimeOffset.UtcNow.AddDays(-1),
        ExpiresOn = DateTimeOffset.UtcNow.AddDays(1),
        Protocol = SasProtocol.HttpsAndHttp,
        BlobContainerName = "sparkevent",
        Resource = "b" // I also tried "c"
    };
    blobSasBuilder.SetPermissions(BlobContainerSasPermissions.All);
    string sasToken2 = blobSasBuilder.ToSasQueryParameters(new StorageSharedKeyCredential("lydevstorage0", <access key>)).ToString();

The error is

Exception in thread "main" java.nio.file.AccessDeniedException: Operation failed: "Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.", 403, HEAD, https://lydevstorage0.dfs.core.windows.net/sparkevent/?upn=false&action=getAccessControl&timeout=90&sv=2021-02-12&spr=https,http&st=2023-06-26T03:33:27Z&se=2023-06-28T03:33:27Z&sr=c&sp=racwdxlti&sig=XXXXX

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem.checkException(AzureBlobFileSystem.java:1384)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem.getFileStatus(AzureBlobFileSystem.java:611)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem.getFileStatus(AzureBlobFileSystem.java:599)

at org.apache.spark.deploy.history.EventLogFileWriter.requireLogBaseDirAsDirectory(EventLogFileWriters.scala:77)

at org.apache.spark.deploy.history.SingleEventLogFileWriter.start(EventLogFileWriters.scala:221)

at org.apache.spark.scheduler.EventLoggingListener.start(EventLoggingListener.scala:83)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:612)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2704)

at org.apache.spark.sql.SparkSession$Builder.$anonfun$getOrCreate$2(SparkSession.scala:953)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:947)

at org.apache.spark.examples.SparkPi$.main(SparkPi.scala:30)

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: Operation failed: "Server failed to authenticate the request. Make sure the value of Authorization header is formed correctly including the signature.", 403, HEAD, https://lydevstorage0.dfs.core.windows.net/sparkevent/?upn=false&action=getAccessControl&timeout=90&sv=2021-02-12&spr=https,http&st=2023-06-26T03:33:27Z&se=2023-06-28T03:33:27Z&sr=c&sp=racwdxlti&sig=XXXXX

at org.apache.hadoop.fs.azurebfs.services.AbfsRestOperation.completeExecute(AbfsRestOperation.java:231)

at org.apache.hadoop.fs.azurebfs.services.AbfsRestOperation.lambda$execute$0(AbfsRestOperation.java:191)

at org.apache.hadoop.fs.statistics.impl.IOStatisticsBinding.trackDurationOfInvocation(IOStatisticsBinding.java:464)

at org.apache.hadoop.fs.azurebfs.services.AbfsRestOperation.execute(AbfsRestOperation.java:189)

at org.apache.hadoop.fs.azurebfs.services.AbfsClient.getAclStatus(AbfsClient.java:911)

at org.apache.hadoop.fs.azurebfs.services.AbfsClient.getAclStatus(AbfsClient.java:892)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystemStore.getIsNamespaceEnabled(AzureBlobFileSystemStore.java:358)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystemStore.getFileStatus(AzureBlobFileSystemStore.java:932)

at org.apache.hadoop.fs.azurebfs.AzureBlobFileSystem.getFileStatus(AzureBlobFileSystem.java:609)

... 23 more

When I tried the SAS token generated in the Azure portal, it worked fine.

2 Answers

The root cause is that my Spark program cannot get or set AccessControl. I should not have used BlobSasBuilder or AccountSasBuilder, because a blob container itself has no notion of ACLs. Therefore, the SAS tokens they generate do not carry ACL manipulation permissions.

With the help of @Venkatesan, I learned that I can also use DataLakeSasBuilder. Data Lake follows the HDFS standard, so it is aware of ACLs. However, the permission set used by @Venkatesan is DataLakeAccountSasPermissions, which does not include the ManageAccessControl permission. The correct permission set is DataLakeFileSystemSasPermissions. After switching to this permission set, my program works properly.
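To see this in the failing request itself, here is a minimal sketch (Python, standard library only) that parses the URL from the 403 error in the question and lists which Data Lake specific permission letters are absent from its `sp` field. The helper names `sas_fields` and `missing_data_lake_permissions` are made up for illustration, and the letter mapping (`m`, `e`, `o`, `p`) is my reading of the Azure service SAS documentation, so treat it as an assumption to verify.

```python
from urllib.parse import urlsplit, parse_qs

# Request URL copied from the 403 error in the question (signature is redacted).
FAILED_URL = (
    "https://lydevstorage0.dfs.core.windows.net/sparkevent/"
    "?upn=false&action=getAccessControl&timeout=90&sv=2021-02-12"
    "&spr=https,http&st=2023-06-26T03:33:27Z&se=2023-06-28T03:33:27Z"
    "&sr=c&sp=racwdxlti&sig=XXXXX"
)

# Permission letters that only apply to hierarchical-namespace (Data Lake) accounts.
# Assumption: mapping taken from the Azure service SAS documentation.
DATA_LAKE_ONLY = {
    "m": "move",
    "e": "execute",
    "o": "manage ownership",
    "p": "manage access control",
}


def sas_fields(url):
    """Return the query parameters of a SAS URL as a flat dict."""
    query = parse_qs(urlsplit(url).query)
    return {key: values[0] for key, values in query.items()}


def missing_data_lake_permissions(url):
    """List the Data Lake specific permissions not granted by the 'sp' field."""
    granted = set(sas_fields(url).get("sp", ""))
    return [name for letter, name in DATA_LAKE_ONLY.items() if letter not in granted]


if __name__ == "__main__":
    fields = sas_fields(FAILED_URL)
    print("action:", fields["action"])
    print("sp:", fields["sp"])
    print("missing:", missing_data_lake_permissions(FAILED_URL))
```

For the token in the error, the request action is `getAccessControl` while `sp=racwdxlti` contains no `p`, which matches the explanation above.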

If you are using a Data Lake Storage Gen2 account with a hierarchical namespace, you can use the Data Lake package with the code below to create a SAS token in C#.

Code:

Output:

I checked the URL with an image file, and it worked successfully.

Browser:

Reference:

Use .NET to manage data in Azure Data Lake Storage Gen2 – Azure Storage | Microsoft Learn