I am working on a Retrieval-Augmented Generation (RAG) application that uses Azure OpenAI GPT-4o for two types of API calls:

- Rephrasing the question (non-streaming call)

- Generating a response (streaming call, with stream=True)

I configured the azure-openai-emit-token-metric policy in Azure API Management (APIM) to estimate token usage. It works correctly for non-streaming API calls but does not capture token usage metrics for streaming responses.

I have the following in my inbound policy:

    <when condition="@(context.Request.Headers.GetValueOrDefault("Ocp-Apim-Subscription-Key") == "SERVICE_A_KEY")">
        <azure-openai-emit-token-metric namespace="AzureOpenAI">
            <dimension name="service" value="SERVICE_A" />
        </azure-openai-emit-token-metric>
    </when>

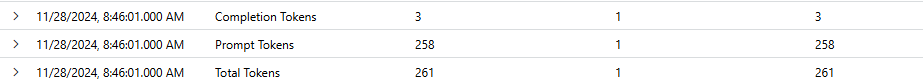

Now here’s the response I’m currently getting:

Currently, only the query rephrasing (non-streaming) call is being logged. I also want to log the tokens consumed by the streaming response, which would add 3 more rows for the response-generation tokens.

I’m separately logging token usage by enabling the stream_options: {"include_usage": true} option in the OpenAI API, but I want to consolidate this logging within APIM using the azure-openai-emit-token-metric policy.
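For context, the client-side workaround mentioned above can be sketched roughly as follows. This is a minimal illustration, not the actual application code: the helper name `usage_from_sse` and the sample stream are hypothetical. It relies only on the documented behaviour that, with `include_usage` enabled, one extra chunk with an empty `choices` list and a populated `usage` field is sent before `data: [DONE]`.

```python
import json
from typing import Iterable, Optional


def usage_from_sse(lines: Iterable[str]) -> Optional[dict]:
    """Return the `usage` object from a streamed chat-completions response.

    Hypothetical helper: scans server-sent-event lines and keeps the last
    non-null `usage` value seen before the `[DONE]` sentinel.
    """
    usage = None
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        if chunk.get("usage"):  # null or absent on ordinary content chunks
            usage = chunk["usage"]
    return usage


# Hand-written sample stream for illustration only (not real service output).
sample_stream = [
    'data: {"choices": [{"delta": {"content": "Hel"}}], "usage": null}',
    "",
    'data: {"choices": [{"delta": {"content": "lo"}}], "usage": null}',
    "",
    'data: {"choices": [], "usage": {"prompt_tokens": 12, "completion_tokens": 2, "total_tokens": 14}}',
    "",
    "data: [DONE]",
]

usage = usage_from_sse(sample_stream)
print(usage)
```

The counts obtained this way are reported by the model endpoint itself, whereas the APIM policy documentation says streaming metrics are estimated, so the two numbers may not match exactly.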

The official docs do say: "Certain Azure OpenAI endpoints support streaming of responses. When stream is set to true in the API request to enable streaming, token metrics are estimated."

Is it possible to make the azure-openai-emit-token-metric policy work for streaming responses for gpt-4o?

2 Answers

According to this documentation, the supported OpenAI models are:

- Chat completion: gpt-3.5 and gpt-4

- Completion: gpt-3.5-turbo-instruct

- Embeddings: text-embedding-3-large, text-embedding-3-small, text-embedding-ada-002

So, use any one of these models.

I tried with gpt-4o and did not get any token metrics. I then tried with gpt-4 and got the tokens in the logs.

Output:

The request I made:

After a successful request, the message output:

Next, go to Trace and then Outbound. You will get the token usage details there, and the same details are sent to the logs.

An update announced at Ignite states that GPT-4o models will support these policies, with the updates rolling out to API Management through the end of 2024.

In short – it’s coming.

GPT-4o Support Announcement