Today is 8 September 2023. I run Airflow in containers; the worker, scheduler, etc. are all up and running.

I have the following DAG:

```python
from airflow import DAG
from datetime import datetime
from airflow.providers.postgres.operators.postgres import PostgresOperator

with DAG('user_processing',
         start_date=datetime(2023, 10, 12),
         schedule_interval='@daily',
         catchup=False) as dag:

    create_table = PostgresOperator(
        task_id='create_table',
        postgres_conn_id='postgres',
        sql='''
            CREATE TABLE IF NOT EXISTS users (
                firstname TEXT NOT NULL,
                lastname TEXT NOT NULL,
                country TEXT NOT NULL,
                username TEXT NOT NULL,
                password TEXT NOT NULL,
                email TEXT NOT NULL
            );
        '''
    )
```

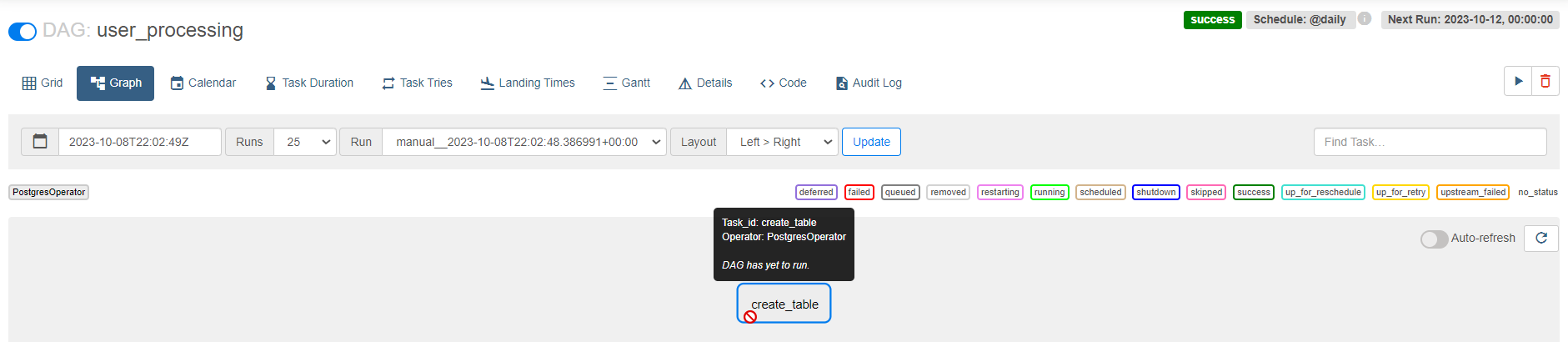

If I set `start_date` in the future and trigger a manual run, the task is marked as successful but never actually runs: it takes 00:00:00 seconds, even though I deliberately put a mistake in the SQL to check whether it would error out.

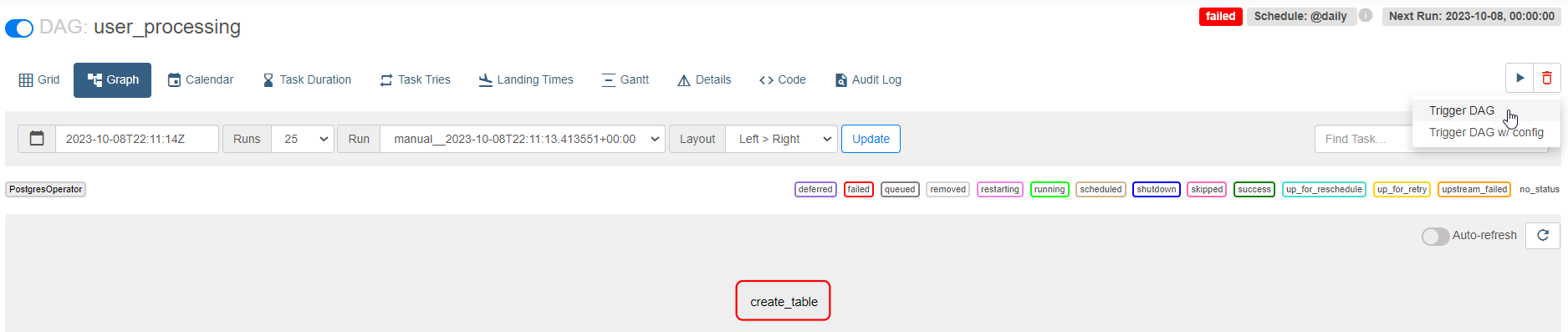

If I set `start_date` in the past or present (e.g. `start_date=datetime(2022, 1, 1)`), the task is marked as failed and the logs are empty. I am sure there is no mistake in the task, since I can run it from the Scheduler-1 container without any problems.

I am very confused about what could be wrong. Please help.

2 Answers

So, in the end the problem came back and I realised that I had permission issues. In the beginning, when I tried to create DAGs in the `/dags` folder, I got a missing-permissions error. When I ran the `ls -la` command, the output looked like this (ignore the obfuscated part; it is not relevant):

[screenshot of `ls -la` output]

So, to be able to create DAG files, I ran this command:

```bash
sudo chown -R betelgeitze ~/data_eng/airflow/dags
```

Now the `ls -la` output looks like this:

[screenshot of `ls -la` output after `chown`]

Depending on when you started docker-compose and when you changed the permissions, the DAGs might not run. In my case it sometimes worked and sometimes did not, and I could not figure out the exact steps after which it stops working. However, if you run into the problem described in this question, all you need to do is change the ownership back and it will work. In my case that is:

```bash
sudo chown -R 50000 ~/data_eng/airflow/dags
```

Replace 50000 with the name (or UID) of your Airflow user and change the path to wherever your DAGs folder is: `sudo chown -R <airflow username> <path>`.

For DAGs that run daily, I normally define the `start_date` argument as follows:

The DAG will run once when you deploy it, and past executions will not be queued. Note that if your DAG does not run daily, you will need to adjust the number of days accordingly.
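A minimal sketch of the "N days ago" idea in plain Python, assuming it means midnight UTC N days before the current date. `days_ago_utc` is a hypothetical helper written for this illustration, not an Airflow API (Airflow 2 had a similar `airflow.utils.dates.days_ago`, which is deprecated in recent 2.x releases).

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def days_ago_utc(n: int, now: Optional[datetime] = None) -> datetime:
    """Return midnight UTC of the day that is `n` days before `now`.

    Hypothetical helper for illustration only. `now` defaults to the
    current UTC time; passing it explicitly makes the result reproducible.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    # Normalise to UTC, truncate to midnight, then step back n whole days.
    midnight = now.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return midnight - timedelta(days=n)


# For a daily DAG, go back one day; a weekly DAG would use 7, and so on.
start_date = days_ago_utc(1)
print(start_date.isoformat())
```

For example, `days_ago_utc(1, now=datetime(2023, 9, 8, 15, 30, tzinfo=timezone.utc))` gives midnight UTC on 7 September 2023. Taking `now` as a parameter keeps the function easy to check without depending on the wall clock.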

In addition, if you want to run the DAG only on demand, I would recommend setting `schedule_interval` to `None` rather than putting a future date in `start_date`, which makes no sense.
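Applied to the DAG from the question, that recommendation might look like the sketch below: a fixed `start_date` in the past, `schedule_interval=None` so the scheduler never creates runs on its own, and `catchup=False`. It reuses the question's IDs (`user_processing`, `postgres`) and is an illustration only; it assumes an Airflow 2 installation with the Postgres provider.

```python
from datetime import datetime

from airflow import DAG
from airflow.providers.postgres.operators.postgres import PostgresOperator

# Manual-only DAG: with no schedule, runs exist only when someone triggers them.
with DAG(
    "user_processing",
    start_date=datetime(2022, 1, 1),  # fixed date in the past
    schedule_interval=None,           # never scheduled automatically
    catchup=False,
) as dag:
    create_table = PostgresOperator(
        task_id="create_table",
        postgres_conn_id="postgres",
        sql="""
            CREATE TABLE IF NOT EXISTS users (
                firstname TEXT NOT NULL,
                lastname TEXT NOT NULL,
                country TEXT NOT NULL,
                username TEXT NOT NULL,
                password TEXT NOT NULL,
                email TEXT NOT NULL
            );
        """,
    )
```

Runs are then created only when you trigger the DAG, for example from the UI or with `airflow dags trigger user_processing`.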