Everything started with the question "Why bcrypt (Node.js) package causes 'Docker Failed – bcrypt_lib.node: Exec format error' while many other packages are not in the same conditions?". Although it seemed to be a problem with this Node.js package, there were many references pointing to a wrong organization of the Docker project structure.

> Please do not copy `node_modules` inside Docker. It decreases your build time and prevents this kind of errors from happening.

https://github.com/kelektiv/node.bcrypt.js/issues/824#issuecomment-677485592

> The way you use docker containers is not recommended. Since you’re using a stock docker container, you might as well just run node on the host.

(By the way, what is a "stock" Docker container?)

OK, I am ready to rethink the organization of my Docker project, but I will not reorganize it only because "everybody is doing so" – I need a deep understanding. The first question is: what exactly is wrong with my organization? AFAIK, what I am doing in the ※ section is called bind mounting, and it is a completely valid method of exposing files to a Docker container.

```yaml
version: "3.5"

services:

  FrontServer:

    image: node:18-alpine
    container_name: Example-Local-FrontServer
    ports: [ "8080:8080" ]

    # [ Theory ] Nodemon will not be found if you invoke just "nodemon". See https://linuxpip.org/nodemon-not-found/
    # [ Theory ] About -L flag: https://github.com/remy/nodemon/issues/1802
    command: sh -c "cd var/www/example.com && node_modules/.bin/nodemon -L 03-LocalDevelopmentBuild/FrontServerEntryPoint.js --environment local"

    depends_on: [ Database ]

    # === ※ ================================================================
    volumes:
      - type: bind
        source: .
        target: /var/www/example.com
    # =====================================================================

  Database:

    image: postgres
    container_name: Example-Local-Database
    ports: [ "${DATABASE_PORT}:${DATABASE_PORT}" ]
    environment:
      - "POSTGRES_PASSWORD=${DATABASE_PASSWORD}"
    volumes:
      - DatabaseData:/var/lib/postgresql/data

volumes:

  DatabaseData:
    name: Example-Local-DatabaseData
    driver: local
```

Please note that this preset applies only to local development mode and is not related to production. The main difference between these modes is incremental building.

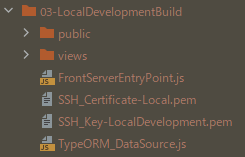

- The `03-LocalDevelopmentBuild` directory is fully generated; nothing in it has been added manually.

- Incremental building must work: the project build utility (based on Gulp + Webpack) must NOT have to be restarted manually each time a source file changes.

[ FAQ ] Why do you need the "FrontServer" service in local development mode? You could use the local Node.js as well.

Because the local Node.js version could differ from the one on the VPS, and moreover the required version could depend on the specific project. AFAIK, Docker was designed exactly to solve this problem.

The only thing I use the local Node.js for is building the project, for various reasons.

2 Answers

With the setup you show, you can also run Node directly on the host, and things will work as expected. You don’t need to figure out the Compose configuration, look up "bind mount" terminology, research the `nodemon -L` option, or ask Stack Overflow why filesystem syncing isn’t working. Many of the questions I see in this space already have an array of host-based tools like an IDE and the Docker Desktop application, and there’s no particular reason to run the Node interpreter in a container while the rest of the stack is on the host.

More broadly, I tend to recommend against bind mounts (or any sort of volume mounts) that inject the application source code into a container. If you’re planning to use Docker as part of an eventual deployment system, then the bind mount hides what’s actually in the image, and you’ll be deploying an untested image. I’ve also seen problems where the Dockerfile does additional setup that gets hidden by a bind mount, or where the wrong directory gets mounted, and things mysteriously stop working. Just removing the bind mount entirely avoids this class of problem.

The `bcrypt` issue you describe can result if you’re using a Linux container on a non-Linux host. In this case, the `node_modules` directory is different depending on whether you’re running a process in a container or on the host, and if you bind-mount the host’s source tree into the container, you can’t keep the wrong-architecture `node_modules` from coming along.

The usual workaround is to use an anonymous volume to tell Docker that the `node_modules` directory is actually user data that needs to be preserved between runs, and take advantage of a copy-on-first-use behavior. This also means that Docker will completely ignore changes in the `package.json` file even if you rebuild the image, and it can mean the application’s behavior is dependent on what this machine’s `node_modules` volume contains. This reintroduces the "works on my machine" problem that Docker generally tries to avoid.

You should in fact use a `.dockerignore` file to avoid copying the host’s `node_modules` directory into the image; but on the flip side, your Dockerfile should generally `RUN npm ci` to create its own correct-environment `node_modules` directory based on your `package-lock.json` file.

You have a Docker Compose file that sets up two services: one for a front server based on Node.js and another for a database based on Postgres.

The `FrontServer` service uses a bind mount to map the current directory on the host to `/var/www/example.com` in the container. That allows the service to access the files in that directory, and serves as a way to develop locally while having the code run within a Docker container.

A possible issue is the bind mount of the entire project directory, including the `node_modules` folder.

When Node.js dependencies with native add-ons (such as `bcrypt`) are installed, their binaries are often compiled for the specific system architecture they are being installed on. If the host machine and the Docker container have different architectures or different versions of system libraries, a binary built on the host can fail to load in the container, hence the problem you have with `bcrypt`.

Instead of bind-mounting the `node_modules` directory, or, as in David Maze’s answer, moving the Node.js environment entirely to the host system, you could have Docker install the dependencies during the image `build` stage. That would make sure binaries for the correct architecture are used. Update your Docker Compose file and create a Dockerfile for your `FrontServer` service.
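A minimal sketch of that idea, written as a replacement for the `FrontServer` entry of the Compose file in the question (the `Database` service and the top-level `volumes` stay as they are). It assumes a `package-lock.json` sits next to `package.json`. The Dockerfile is embedded with Compose's `build.dockerfile_inline` key only to keep the sketch in one file; a separate `Dockerfile` referenced from `build` works the same way, and `dockerfile_inline` needs a recent Docker Compose v2. The source is still bind-mounted so the host-side Gulp + Webpack incremental build keeps working, while an anonymous volume keeps the host's `node_modules` out of the container, which is the anonymous-volume workaround with the caveats about stale dependencies mentioned earlier.

```yaml
services:

  FrontServer:

    # Build a local image instead of running node:18-alpine directly,
    # so that node_modules is installed inside the Alpine (musl) container.
    build:
      context: .
      # Inline Dockerfile only to keep this sketch in one file.
      dockerfile_inline: |
        FROM node:18-alpine
        WORKDIR /var/www/example.com
        # If a native add-on has no prebuilt binary for Alpine, node-gyp needs
        # build tools first, e.g.: RUN apk add --no-cache python3 make g++
        COPY package.json package-lock.json ./
        # Clean install from the lockfile, for the container's OS/architecture.
        RUN npm ci
    container_name: Example-Local-FrontServer
    ports: [ "8080:8080" ]
    # Relative paths resolve against the image's WORKDIR (/var/www/example.com).
    command: [ "node_modules/.bin/nodemon", "-L", "03-LocalDevelopmentBuild/FrontServerEntryPoint.js", "--environment", "local" ]
    depends_on: [ Database ]
    volumes:
      # Source code (including the generated 03-LocalDevelopmentBuild) still comes from the host.
      - type: bind
        source: .
        target: /var/www/example.com
      # Anonymous volume over node_modules: hides the host's copy; on first use
      # Docker fills it from the image's node_modules created by `npm ci`.
      - /var/www/example.com/node_modules
```

Because Compose carries anonymous volumes over when it recreates a container, a later change to `package.json` needs both a rebuild and a fresh volume, for example `docker compose up --build --renew-anon-volumes`; otherwise the old `node_modules` keeps being used.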

That would guarantee architecture consistency while providing flexibility in how you manage your Node.js environment and dependencies.

The dependencies would be installed during the image build, inside the specified Node.js base image (`node:18-alpine`). Meaning: the `node_modules` directory is created in an environment that matches the one where the application will be running, which eliminates potential architecture mismatch issues. This also allows for a clean separation between the host and container environments, which is useful if there are multiple developers working on the project with varying local setups.

By defining the project in a `Dockerfile` and `docker-compose.yaml`, you are codifying the project environment, making it easy to share, replicate, and version control. However, this may add a level of indirection compared to running Node.js directly on the host, which could make certain tasks more cumbersome.

A multi-stage build could enhance the setup by optimizing the image build process, especially if there are intermediate build steps or tools required that are not needed in the final image.

For example, if your project requires a build step with tools like a bundler or a compiler, a multi-stage build can help keep those tools out of the final image, reducing the image size and minimizing the attack surface.

First, a build stage is defined, where dependencies are installed and the application is built.

Then, a second stage is defined for running the application, where the built assets from the first stage are copied over. That results in a smaller final image as only the necessary files are included.
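A sketch of those two stages, again written as a Compose service with an inline Dockerfile. The `build` npm script, the `dist/` output directory, and the `dist/FrontServerEntryPoint.js` entry point are hypothetical placeholders for whatever the Gulp + Webpack production build actually produces. A `.dockerignore` listing `node_modules` is assumed, so that `COPY . .` does not bring the host's `node_modules` into the build stage.

```yaml
services:

  FrontServer:
    build:
      context: .
      # Produce the final "runtime" stage (it pulls in "build" via COPY --from).
      target: runtime
      dockerfile_inline: |
        # --- Stage 1: install all dependencies and build the application ---
        FROM node:18-alpine AS build
        WORKDIR /app
        COPY package.json package-lock.json ./
        RUN npm ci
        COPY . .
        # Hypothetical build script that writes the bundle to dist/
        RUN npm run build

        # --- Stage 2: runtime image with production dependencies only ---
        FROM node:18-alpine AS runtime
        WORKDIR /app
        ENV NODE_ENV=production
        COPY package.json package-lock.json ./
        # Native add-ons such as bcrypt are installed for this image's environment.
        RUN npm ci --omit=dev
        # Copy only the built output; the bundler and devDependencies stay behind.
        COPY --from=build /app/dist ./dist
        CMD ["node", "dist/FrontServerEntryPoint.js"]
    ports: [ "8080:8080" ]
```

Reinstalling with `npm ci --omit=dev` in the runtime stage, rather than copying `node_modules` from the build stage, keeps devDependencies out of the final image at the cost of a second install.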