Packages installed by Poetry significantly increase the image size when the image is built for amd64.

I'm building a Docker image on my host machine (macOS, M2 Pro), which I want to deploy to an EC2 instance. A normal build produces an image of about 2GB, which is fine. But it causes a platform compatibility issue when deployed on EC2: WARNING: The requested image's platform (linux/arm64/v8) does not match the detected host platform (linux/amd64/v3) and no specific platform was requested. So I tried building with the buildx command instead. However, that produces a whopping 13GB image, even though all I changed was the build command. I'd like to know why, and how to reduce the size.

Here is the Dockerfile:

FROM python:3.11-slim

# for -slim version (it breaks if you don't comment out && apt-get clean)
RUN apt-get update && apt-get install -y \
    gfortran \
    libopenblas-dev \
    liblapack-dev \
    && apt-get clean \
    && rm -rf /var/lib/apt/lists/*

# Set environment variables to make Python and Poetry play nice
ENV POETRY_VERSION=1.7.1 \
    PYTHONUNBUFFERED=1 \
    PYTHONDONTWRITEBYTECODE=1 \
    EXPERIMENT_ID=$EXPERIMENT_ID \
    RUN_ID=$RUN_ID

## Install poetry
RUN pip install "poetry==$POETRY_VERSION"

## copy project requirement files here to ensure they will be cached.
WORKDIR /app
COPY pyproject.toml ./
RUN poetry config virtualenvs.create false \
    && poetry install --no-interaction --no-dev --no-ansi --verbose \
    && poetry cache clear pypi --all

With this pyproject.toml, you can reproduce the build.

[tool.poetry]
name = "malicious-url"
version = "0.1.0"
description = ""
authors = ["Makoto1021 <[email protected]>"]
readme = "README.md"

[tool.poetry.dependencies]
python = "^3.11"
numpy = "^1.26.4"
tld = "^0.13"
fuzzywuzzy = "^0.18.0"
scikit-learn = "^1.4.1.post1"
pandas = "^2.2.1"
mlflow = {extras = ["pipelines"], version = "^2.11.3"}
xgboost = "^2.0.3"
python-dotenv = "^1.0.1"
imblearn = "^0.0"
torch = "^2.2.2"
flask = "^3.0.3"
googlesearch-python = "^1.2.3"
whois = "^1.20240129.2"
nltk = "^3.8.1"

[tool.poetry.group.dev.dependencies]
ipykernel = "^6.29.3"
tldextract = "^5.1.2"

[build-system]
requires = ["poetry-core"]
build-backend = "poetry.core.masonry.api"

This command builds a 2GB image:

docker build -f ./docker/Dockerfile \
    -t malicious-url-prediction-img:v1 .

And this one builds a 13GB image:

docker buildx build --platform linux/amd64 -f ./docker/Dockerfile \
    -t malicious-url-prediction-img:v1-amd64 .

The image stays small if I remove the RUN poetry config virtualenvs.create... instruction, even when I build for amd64. So I assume Poetry is causing this problem. Still, it's weird to get such a big difference in size just by changing the target platform.

I opened a shell in the huge image with /bin/bash and ran du, and found that the total used space was only around 2MB. That suggests the large image size isn't directly caused by the files I'm adding to the image. It seems to come from the base image and the layers created by package installations and configuration.
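One thing worth checking is where du was run. With virtualenvs.create false, Poetry installs into the interpreter's own site-packages. On the official python images that directory is /usr/local/lib/python3.11/site-packages, not /app. The sketch below is a rough Python equivalent of du -s <dir>/* | sort -n and could be pointed at that directory to list the biggest packages. The function name dir_sizes is hypothetical, and it sums apparent file sizes rather than disk blocks.

```python
import os
import sys


def dir_sizes(root: str, top_n: int = 10) -> list[tuple[str, int]]:
    """Return up to top_n immediate children of root, largest first, with the
    total apparent size (bytes) of the regular files beneath each one.
    Symlinks are skipped, so linked trees are not counted twice."""
    sizes: dict[str, int] = {}
    with os.scandir(root) as entries:
        for entry in entries:
            total = 0
            if entry.is_dir(follow_symlinks=False):
                # os.walk does not descend into symlinked directories by default
                for dirpath, _dirnames, filenames in os.walk(entry.path):
                    for name in filenames:
                        path = os.path.join(dirpath, name)
                        try:
                            if not os.path.islink(path):
                                total += os.path.getsize(path)
                        except OSError:
                            pass  # unreadable or vanished file: ignore it
            elif entry.is_file(follow_symlinks=False):
                total = entry.stat(follow_symlinks=False).st_size
            sizes[entry.name] = total
    return sorted(sizes.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


if __name__ == "__main__":
    # Default to the interpreter's install prefix (/usr/local on the official images)
    target = sys.argv[1] if len(sys.argv) > 1 else sys.prefix
    for name, size in dir_sizes(target):
        print(f"{size / 1024**2:10.1f} MiB  {name}")
```

Inside the container, run the saved script with /usr/local/lib/python3.11/site-packages as its argument. That should show whether a handful of packages account for most of the 13GB.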

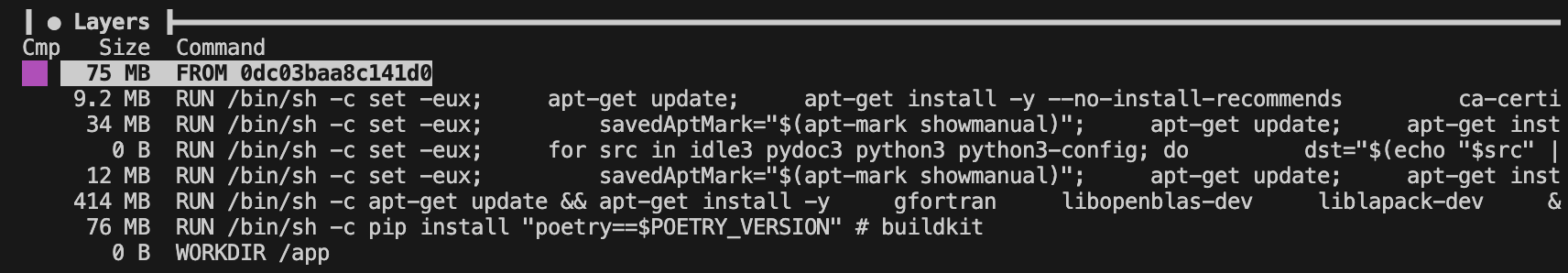

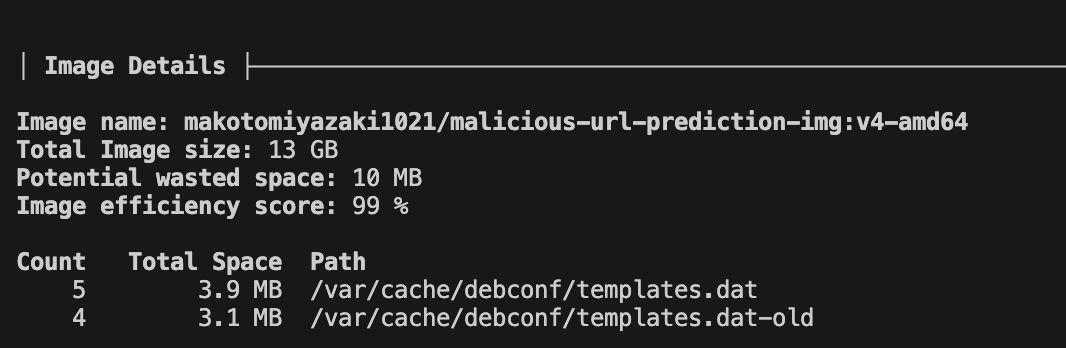

I also used dive to investigate the space usage, but it doesn't really show what's wrong.

I have a feeling it's coming from Poetry. Any advice?

FYI, this is how I run the container.

docker run --rm -p 7070:5000 -v $(pwd)/logs:/app/logs malicious-url-prediction-img:v1-amd64

EDITED:

- changed Dockerfile to minimal example

- added pyproject.toml to reproduce the build

- added my investigation on poetry


3 Answers

The problem is builds.

When you target AMD64, chances are there are already precompiled wheels you can use, so there's no need to build anything. When you target ARM64, there are often far fewer wheels available, so the packages have to be built from source.
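To make the wheel-availability point concrete, here is a simplified Python sketch. It reads the platform tags from wheel filenames, which follow the {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl convention, and reports whether any prebuilt wheel covers a given glibc Linux architecture. It deliberately ignores Python/ABI tags and glibc versions, which pip checks properly (for example through packaging.tags). The function names and the examplepkg filenames are hypothetical.

```python
def wheel_platform_tags(filename: str) -> set[str]:
    """Return the platform tag(s) of a wheel filename.

    Wheel names follow {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl,
    and a tag field may hold several tags separated by dots."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):
        raise ValueError(f"unexpected wheel filename: {filename}")
    return set(parts[-1].split("."))


def has_linux_wheel_for(filenames: list[str], arch: str) -> bool:
    """True if some wheel is pure Python or targets glibc Linux on arch
    ('x86_64' for amd64, 'aarch64' for arm64). Python/ABI tags and glibc
    versions are ignored in this simplified check."""
    for fn in filenames:
        if not fn.endswith(".whl"):
            continue  # an sdist (.tar.gz) means compiling from source
        for tag in wheel_platform_tags(fn):
            if tag == "any":
                return True
            if tag.startswith(("manylinux", "linux")) and tag.endswith("_" + arch):
                return True
    return False


# Hypothetical release files for a made-up package "examplepkg"
FILES = [
    "examplepkg-1.0.tar.gz",
    "examplepkg-1.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl",
]

if __name__ == "__main__":
    print(has_linux_wheel_for(FILES, "x86_64"))   # True: a prebuilt wheel exists
    print(has_linux_wheel_for(FILES, "aarch64"))  # False: the sdist would be built
```

When the check is False for the target architecture, the installer falls back to the sdist. That fallback is where compilers such as gfortran and the extra build artifacts come in.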

Builds take up space: they download libraries, compile artifacts, and link things together.

What you should do instead is use a multi-stage build. Run the installation in one stage of the build, then copy the resulting files into a fresh final stage, so the build tools and intermediate artifacts don't end up in the image you ship.

For example (this isn’t tested, but is based on your file and should work with some tweaks):

This method is used for all of the Multi-Py projects (disclaimer: I’m the author of these), so you can look there for examples.

Some ISAs may produce smaller or larger builds, but an increase of this size isn't normal. Here I propose a small refactoring of your Dockerfile.

I always use this trick, but I didn't test it here.

You need gfortran and some other packages to build your Python packages. After the build they aren't necessary, and you can uninstall them. But the code above does not reduce your Docker image size, since the build dependencies still exist in the earlier layers. You must change the code above to:

This will decrease your image size considerably.

apt, pip and … (apt by default deletes downloaded .deb files, however) download files into cache directories. You can add --mount=type=cache to your RUN instruction to keep them in separate cache volumes rather than in your image. So here is your refactored Dockerfile (I add liblapack and libopenblas so that they stay after apt autoremove):

The difference between the arm64 and amd64 image sizes comes down to a conditional, architecture-dependent dependency.

torch on amd64 pulls in a series of nvidia* dependencies to support GPU development, but does not pull those in on other architectures: https://github.com/pytorch/pytorch/blob/7a6edb0b6644eb2b28650ea3be1c806e4a57e351/.github/workflows/generated-linux-binary-manywheel-main.yml#L51

You can see this reflected in the wheel metadata from PyPI:

And in the aarch64 wheel as well:

These dependencies are not pulled in on arm64 (aarch64):

(There may be more as well. I only traced torch, whose dependency problem I'm familiar with, because I couldn't build your Docker image: I ran out of disk space!)
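The mechanism described in this answer is PEP 508 environment markers on Requires-Dist entries. An installer keeps only the requirements whose marker is true for the target machine. The Python sketch below evaluates a deliberately tiny subset of that grammar: name == "value" clauses joined by and. Real installers implement the full specification, for example via packaging.markers.Marker. The REQUIRES_DIST entries are illustrative, a shortened subset with versions omitted, and are not copied verbatim from PyPI.

```python
import platform


def marker_applies(marker: str, env: dict[str, str]) -> bool:
    """Evaluate a deliberately tiny subset of PEP 508 markers:
    clauses of the form name == "value" joined only by 'and'.
    Anything else raises ValueError instead of guessing."""
    if not marker.strip():
        return True  # no marker: the requirement always applies
    for clause in marker.split(" and "):
        name, op, value = clause.strip().partition("==")
        name = name.strip()
        if not op or name not in env:
            raise ValueError(f"unsupported marker clause: {clause.strip()!r}")
        if env[name] != value.strip().strip("\"'"):
            return False
    return True


def requirements_for(requires_dist: list[str], env: dict[str, str]) -> list[str]:
    """Return the requirements whose marker (the part after ';') holds in env."""
    selected = []
    for line in requires_dist:
        req, _sep, marker = line.partition(";")
        if marker_applies(marker, env):
            selected.append(req.strip())
    return selected


# Illustrative Requires-Dist entries shaped like torch's Linux x86_64 metadata
# (a shortened subset, versions omitted; not copied verbatim from PyPI)
REQUIRES_DIST = [
    "filelock",
    'nvidia-cudnn-cu12; platform_system == "Linux" and platform_machine == "x86_64"',
    'nvidia-cublas-cu12; platform_system == "Linux" and platform_machine == "x86_64"',
]

# platform.machine() reports x86_64 under linux/amd64 and aarch64 under linux/arm64
AMD64_LINUX = {"platform_system": "Linux", "platform_machine": "x86_64"}
ARM64_LINUX = {"platform_system": "Linux", "platform_machine": "aarch64"}

if __name__ == "__main__":
    print(requirements_for(REQUIRES_DIST, AMD64_LINUX))  # filelock plus both nvidia-* packages
    print(requirements_for(REQUIRES_DIST, ARM64_LINUX))  # only filelock
    here = {"platform_system": platform.system(), "platform_machine": platform.machine()}
    print(requirements_for(REQUIRES_DIST, here))
```

The same pyproject.toml therefore resolves to a much larger set of packages when the build runs under linux/amd64. Poetry's configuration is the same in both builds; only the markers evaluate differently.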