Below are the Varnish container and its log, and the application container and its log.

Before I moved Varnish into its own container, it was working fine inside the application container with the same setup shown below. Since I separated it into its own container, Varnish throws 503/500 errors.

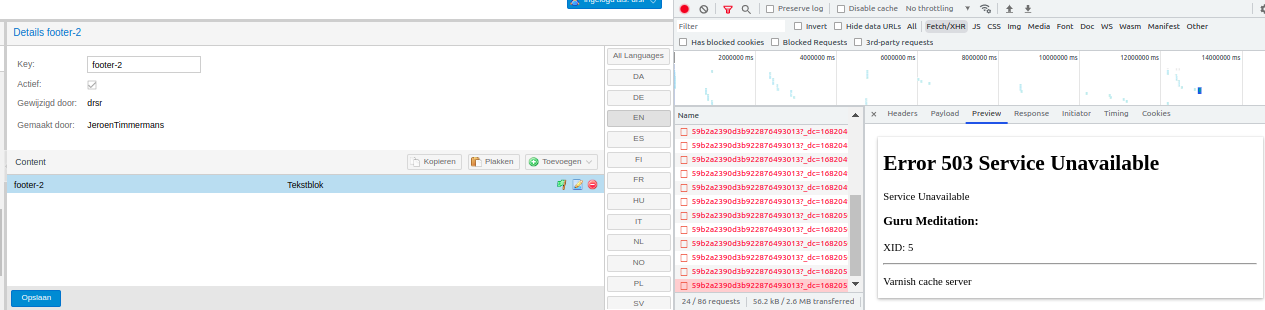

I see the log below when I try to update content in the application.

Varnish container IP: 172.100.0.10, exposed port 8443

Application container IP: 172.100.0.2

Varnish Container Log

/etc/varnish # varnishlog

* << Request >> 5
- Begin req 4 rxreq
- Timestamp Start: 1682051211.655401 0.000000 0.000000
- Timestamp Req: 1682051211.655475 0.000073 0.000073
- VCL_use boot
- ReqStart 172.100.0.2 44296 http
- ReqMethod PUT
- ReqURL /application/shared-content/59b2a2390d3b922876493013?_dc=1682051211645
- ReqProtocol HTTP/1.1
- ReqHeader Host: cms-application.dev.abc.eu
- ReqHeader sec-ch-ua: "Chromium";v="112", "Google Chrome";v="112", "Not:A-Brand";v="99"
- ReqHeader Content-Type: application/json
- ReqHeader X-Requested-With: XMLHttpRequest
- ReqHeader sec-ch-ua-mobile: ?0
- ReqHeader User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36
- ReqHeader sec-ch-ua-platform: "Linux"
- ReqHeader Accept: */*
- ReqHeader Origin: https://cms-application.dev.abc.eu
- ReqHeader Sec-Fetch-Site: same-origin
- ReqHeader Sec-Fetch-Mode: cors
- ReqHeader Sec-Fetch-Dest: empty
- ReqHeader Referer: https://cms-application.dev.abc.eu/application/
- ReqHeader Accept-Encoding: gzip, deflate, br
- ReqHeader Accept-Language: en-GB,en;q=0.9
- ReqHeader Cookie: Laminas_Auth=ctmm16mcsu765iuljdsvlf90t3
- ReqHeader X-Forwarded-Port: 443
- ReqHeader X-Forwarded-Proto: https
- ReqHeader X-Forwarded-For: 172.100.0.1
- ReqHeader X-Forwarded-Host: cms-application.dev.abc.eu
- ReqHeader X-Forwarded-Server: proxy.dev.abc.eu
- ReqHeader Connection: Keep-Alive
- ReqHeader Content-Length: 1282
- ReqUnset X-Forwarded-For: 172.100.0.1
- ReqHeader X-Forwarded-For: 172.100.0.1, 172.100.0.2
- ReqHeader Via: 1.1 varnish-container (Varnish/7.2)
- VCL_call RECV
- VCL_return pass
- VCL_call HASH
- VCL_return lookup
- VCL_call PASS
- VCL_return fetch
- Link bereq 6 pass
- Storage malloc Transient
- Timestamp ReqBody: 1682051211.656364 0.000962 0.000889
- Timestamp Fetch: 1682051217.350135 5.694733 5.693770
- RespProtocol HTTP/1.1
- RespStatus 503
- RespReason Service Unavailable
- RespHeader Date: Fri, 21 Apr 2023 04:26:57 GMT
- RespHeader Server: Varnish
- RespHeader X-Varnish: 5
- VCL_call SYNTH
- RespHeader Content-Type: text/html; charset=utf-8
- RespHeader Retry-After: 5
- VCL_return deliver
- Timestamp Process: 1682051217.350186 5.694785 0.000051
- RespHeader Content-Length: 275
- Storage malloc Transient
- Filters
- RespHeader Connection: keep-alive
- Timestamp Resp: 1682051217.350264 5.694862 0.000077
- ReqAcct 958 1282 2240 205 275 480
- End

* << Session >> 4
- Begin sess 0 HTTP/1
- SessOpen 172.100.0.2 44296 http 172.100.0.10 80 1682051211.655293 24
- Link req 5 rxreq
- SessClose RX_CLOSE_IDLE 10.697
- End

Application Container Log

[root@application-container www]# tail -f /var/log/httpd/ssl_request_log
[21/Apr/2023:06:26:51 +0200] 172.100.0.1 TLSv1.2 ECDHE-RSA-AES128-GCM-SHA256 "PUT /application/shared-content/59b2a2390d3b922876493013?_dc=1682051211645 HTTP/1.1" 275

default.vcl

# Marker to tell the VCL compiler that this VCL has been adapted to the
# new 4.0 format.
vcl 4.0;

import std;

# Default backend definition. Set this to point to your content server.
backend default {
    .host = "application-container";
    .port = "8080";
}

backend blogproxy {
    .host = "ip";
    .port = "80";
}

acl abc {
    "application-container";
}

acl abc_loadbalancer {
    "application-container";
}

sub vcl_recv {
    # Use true client ip from Akamai CDN
    if (req.http.True-Client-IP) {
        set req.http.X-Forwarded-For = req.http.True-Client-IP;
    }

    if (req.restarts == 0) {
        if (req.http.X-Forwarded-For && client.ip !~ abc_loadbalancer) {
            set req.http.X-Forwarded-For = req.http.X-Forwarded-For + ", " + client.ip;
        } elseif (client.ip !~ abc_loadbalancer) {
            set req.http.X-Forwarded-For = client.ip;
        }
    }

    if (req.method == "BAN") {
        if (client.ip !~ abc) {
            return(synth(405, "Not allowed"));
        }
        ban("obj.http.x-url == " + req.url);
        return(synth(200, "Ban added"));
    }

    if (req.http.host ~ "^nl-s_app" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.s_app.nl";
        set req.http.X-Forwarded-Host = "www.s_app.nl";
        return(pass);
    }

    if (req.http.host ~ "^www.s_app.de" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.s_app.nl";
        set req.http.X-Forwarded-Host = "www.s_app.de";
        return(pass);
    }

    if (req.http.host ~ "^www.s_app.fr" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.s_app.nl";
        set req.http.X-Forwarded-Host = "www.s_app.fr";
        return(pass);
    }

    if (req.http.host ~ "^www.s_app.co.uk" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.s_app.nl";
        set req.http.X-Forwarded-Host = "www.s_app.co.uk";
        return(pass);
    }

    if (req.http.host ~ "^nl-application" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.application.nl";
        set req.http.X-Forwarded-Host = "www.application.nl";
        return(pass);
    }

    if (req.http.host ~ "^de-application" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.application.nl";
        set req.http.X-Forwarded-Host = "www.application.de";
        return(pass);
    }

    if (req.http.host ~ "^fr-application" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.application.nl";
        set req.http.X-Forwarded-Host = "www.application.fr";
        return(pass);
    }

    if (req.http.host ~ "^en-application" && req.url ~ "^/blog/") {
        set req.backend_hint = blogproxy;
        set req.http.host = "blogproxy.application.nl";
        set req.http.X-Forwarded-Host = "www.application.co.uk";
        return(pass);
    }

    if (req.http.url ~ "^/xml.*") {
        return (pass);
    }

    if (req.http.host ~ "^api") {
        return (pipe);
    }

    # Only bypass cache for development
    if (req.http.Cache-Control ~ "(private|no-cache|no-store)" || req.http.Pragma == "no-cache") {
        return (pipe);
    }

    if (
        req.method != "GET" &&
        req.method != "HEAD" &&
        req.method != "PUT" &&
        req.method != "POST" &&
        req.method != "TRACE" &&
        req.method != "OPTIONS" &&
        req.method != "DELETE") {
        # Non-RFC2616 or CONNECT which is weird.
        return (pass);
    }

    # Never cache HEAD, POST or PUT requests
    if (
        req.method == "HEAD" ||
        req.method == "POST" ||
        req.method == "PUT") {
        return (pass);
    }

    # Set a header announcing Surrogate Capability to the origin
    if (req.http.Surrogate-Control ~ "content\x3D\x22ESI/1.0\x22\x3BAkamai") {
        set req.http.Surrogate-Capability = "akamai=ESI/1.0";
    } else {
        set req.http.Surrogate-Capability = "varnish=ESI/1.0";
    }

    # Skip Varnish for the CMS
    if (req.http.host ~ "^cms-application") {
        return (pass);
    }

    # Sync jobs should also not use Varnish
    if (req.url ~ "sync") {
        return (pass);
    }

    if (req.url ~ "logout" || req.url ~ "callback" || req.url ~ "account" || req.url ~ "/affiliate/") {
        return (pass);
    }

    # Normalize the accept encoding header
    call normalize_accept_encoding;

    # Strip cookies from static assets
    if (req.url ~ "(?i)\.(css|js|txt|xml|bmp|png|gif|jpeg|jpg|svg|ico|mp3|mp4|swf|flv|ttf|woff|pdf)(\?.*)?$") {
        unset req.http.Cookie;
    }

    # Serving ESI
    if (req.esi_level > 0) {
        set req.http.X-Esi = 1;
        set req.http.X-Esi-Parent = req_top.url;
    } else {
        unset req.http.X-Esi;
    }

    # Strip not allowed cookies
    call strip_not_allowed_cookies;

    # Strip out all Google query parameters
    call strip_google_query_params;

    # Strip hash, server doesn't need it.
    if (req.url ~ "#") {
        set req.url = regsub(req.url, "#.*$", "");
    }

    # if (req.http.X-Forwarded-For ~ "80.95.169.59") {
    # if (client.ip ~ abc) {
    # Enable the feature toggle button via cookie
    # set req.http.Cookie = "abcToggle-abc-feature-toggles=true;" + req.http.Cookie;
    # }

    return (hash);
}

sub vcl_backend_response {
    set beresp.http.x-url = bereq.url;
    set beresp.http.x-host = bereq.http.host;

    # Abandon 5xx responses: unless this is a background fetch, Varnish then
    # answers the client with a synthetic 503 from vcl_synth
    if (beresp.status == 500 || beresp.status == 502 || beresp.status == 503 || beresp.status == 504) {
        return (abandon);
    }

    # Stop Caching 302 or 301 or 303 redirects
    if (beresp.status == 302 || beresp.status == 301 || beresp.status == 303) {
        set beresp.ttl = 0s;
        set beresp.uncacheable = true;
        return (deliver);
    }

    # Don't cache 404 responses
    if (beresp.status == 404) {
        set beresp.ttl = 0s;
        set beresp.uncacheable = true;
        return (deliver);
    }

    # Set cache-control headers for 410
    if (beresp.status == 410) {
        set beresp.ttl = 2d;
    }

    if (bereq.http.Surrogate-Control ~ "content\x3D\x22ESI/1.0\x22\x3BAkamai") {
        set beresp.do_esi = false;
        set beresp.http.X-Esi-Processor = bereq.http.Surrogate-Control;
    } elseif (beresp.http.Surrogate-Control ~ "ESI/1.0") {
        unset beresp.http.Surrogate-Control;
        set beresp.do_esi = true;
        set beresp.http.X-Esi-Processor = bereq.http.Surrogate-Control;
    }

    unset beresp.http.Vary;

    # Strip cookies from static assets
    if (bereq.url ~ "\.(css|js|txt|xml|bmp|png|gif|jpeg|jpg|svg|ico|mp3|mp4|swf|flv|ttf|woff|pdf)$") {
        unset beresp.http.Set-cookie;
    }

    # Mark as "Hit-For-Pass" for the next 5 minutes
    # Zend-Auth (EC session handling) cookie isset, causing no page to be cached anymore.
    # if (beresp.ttl <= 0s || beresp.http.Set-Cookie || beresp.http.Vary == "*") {
    if (beresp.ttl <= 0s || beresp.http.Vary == "*") {
        set beresp.uncacheable = true;
        set beresp.ttl = 300s;
    }

    # Grace: when several clients are requesting the same page Varnish will send one request to the webserver and
    # serve old cache to the others for x secs
    set beresp.grace = 30s;

    return (deliver);
}

sub vcl_deliver {
    # Add a header indicating the response came from Varnish, only for ABC IP addresses
    # if (std.ip(regsub(req.http.X-Forwarded-For, "[, ].*$", ""), "0.0.0.0") ~ abc) {
    set resp.http.X-Varnish-Client-IP = client.ip;
    set resp.http.X-Varnish-Server-IP = server.ip;
    set resp.http.X-Varnish-Local-IP = local.ip;
    set resp.http.X-Varnish-Remote-IP = remote.ip;
    set resp.http.X-Varnish-Forwarded-For = req.http.X-Forwarded-For;
    set resp.http.X-Varnish-Webserver = "abc-cache-dev";
    set resp.http.X-Varnish-Cache-Control = resp.http.Cache-Control;
    set resp.http.X-Edge-Control = resp.http.Edge-Control;

    if (resp.http.x-varnish ~ " ") {
        set resp.http.X-Varnish-Cache = "HIT";
        set resp.http.X-Varnish-Cached-Hits = obj.hits;
    } else {
        set resp.http.X-Varnish-Cached = "MISS";
    }

    # Add a header indicating the feature toggle status
    set resp.http.X-Varnish-FeatureToggle = req.http.FeatureToggle;
    #} else {
    # unset resp.http.x-varnish-tags;
    #}

    # strip host and url headers, no need to send it to the client
    unset resp.http.x-url;
    unset resp.http.x-host;

    return (deliver);
}

# Keep only the abcToggle-* cookies and remove all other cookies
sub strip_not_allowed_cookies {
    if (req.http.Cookie) {
        set req.http.Cookie = ";" + req.http.Cookie;
        set req.http.Cookie = regsuball(req.http.Cookie, "; +", ";");
        set req.http.Cookie = regsuball(req.http.Cookie, ";(abcToggle-[^;]*)=", "; \1=");
        # set req.http.Cookie = regsuball(req.http.Cookie, ";(redirect_url)=", "; \1=");
        set req.http.Cookie = regsuball(req.http.Cookie, ";[^ ][^;]*", "");
        set req.http.Cookie = regsuball(req.http.Cookie, "^[; ]+|[; ]+$", "");

        if (req.http.Cookie == "") {
            unset req.http.Cookie;
        }
    }
}

# Remove the Google Analytics added parameters, useless for our backend
sub strip_google_query_params {
    if (req.url ~ "(\?|&)(utm_source|utm_medium|utm_campaign|gclid|cx|ie|cof|siteurl|_ga|PHPSESSID)=" && req.url !~ "(tradedoubler|index.html|deeplink|affiliate)" && req.url !~ "^/[0-9]{6}") {
        set req.url = regsuball(req.url, "&(utm_source|utm_medium|utm_campaign|gclid|cx|ie|cof|siteurl|_ga|PHPSESSID)=([A-z0-9_\-\.%25]+)", "");
        set req.url = regsuball(req.url, "\?(utm_source|utm_medium|utm_campaign|gclid|cx|ie|cof|siteurl|_ga|PHPSESSID)=([A-z0-9_\-\.%25]+)", "?");
        set req.url = regsub(req.url, "\?&", "?");
        set req.url = regsub(req.url, "\?$", "");
    }
}

# Normalize the accept-encoding header to minimize the number of cache variations
sub normalize_accept_encoding {
    if (req.http.Accept-Encoding) {
        if (req.url ~ "\.(jpg|png|gif|gz|tgz|bz2|tbz|mp3|ogg)$") {
            # Skip, files are already compressed
            unset req.http.Accept-Encoding;
        } elseif (req.http.Accept-Encoding ~ "gzip") {
            set req.http.Accept-Encoding = "gzip";
        } elsif (req.http.Accept-Encoding ~ "deflate") {
            set req.http.Accept-Encoding = "deflate";
        } else {
            # unknown algorithm
            unset req.http.Accept-Encoding;
        }
    }
}

sub vcl_hash {
    # Add the feature toggles to the hash
    if (req.http.Cookie ~ "abcToggle-") {
        set req.http.FeatureToggle = regsuball(req.http.cookie, "(abcToggle-[^;]*)", "1");
        hash_data(req.http.FeatureToggle);
    }

    if (req.http.X-Akamai-Staging ~ "ESSL") {
        hash_data(req.http.x-akamai-staging);
    }

    # When the ESI handling is not done by Akamai, create a new cache set, because varnish will process the ESI tags.
    if (req.http.Surrogate-Control !~ "content\x3D\x22ESI/1.0\x22\x3BAkamai") {
        hash_data("varnish-handles-ESI");
    }

    if (req.http.Cookie ~ "redirect_url") {
        hash_data("redirect_url");
    }

    hash_data(req.http.X-Forwarded-Proto);
}
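To make the request-normalization helpers in this VCL easier to reason about, here is a hypothetical Python model of strip_not_allowed_cookies and strip_google_query_params. It assumes the regular expressions contain the backslashes that appear to have been lost in the paste (for example `\?`, `\.` and `\1`), and it treats VCL regsuball() as re.sub() and regsub() as a single replacement. It is only an approximation of Varnish's PCRE2 matching, not part of the original configuration.

```python
from __future__ import annotations

import re

# Hypothetical Python model of two helper subroutines from default.vcl.
# Assumes the VCL regexes contain \?, \. and \1 (backslashes restored).
# VCL regsuball() ~ re.sub(); VCL regsub() ~ re.sub(..., count=1).

TRACKING = r"(utm_source|utm_medium|utm_campaign|gclid|cx|ie|cof|siteurl|_ga|PHPSESSID)"
VALUE = r"([A-z0-9_\-\.%25]+)"


def strip_not_allowed_cookies(cookie: str | None) -> str | None:
    """Keep only abcToggle-* cookies; None stands for an unset Cookie header."""
    if not cookie:
        return None
    c = ";" + cookie
    c = re.sub(r"; +", ";", c)                       # ";a=1; b=2" -> ";a=1;b=2"
    c = re.sub(r";(abcToggle-[^;]*)=", r"; \1=", c)  # mark allowed cookies with "; "
    c = re.sub(r";[^ ][^;]*", "", c)                 # drop every unmarked cookie
    c = re.sub(r"^[; ]+|[; ]+$", "", c)              # trim leftover separators
    return c or None                                 # empty -> unset req.http.Cookie


def strip_google_query_params(url: str) -> str:
    """Remove tracking parameters unless the URL is excluded by the VCL guard."""
    if (re.search(r"(\?|&)" + TRACKING + "=", url)
            and not re.search(r"(tradedoubler|index.html|deeplink|affiliate)", url)
            and not re.search(r"^/[0-9]{6}", url)):
        url = re.sub("&" + TRACKING + "=" + VALUE, "", url)
        url = re.sub(r"\?" + TRACKING + "=" + VALUE, "?", url)
        url = re.sub(r"\?&", "?", url, count=1)
        url = re.sub(r"\?$", "", url, count=1)
    return url


if __name__ == "__main__":
    print(strip_not_allowed_cookies("Laminas_Auth=abc; abcToggle-x=true; _ga=1"))
    print(strip_google_query_params("/page?utm_source=x&id=5"))
```

With these assumptions, a cookie header that only contains the Laminas_Auth session cookie (as in the varnishlog above) ends up unset, while abcToggle-* cookies survive; requests to the CMS host never reach these helpers, because vcl_recv passes PUT requests and the cms-application host before they are called.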

Latest findings after debugging the code base:

while (!feof($this->socket)) {
    if (($response = fgets($this->socket, 1024)) === false) {
        $metaData = stream_get_meta_data($this->socket);
        if ($metaData['timed_out']) {
            throw new RuntimeException('Varnish CLI timed out');
        }
        throw new RuntimeException('Unable to read from Varnish CLI');
    }
    if (strlen($response) === 13 && preg_match('/^(\d{3}) (\d+)/', $response, $matches)) {
        $statusCode = (int) $matches[1];
        $responseLength = (int) $matches[2];
        break;
    }
}

fgets($this->socket, 1024) returns data when Varnish is installed as a service inside the application container.

fgets($this->socket, 1024) returns false when Varnish runs in a separate container.
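For context, the 13 in strlen($response) === 13 matches the framing of the Varnish CLI protocol: each response starts with a status line made of a three-digit status code, a space, the body length padded to eight characters, and a newline. The Python sketch below mirrors the PHP loop under that assumption (the function names are hypothetical, not from the question's code base). It raises where fgets() would return false, i.e. when nothing at all can be read from the socket, which is the symptom you would expect if the CLI is not reachable from the application container.

```python
from __future__ import annotations

import io
import re

# Assumed CLI status line: 3-digit status, a space, body length padded to
# 8 characters, newline; 13 bytes in total, as the PHP strlen() check expects.
STATUS_LINE = re.compile(rb"(\d{3}) (\d+) *\n")


def parse_status_line(line: bytes) -> tuple[int, int] | None:
    """Return (status, body_length), or None if line is not a status line."""
    if len(line) != 13:
        return None
    m = STATUS_LINE.fullmatch(line)
    if m is None:
        return None
    return int(m.group(1)), int(m.group(2))


def read_cli_response(stream: io.BufferedIOBase) -> tuple[int, bytes]:
    """Skip lines until a status line is found, then read the body.

    Raises ConnectionError when the stream yields nothing, the analogue of
    fgets() returning false in the PHP loop.
    """
    while True:
        line = stream.readline()
        if not line:
            raise ConnectionError("Unable to read from Varnish CLI")
        parsed = parse_status_line(line)
        if parsed is not None:
            break
    status, length = parsed
    body = stream.read(length)
    stream.read(1)  # the body is terminated by a newline
    return status, body


if __name__ == "__main__":
    # Simulated reply; with a real socket you would use sock.makefile("rb").
    header = b"%-3d %-8d\n" % (200, 5)
    print(read_cli_response(io.BytesIO(header + b"hello\n")))
```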

docker-compose.yml

version: '3.7'

services:
  dev:
    image: xxxxxxx/dev:centos7
    container_name: dev
    hostname: dev
    volumes:
      - ${HOST_BIND_MOUNT_DIR}:${CONTAINER_MOUNT_DIR}
      - /sys/fs/cgroup:/sys/fs/cgroup:ro
    privileged: true
    networks:
      dev-online:
        ipv4_address: 172.100.0.2
    ports:
      - 443:443
      #- 6082:6082
      #- 9001:9001
      #- 5672:5672
      #- 15672:15672
      - 29015:29015
    extra_hosts:
      - dev:127.0.0.1
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-mysql:
    image: xxxxxxx/mysql-5.6:dev
    container_name: dev-mysql
    hostname: dev-mysql
    user: "${V_UID}:${V_GID}"
    volumes:
      - ${HOST_BIND_MOUNT_DIR_MYSQL}:${CONTAINER_MOUNT_DIR_MYSQL}
    networks:
      dev-online:
        ipv4_address: 172.100.0.3
    ports:
      - 3306:3306
    environment:
      - MYSQL_ROOT_PASSWORD:${MYSQL_ROOT_PASSWORD}
      - MYSQL_DATABASE:${MYSQL_DATABASE}
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:127.0.0.1
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-mongo-3:
    image: xxxxxxx/mongo-3.0:dev
    container_name: dev-mongo-3
    hostname: dev-mongo-3
    volumes:
      - ${HOST_BIND_MOUNT_DIR_MONGO_3}:${CONTAINER_MOUNT_DIR_MONGO}
    privileged: true
    networks:
      dev-online:
        ipv4_address: 172.100.0.4
    ports:
      - 27017:27017
    environment:
      - MYSQL_ROOT_PASSWORD="${MYSQL_ROOT_PASSWORD}"
      - MYSQL_DATABASE="${MYSQL_DATABASE}"
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:127.0.0.1
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-mongo-4:
    image: xxxxxxx/mongo-4.4:dev
    container_name: dev-mongo-4
    hostname: dev-mongo-4
    user: "${V_UID}:${V_GID}"
    volumes:
      - ${HOST_BIND_MOUNT_DIR_MONGO_4}:${CONTAINER_MOUNT_DIR_MONGO}
    privileged: true
    networks:
      dev-online:
        ipv4_address: 172.100.0.5
    ports:
      - 27018:27017
    environment:
      - MYSQL_ROOT_PASSWORD="${MYSQL_ROOT_PASSWORD}"
      - MYSQL_DATABASE="${MYSQL_DATABASE}"
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:127.0.0.1
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-solr-6:
    image: xxxxxxx/solr-6.6:stable
    container_name: dev-solr-6
    hostname: dev-solr-6
    networks:
      dev-online:
        ipv4_address: 172.100.0.6
    ports:
      - 8983:8983
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:127.0.0.1
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-solr-8:
    image: xxxxxxx/solr-8.6:dev
    container_name: dev-solr-8
    hostname: dev-solr-8
    user: "${V_UID}:${V_GID}"
    volumes:
      - ${HOST_BIND_MOUNT_DIR_SOLR}:${CONTAINER_MOUNT_DIR_SOLR_8}
    networks:
      dev-online:
        ipv4_address: 172.100.0.7
    ports:
      - 8984:8983
    environment:
      - SOLR_HEAP=2g
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:127.0.0.1
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-rethinkdb:
    image: rethinkdb
    container_name: dev-rethinkdb
    hostname: dev-rethinkdb
    user: "${V_UID}:${V_GID}"
    volumes:
      - ${HOST_BIND_MOUNT_DIR_RETHINKDB}:${CONTAINER_MOUNT_DIR_RETHINKDB}
    networks:
      dev-online:
        ipv4_address: 172.100.0.8
    ports:
      - 28015:28015
      - 8080:8080
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:127.0.0.1
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-memcached:
    image: memcached
    container_name: dev-memcached
    hostname: dev-memcached
    user: "${V_UID}:${V_GID}"
    networks:
      dev-online:
        ipv4_address: 172.100.0.9
    ports:
      - 11211:11211
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:127.0.0.1
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:172.100.0.11

  dev-varnish:
    image: varnish:7.3.0-alpine
    container_name: dev-varnish
    hostname: dev-varnish
    user: '0'
    volumes:
      - "./default.vcl:/etc/varnish/default.vcl:ro"
      - "./secret:/etc/varnish/secret"
    ports:
      - "8081:80"
    tmpfs:
      - /var/lib/varnish/varnishd:exec
    networks:
      dev-online:
        ipv4_address: 172.100.0.10
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-varnish:127.0.0.1
      - dev-memcached:172.100.0.9
      - dev-rabbitmq:172.100.0.11

  dev-rabbitmq:
    image: rabbitmq:3.9.27-management-alpine
    container_name: dev-rabbitmq
    environment:
      RABBITMQ_DEFAULT_PASS: ${RABBITMQ_DEFAULT_PASS}
      RABBITMQ_DEFAULT_USER: ${RABBITMQ_DEFAULT_USER}
      RABBITMQ_DEFAULT_VHOST: ${RABBITMQ_DEFAULT_VHOST}
    volumes:
      - ${HOST_BIND_MOUNT_DIR_RMQ}/data:${CONTAINER_MOUNT_DIR_RMQ}
      - ${HOST_BIND_MOUNT_DIR_RMQ}/log:/var/log/rabbitmq
    ports:
      - "5672:5672"
      - "15672:15672"
    networks:
      dev-online:
        ipv4_address: 172.100.0.11
    extra_hosts:
      - dev:172.100.0.2
      - dev-mysql:172.100.0.3
      - dev-mongo-3:172.100.0.4
      - dev-mongo-4:172.100.0.5
      - dev-solr-6:172.100.0.6
      - dev-solr-8:172.100.0.7
      - dev-rethinkdb:172.100.0.8
      - dev-memcached:172.100.0.9
      - dev-varnish:172.100.0.10
      - dev-rabbitmq:127.0.0.1

networks:
  dev-online:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 172.100.0.0/24

Answers

Hmm…

Did you notice that your application is throwing a 500 HTTP error?

The application container log is probably not the log that helps in your case. It refers to an HTTPS connection (and you probably don't have HTTPS between Varnish and your application), and the requesting IP (172.100.0.1) is not the Varnish container IP (172.100.0.10). You are probably looking at the log of the proxy container that appears to sit in front of your Varnish setup.

You need to make Varnish CLI traffic available from outside the dev-varnish container. You can achieve this by adding the following line to the dev-varnish container in your docker-compose.yml file:

This will open up port 6082 on all network interfaces for CLI traffic. The CLI traffic will be protected through the /etc/varnish/secret file, which is already mounted in the container.

After having enabled global CLI support in your Docker setup, you can connect to the CLI endpoint from your application with the following parameters: host 172.100.0.10, port 6082.

Please ensure that the secret file is also mounted in your dev container and that your code can handle the authentication challenge that leverages the secret file.
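As a rough illustration of that authentication challenge, based on the varnish-cli documentation: when a secret is configured, varnishd greets a new CLI connection with status 107 and a random challenge string, and the client answers with `auth <hex>`, where `<hex>` is the lower-case hex SHA-256 of the challenge, a newline, the exact bytes of the secret file, the challenge again, and a newline. The Python sketch below is not the PHP client from the question; the function names are hypothetical, and the host 172.100.0.10, port 6082 and secret path /etc/varnish/secret are assumptions taken from this setup.

```python
from __future__ import annotations

import hashlib
import socket

# Assumptions based on the setup in the question; adjust to your environment.
CLI_HOST = "172.100.0.10"
CLI_PORT = 6082
SECRET_PATH = "/etc/varnish/secret"


def auth_response(challenge: str, secret: bytes) -> str:
    """Authenticator for the CLI 'auth' command.

    SHA-256 over: challenge, newline, secret-file bytes, challenge, newline.
    """
    data = challenge.encode("ascii") + b"\n" + secret + challenge.encode("ascii") + b"\n"
    return hashlib.sha256(data).hexdigest()


def read_response(stream) -> tuple[int, bytes]:
    """Read one CLI response: 13-byte status line, body, trailing newline."""
    header = stream.readline()
    if len(header) != 13:
        raise ConnectionError(f"unexpected CLI status line: {header!r}")
    status = int(header[:3].decode("ascii"))
    length = int(header[4:12].decode("ascii"))
    body = stream.read(length)
    stream.read(1)  # newline that terminates the body
    return status, body


def connect_cli(host: str = CLI_HOST, port: int = CLI_PORT,
                secret_path: str = SECRET_PATH, timeout: float = 5.0):
    """Open a CLI connection and answer the auth challenge if one is sent."""
    sock = socket.create_connection((host, port), timeout=timeout)
    stream = sock.makefile("rwb")
    status, body = read_response(stream)
    if status == 107:  # authentication required; first body line is the challenge
        challenge = body.split(b"\n", 1)[0].decode("ascii")
        with open(secret_path, "rb") as fh:
            secret = fh.read()  # use the file byte for byte, trailing newline included
        stream.write(b"auth " + auth_response(challenge, secret).encode("ascii") + b"\n")
        stream.flush()
        status, body = read_response(stream)
    if status != 200:
        sock.close()
        raise PermissionError(f"Varnish CLI refused the connection ({status}): {body!r}")
    return sock, stream
```

After `sock, stream = connect_cli()`, each further command (for example `stream.write(b"ping\n")` followed by `stream.flush()`) is answered in the same status-line format and can be read with `read_response(stream)`. If `socket.create_connection` already fails from the dev container, the CLI is not listening on an interface reachable over the Docker network.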