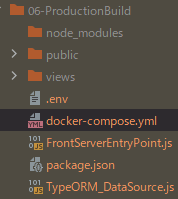

With the following Compose setup:

```yaml
version: "3.5"

services:
  FronServer:
    image: node:16-alpine
    container_name: Example-Production-FrontServer
    working_dir: /var/www/example.com
    volumes:
      - .:/var/www/example.com
      - FrontServerDependencies:/var/www/example.com/node_modules:nocopy
    command: sh -c "echo 'Installing dependencies ...'
      && npm install --no-package-lock
      && node FrontServerEntryPoint.js --environment production"
    ports: [ "8080:8080" ]
    environment:
      - DATABASE_HOST=Database
    depends_on: [ Database ]

  Database:
    image: postgres
    container_name: Example-Production-Database
    ports: [ "5432:5432" ]
    environment:
      - POSTGRES_PASSWORD=pass1234
    volumes:
      - DatabaseData:/data/example.com

volumes:
  FrontServerDependencies: { driver: "local" }
  DatabaseData: {}
```

launching it with docker-compose creates an empty `node_modules` directory on the local machine (see the image above).

How can I keep that directory from appearing on the host machine without adding a Dockerfile?

In my case, I want the installed `node_modules` to be discarded once the container has stopped.

Maybe `tmpfs` is what I need, but I have not found an example that fits my case.
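For the `tmpfs` idea, a minimal sketch of the relevant part of the `FronServer` service might look like this: the named `FrontServerDependencies` volume is replaced by an in-memory mount whose contents are thrown away when the container stops, so `npm install` repopulates it on every start (which the question's `command` already does). Two assumptions worth checking: the mount point still lies inside the `.` bind mount, so Docker will most likely still create an empty `node_modules` directory on the host; and Docker appears to mount `tmpfs` with `noexec` by default, so the `exec` option is added here so that `node_modules/.bin` scripts and native addons can load.

```yaml
services:
  FronServer:
    # ...other settings from the question unchanged...
    volumes:
      - .:/var/www/example.com
    tmpfs:
      # In-memory mount: contents are discarded when the container stops.
      # "exec" is assumed to override Docker's default noexec tmpfs option.
      - /var/www/example.com/node_modules:exec
```

With this change the top-level `FrontServerDependencies` volume declaration is no longer used and can be removed.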


Answers

What you want is an anonymous volume mount. This keeps your host and container versions of `node_modules` separate, which is especially important if you have any natively compiled dependencies and your architectures differ (e.g. a Linux container on a macOS or Windows host). I would also strongly suggest you add a `Dockerfile` for your Node app that installs the dependencies during the build stage. Otherwise you’ll be installing them every time you start the service.

Docker images are a core part of Docker, and I’d embrace them here. An image seems to capture your requirement that the `node_modules` directory not exist on the host and be rebuilt as necessary.

It’s important not to use `volumes:` to inject code into the container. It’s especially important not to use an anonymous volume, or any other kind of volume, for `node_modules`: Docker copies content from an image into a named or anonymous volume on first use, but it has no way to update that content afterwards, so you’ll be stuck with a specific version of your module tree.

A Node `Dockerfile` is fairly boilerplate and mirrors many lines you already have in the Compose file. Also make sure you have a `.dockerignore` file that excludes `node_modules`, so the host’s library tree stays out of the image.

Since all of these settings now live in the image, you can remove them from the Compose file, which can then be trimmed down considerably.
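A trimmed-down Compose file could look roughly like the sketch below. It assumes a `Dockerfile` next to the Compose file that sets the working directory, installs the dependencies, copies in the code, and defines the start command, so the Node service needs only `build: .` plus its runtime settings. The service names are taken from the question; the database volume target is changed to `/var/lib/postgresql/data`, where the official `postgres` image stores its data by default (the question's `/data/example.com` path would not persist the database).

```yaml
version: "3.5"

services:
  FronServer:
    # Assumes a Dockerfile in this directory that installs node_modules
    # into the image and sets the start command; no bind mounts needed.
    build: .
    ports: [ "8080:8080" ]
    environment:
      - DATABASE_HOST=Database
    depends_on: [ Database ]

  Database:
    image: postgres
    ports: [ "5432:5432" ]
    environment:
      - POSTGRES_PASSWORD=pass1234
    volumes:
      # Default data directory of the official postgres image.
      - DatabaseData:/var/lib/postgresql/data

volumes:
  DatabaseData: {}
```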

There are two ways to use this setup. If you build and run the whole stack with Compose, it will do exactly what you initially requested: it will build an isolated copy of the `node_modules` tree in the image, separate from any files that exist on the host. The specific ordering in the `Dockerfile`, combined with Docker’s layer-caching mechanism, means that the expensive library installation won’t be repeated if neither the `package.json` nor the `package-lock.json` file has changed.

You can also run only the database in Docker and run Node directly on your host system. This still captures many of the benefits of Docker (in particular, it is very easy to completely reset your database, and your per-project database is isolated from other projects on your local system), but you also get the simplicity of a local development environment (no special IDE support is required, live reloading works reliably, and tools exist locally when you need to run them).