When I build the Dockerfile below by running this command manually on a Windows notebook:

    docker build -f Dockerfile --no-cache --tag my-postgis:16-3.4 --build-arg DOWNLOAD_URLS="https://download.geofabrik.de/europe/germany/berlin-latest.osm.pbf https://download.geofabrik.de/europe/germany/hamburg-latest.osm.pbf" --build-arg OSM_PASSWORD=osm_password --build-arg POSTGIS_TAG=16-3.4 .

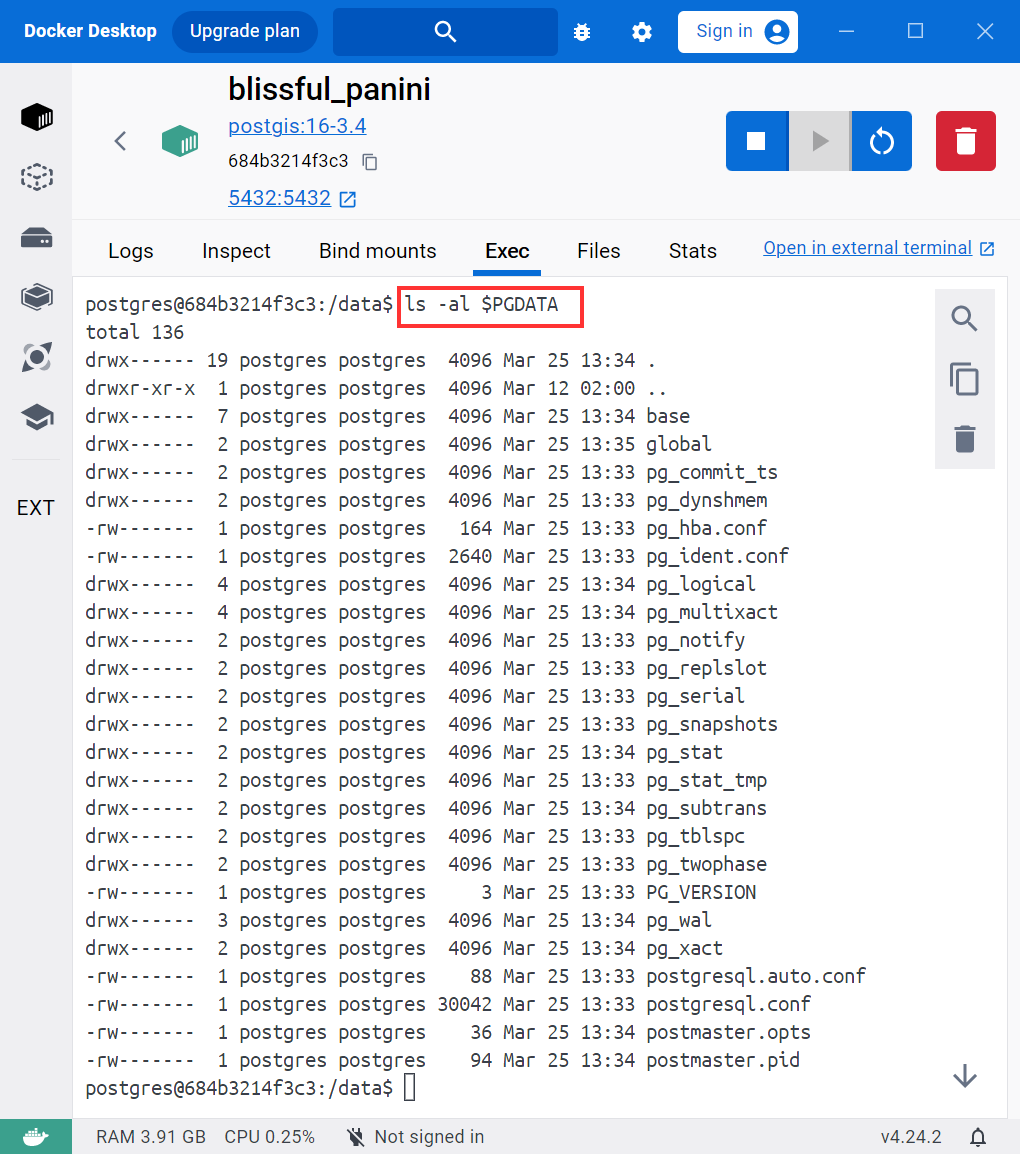

then it works as expected and the prefilled PostgreSQL database is there in the container launched in Docker Desktop:

But when I build the same Dockerfile with an Azure pipeline:

    - task: AzureCLI@2
      displayName: Build and push PostGIS image
      inputs:
        azureSubscription: $(ArmConnection)
        scriptType: bash
        scriptLocation: inlineScript
        inlineScript: |
          image_tag='$(ContainerRegistry)/postgis-${{ parameters.OsmRegion }}:${{ parameters.PostGisTag }}'
          # delete old images to avoid the error "no space left on device"
          docker system prune --all --force
          docker build --file $(Build.SourcesDirectory)/src/Services/PostGIS/Dockerfile --no-cache --tag $image_tag --build-arg POSTGIS_TAG=${{parameters.PostGisTag}} --build-arg OSM_PASSWORD=$(OsmPassword) --build-arg DOWNLOAD_URLS="$(DownloadUrls)" $(Build.SourcesDirectory)/src/
          # building the Docker image takes a long time, so be sure to acr login just before pushing the image
          az acr login -n $(ContainerRegistry)
          docker push $image_tag

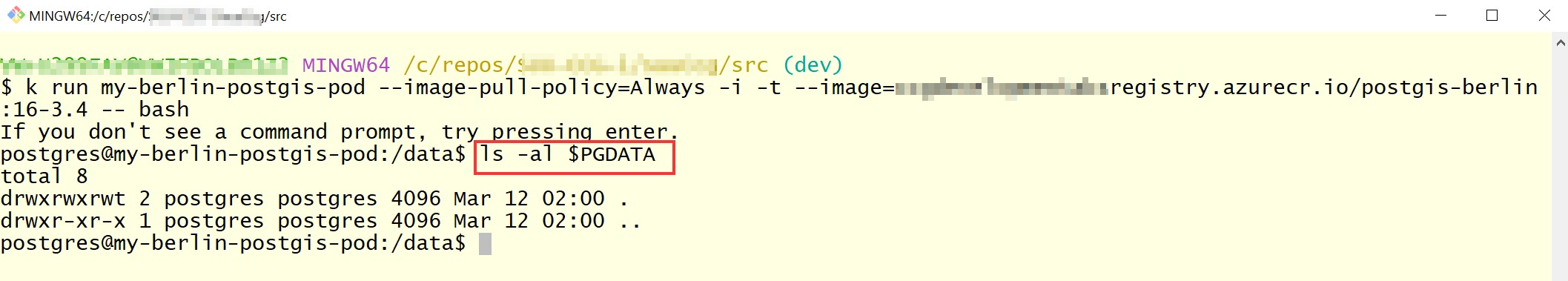

and then pull the built image from ACR and launch it at Kubernetes, then the database folder is empty:

I understand that it is probably not a recommended practice to launch and stop a service during the "docker build".

The reason I am not populating the database by running a script in the /docker-entrypoint-initdb.d folder is that in production we use the 28 GB europe-latest.osm.pbf (not the small Berlin file), so launching a pod would take several hours.

Below is my Dockerfile, which I created by looking at https://github.com/postgis/docker-postgis/blob/master/Dockerfile.alpine.template:

    ARG POSTGIS_TAG
    FROM postgis/postgis:$POSTGIS_TAG
    EXPOSE 5432

    # Install ps, top, netstat, curl, wget, osmium, osm2pgsql
    RUN apt-get update && \
        apt-get upgrade -y && \
        apt install -y procps net-tools curl wget osmium-tool osm2pgsql

    WORKDIR /data
    RUN chown -R postgres:postgres /data
    USER postgres

    # Download one or multiple OSM files
    ARG DOWNLOAD_URLS
    RUN wget --no-verbose $DOWNLOAD_URLS

    # Print summaries of the downloaded OSM files
    RUN for f in *.osm.pbf; do osmium fileinfo -e "$f"; done

    # Merge one or multiple files into a new file map.osm.pbf
    RUN osmium merge *.osm.pbf -o map.osm.pbf

    # Remove all files except the map.osm.pbf
    RUN find . -type f -name '*.osm.pbf' | grep -v 'map.osm.pbf' | xargs rm -f

    ARG OSM_PASSWORD
    ENV PGPASSWORD=$OSM_PASSWORD
    ENV PGUSER=osm_user
    ENV PGDATABASE=osm_database
    ENV PGDATA=/var/lib/postgresql/data

    # create PostgreSQL instance in the $PGDATA folder
    # configure PostgreSQL as recommended by osm2pgsql doc
    # start PostgreSQL and create database and user
    # load data from the map.osm.pbf file into the database
    # remove the map.osm.pbf file and stop PostgreSQL
    # configure password based access for osm_user
    RUN set -eux && \
        pg_ctl init && \
        echo "shared_buffers = 1GB" >> $PGDATA/postgresql.conf && \
        echo "work_mem = 50MB" >> $PGDATA/postgresql.conf && \
        echo "maintenance_work_mem = 10GB" >> $PGDATA/postgresql.conf && \
        echo "autovacuum_work_mem = 2GB" >> $PGDATA/postgresql.conf && \
        echo "wal_level = minimal" >> $PGDATA/postgresql.conf && \
        echo "checkpoint_timeout = 60min" >> $PGDATA/postgresql.conf && \
        echo "max_wal_size = 10GB" >> $PGDATA/postgresql.conf && \
        echo "checkpoint_completion_target = 0.9" >> $PGDATA/postgresql.conf && \
        echo "max_wal_senders = 0" >> $PGDATA/postgresql.conf && \
        echo "random_page_cost = 1.0" >> $PGDATA/postgresql.conf && \
        echo "password_encryption = scram-sha-256" >> $PGDATA/postgresql.conf && \
        pg_ctl start && \
        createuser --username=postgres $PGUSER && \
        createdb --username=postgres --encoding=UTF8 --owner=$PGUSER $PGDATABASE && \
        psql --username=postgres $PGDATABASE --command="ALTER USER $PGUSER WITH PASSWORD '$PGPASSWORD';" && \
        psql --username=postgres $PGDATABASE --command='CREATE EXTENSION IF NOT EXISTS postgis;' && \
        psql --username=postgres $PGDATABASE --command='CREATE EXTENSION IF NOT EXISTS hstore;' && \
        osm2pgsql --username=$PGUSER --database=$PGDATABASE --create --cache=60000 --hstore --latlong /data/map.osm.pbf && \
        rm -f /data/map.osm.pbf && \
        pg_ctl stop && \
        echo '# TYPE DATABASE USER ADDRESS METHOD' > $PGDATA/pg_hba.conf && \
        echo "local all postgres peer" >> $PGDATA/pg_hba.conf && \
        echo "local $PGDATABASE $PGUSER scram-sha-256" >> $PGDATA/pg_hba.conf && \
        echo "host $PGDATABASE $PGUSER 0.0.0.0/0 scram-sha-256" >> $PGDATA/pg_hba.conf

I have also asked the same question in the docker-postgis GitHub discussions.

2 Answers

I have found a solution for the empty `/var/lib/postgresql/data` folder when building a `postgis/postgis:16.3` based Docker image with a prefilled OSM database, and would like to share it here.

After changing just a single line my Dockerfile has built successfully:

The reason seems to be that the parent official postgres Dockerfile contains this conflicting line:

and thus the folder I was trying to use originally was cleared.
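To illustrate the conflict described above: to my knowledge, the official postgres image declares `VOLUME /var/lib/postgresql/data`, and Docker's documentation notes that changes made to a declared volume by later build steps can be discarded (with the legacy builder; BuildKit is documented as keeping them), which might also explain why the two build environments behaved differently. The sketch below is not the author's exact change. It only shows the general idea of pointing `PGDATA` at a directory outside the volume. The path `/var/lib/postgresql/osm-data` and the default tag value are assumptions chosen for illustration.

```dockerfile
ARG POSTGIS_TAG=16-3.4
FROM postgis/postgis:$POSTGIS_TAG

# The parent postgres image declares (to my knowledge):
#   VOLUME /var/lib/postgresql/data
# so data written there by a later RUN step may not end up in the image.

# Assumption: any directory outside the declared volume would do;
# this path is hypothetical, not taken from the original answer.
ENV PGDATA=/var/lib/postgresql/osm-data

# Create the directory as root and hand it over to the postgres user
RUN mkdir -p "$PGDATA" && \
    chown postgres:postgres "$PGDATA" && \
    chmod 700 "$PGDATA"

USER postgres

# Initialize the cluster and list the folder to confirm it is populated
RUN pg_ctl init && \
    ls -al "$PGDATA"
```

One way to check that the files survived the build is to list the folder in the finished image, for example with `docker run --rm --entrypoint ls <image> -al /var/lib/postgresql/osm-data`, where `<image>` stands for whatever tag was built.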

Below is my working Dockerfile (and yes, I understand that the built image is too big, especially with Europe data, and thus I have to improve it further and possibly use `VOLUME` or some Azure storage there):

Example build command:

I checked the same Dockerfile with an `Ubuntu-latest` agent on my side, and it reports an `Out of memory` error:

After I reduced the `--cache` value from `60000` to `10000` in the osm2pgsql command in the Dockerfile, the pipeline works fine on my side and the output of `RUN ls -al $PGDATA` is correct.

The Dockerfile:

The pipeline YAML (to avoid any missing values, I defined the parameters as variables):

The output in the pipeline:
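Separately from the pipeline output, a note on the cache change in this answer: as far as I know, osm2pgsql reads `--cache` as a size in megabytes, so `60000` corresponds to roughly 60 GB, which a hosted build agent may not have. A minimal sketch of making the value adjustable per build environment follows. It assumes a hypothetical build argument `OSM_CACHE_MB` (not part of the original Dockerfile) and that the database, user and extensions were already created in an earlier step, with `PGDATA` pointing to a folder that persists between build steps.

```dockerfile
# Fragment intended to replace the import part of the question's RUN step.
# It relies on PGDATA, PGUSER and PGDATABASE being set as in that Dockerfile.

# Hypothetical build argument; the default is the value that worked on the
# hosted agent according to the answer above.
ARG OSM_CACHE_MB=10000

# Start PostgreSQL, import the merged file with the chosen cache size,
# then clean up and stop the server again
RUN pg_ctl start && \
    osm2pgsql --username=$PGUSER --database=$PGDATABASE --create \
        --cache=$OSM_CACHE_MB --hstore --latlong /data/map.osm.pbf && \
    rm -f /data/map.osm.pbf && \
    pg_ctl stop
```

A larger machine could then override the default at build time with `--build-arg OSM_CACHE_MB=60000`, while the hosted agent keeps the smaller value.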