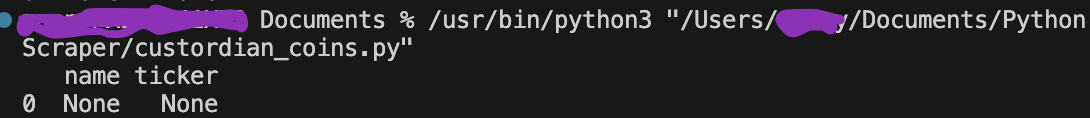

I wrote a Python script to scrape all of the asset listings on https://lumiwallet.com/assets/. I've managed to get the name of the first coin, "Bitcoin", but not its ticker. The site has 27 pages with 40 assets per page.

I'd like to scrape the names and tickers of all assets on all 27 pages, put them into a pandas DataFrame, and then save that to a CSV file with one column for names and one column for tickers. I think the solution might be a for loop that iterates through the name tags. I'm also trying to get each asset's ticker, but I only get the name. Because I'm an amateur, I'm not sure where to put the loop, or whether a loop is even the solution. I've included an image of my output.

```python
from urllib import response
from webbrowser import get
import requests
from bs4 import BeautifulSoup
import csv
from csv import writer
from csv import reader
from urllib.parse import urlparse
import pandas as pd
from urllib.parse import urlencode

API_KEY = '7bbcbb39-029f-4075-97bc-6b57b6e9e68b'

def get_scrapeops_url(url):
    payload = {'api_key': API_KEY, 'url': url}
    proxy_url = 'https://proxy.scrapeops.io/v1/?' + urlencode(payload)
    return proxy_url

r = requests.get(get_scrapeops_url('https://lumiwallet.com/assets/'))
response = r.text

#list to store scraped data
data = []

soup = BeautifulSoup(response,'html.parser')
result = soup.find('div',class_ = 'assets-list__items')

# parse through the website's html
name = soup.find('div',class_ = 'asset-item__name')
ticker = soup.find('div',class__ = 'asset-item__short-name')

#Store data in a dictionary using key value pairs
d = {'name':name.text if name else None,'ticker':ticker.text if ticker else None}
data.append(d)

#convert to a pandas df
data_df = pd.DataFrame(data)
data_df.to_csv("coins_scrape_lumi.csv", index=False)
print(data_df)
```

2 Answers

Why are you going through the ScrapeOps proxy when you could request the site's URL directly? You would still get the HTML.

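In your ticker lookup, `class__` has one underscore too many. BeautifulSoup's keyword argument for the CSS class is `class_`, so write `class_='asset-item__short-name'` to get the ticker.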

To get all of the assets, not just the first one, use `find_all` and loop over the results.

To get the other pages, you need to simulate clicking. bs4 can't do that, so you need Selenium or another browser-automation library.
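A minimal sketch of the `class_` fix and the `find_all` loop together. It parses a small hypothetical HTML sample rather than the live site. Only the class names `assets-list__items`, `asset-item__name`, and `asset-item__short-name` come from the question; the per-item wrapper markup is assumed, and `parse_assets` is just an illustrative name.

```python
from bs4 import BeautifulSoup
import pandas as pd

# Hypothetical sample markup: the class names come from the question's code,
# but the per-item wrapper structure is assumed.
SAMPLE_HTML = """
<div class="assets-list__items">
  <div class="asset-item">
    <div class="asset-item__name">Bitcoin</div>
    <div class="asset-item__short-name">BTC</div>
  </div>
  <div class="asset-item">
    <div class="asset-item__name">Ethereum</div>
    <div class="asset-item__short-name">ETH</div>
  </div>
</div>
"""


def parse_assets(html: str) -> pd.DataFrame:
    """Return one row per asset, with 'name' and 'ticker' columns."""
    soup = BeautifulSoup(html, "html.parser")
    # class_ (one trailing underscore) filters on the HTML class attribute;
    # find_all returns every match instead of only the first one.
    names = soup.find_all("div", class_="asset-item__name")
    tickers = soup.find_all("div", class_="asset-item__short-name")
    # Assumes each asset has exactly one name and one ticker, in the same order.
    rows = [
        {"name": n.get_text(strip=True), "ticker": t.get_text(strip=True)}
        for n, t in zip(names, tickers)
    ]
    return pd.DataFrame(rows, columns=["name", "ticker"])


if __name__ == "__main__":
    df = parse_assets(SAMPLE_HTML)
    df.to_csv("coins_scrape_lumi.csv", index=False)
    print(df)
```

On the real site you would pass `r.text` instead of `SAMPLE_HTML`; this only covers the first page, since the other pages need the clicking mentioned above.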

Because every page of listings on this site is served from the same URL, we can't request each page directly, so I'm using Selenium browser automation to handle the page clicking. The script opens the webpage, scrapes the first page, then repeats a click-and-scrape cycle 26 times: 27 pages are scraped in total, with 26 clicks to the next page. The text contents are collected in a list and concatenated, and string splitting then separates the listing names from the tickers, which are separated by a newline character (`\n`). Finally, they are turned into a pandas DataFrame and exported to CSV in write mode, so the file is overwritten each time the script runs.
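One way the concatenate-and-split step could look, as a sketch rather than the answer's actual code. `split_listings` is a hypothetical helper, and it assumes each page's text is a run of lines alternating name, ticker, name, ticker. Any other text in the list, such as prices, would break that pairing.

```python
from typing import Iterable

import pandas as pd


def split_listings(page_texts: Iterable[str]) -> pd.DataFrame:
    """Turn per-page 'name\\nticker\\n...' text into a name/ticker DataFrame."""
    # Concatenate the text of every scraped page into one newline-separated string.
    combined = "\n".join(text.strip() for text in page_texts)
    # Split on newlines and drop blank lines.
    lines = [line.strip() for line in combined.split("\n") if line.strip()]
    # Assumption: lines alternate name, ticker, name, ticker, ...
    if len(lines) % 2 != 0:
        raise ValueError("expected name/ticker pairs, got an odd number of lines")
    return pd.DataFrame({"name": lines[0::2], "ticker": lines[1::2]})


if __name__ == "__main__":
    df = split_listings(["Bitcoin\nBTC\nEthereum\nETH"])
    # mode="w" (also pandas' default) overwrites any earlier copy of the file.
    df.to_csv("coins_scrape_lumi.csv", index=False, mode="w")
    print(df)
```

Raising on an odd line count makes a misaligned page fail loudly instead of silently shifting every later ticker onto the wrong name.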

The only thing you should need to do before it works as intended is make sure Selenium is installed and up to date, along with a version of chromedriver that matches your Google Chrome version. Then replace the path in this line, because my chromedriver path won't work for you:

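```python
from selenium.webdriver.chrome.service import Service

# Selenium 4 takes the chromedriver path through a Service object
driver = webdriver.Chrome(service=Service(r"YOUR CHROMEDRIVER PATH HERE"), options=options)
```

Point the path at the location of chromedriver on your machine. Let me know if you have any questions about this. Cheers!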
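The full script from this answer isn't shown, so here is only a sketch of the click-and-scrape loop, assuming Selenium 4. The container selector `div.assets-list__items` comes from the question's code. `NEXT_BUTTON_SELECTOR` is a hypothetical placeholder that you would need to replace after inspecting the page, and `scrape_page_texts` is an illustrative name. Selenium is imported inside the function so the file can still be loaded where Selenium isn't installed.

```python
PAGES = 27  # 27 pages in total means 26 clicks on "next"
LIST_SELECTOR = "div.assets-list__items"  # container class from the question's code
NEXT_BUTTON_SELECTOR = "button.next-page"  # HYPOTHETICAL: replace with the real selector


def scrape_page_texts(driver_path=None, url="https://lumiwallet.com/assets/", pages=PAGES):
    """Return the visible text of the asset list on each page, one string per page."""
    from selenium import webdriver
    from selenium.common.exceptions import StaleElementReferenceException
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    if driver_path:
        driver = webdriver.Chrome(service=Service(driver_path), options=options)
    else:
        # Without a path, Selenium looks for chromedriver on its own.
        driver = webdriver.Chrome(options=options)

    # Ignore stale-element errors while the list is being re-rendered.
    wait = WebDriverWait(driver, 15, ignored_exceptions=(StaleElementReferenceException,))
    texts = []
    try:
        driver.get(url)
        listing = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, LIST_SELECTOR)))
        texts.append(listing.text)  # first page, before any clicks
        for _ in range(pages - 1):
            old_text = texts[-1]
            wait.until(EC.element_to_be_clickable((By.CSS_SELECTOR, NEXT_BUTTON_SELECTOR))).click()
            # Wait until the list shows different content before reading it.
            wait.until(lambda d: d.find_element(By.CSS_SELECTOR, LIST_SELECTOR).text != old_text)
            texts.append(driver.find_element(By.CSS_SELECTOR, LIST_SELECTOR).text)
    finally:
        driver.quit()  # always close the browser, even if a wait times out
    return texts
```

Calling `scrape_page_texts(r"YOUR CHROMEDRIVER PATH HERE")` should return one string per page. Waiting for the list text to change, rather than sleeping for a fixed time, avoids reading the same page twice when the next page loads slowly. The returned strings can then be split into names and tickers as described above.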