I have two UIImages – let’s call them base and overhead. Their size is the same.

I need a UIImage built like this: where overhead’s pixel is opaque or semi-transparent, use a clear pixel; where overhead’s pixel is fully transparent, use base’s pixel.

I do see that there are solutions for the opposite effect – clear when overhead’s pixels are transparent, base otherwise. What is the best way to combine images this way?

The result can be produced while base is still being generated (with UIGraphicsImageRenderer, using context operations); a separate base image is not needed.

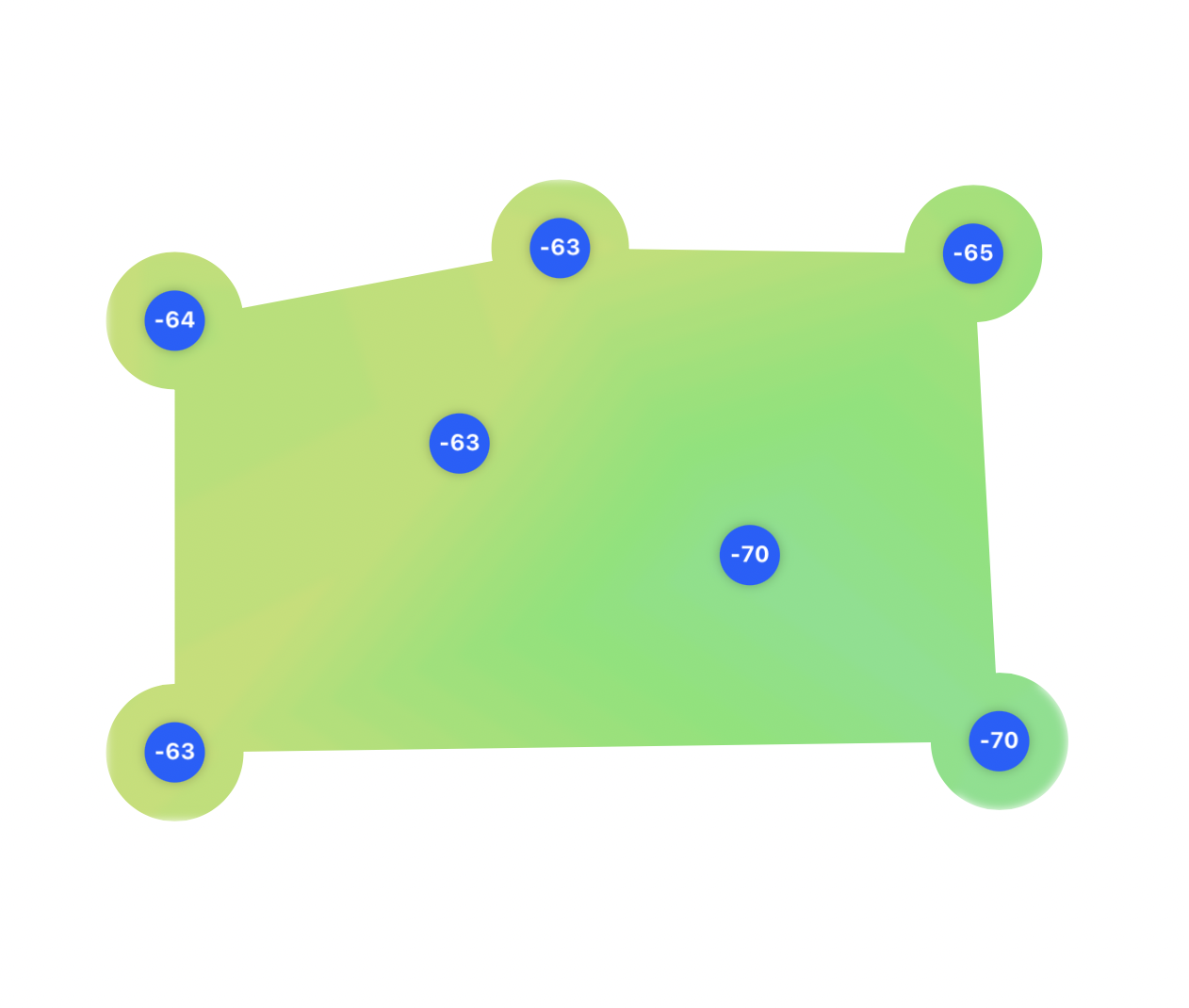

Overhead (the transparency looks grey here, sorry)

base with overhead drawn on top of it (some parts are transparent, some are not)

2 Answers

Found a way to do this using the mask inversion from the question "UIView invert mask MaskView". Combining it with CIFilter.blendWithAlphaMask() produced the desired result. But is this the most efficient way?
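For reference, a minimal sketch of how a Core Image version of this could look. It is not the exact code from the linked question: the alpha inversion is done here with a CIColorMatrix filter rather than a view-based mask, and the function name `maskedWithCoreImage` is hypothetical. It assumes both images have the same pixel size.

```swift
import UIKit
import CoreImage
import CoreImage.CIFilterBuiltins

/// Hypothetical helper (name and signature are assumptions for illustration).
/// Keeps `base` where `overhead` is transparent and clears it where `overhead` is opaque.
func maskedWithCoreImage(base: UIImage,
                         overhead: UIImage,
                         context: CIContext = CIContext()) -> UIImage? {
    guard let baseCI = CIImage(image: base),
          let overheadCI = CIImage(image: overhead) else { return nil }

    // Invert the alpha channel of the overhead image: newAlpha = 1 - oldAlpha.
    let invert = CIFilter.colorMatrix()
    invert.inputImage = overheadCI
    invert.aVector = CIVector(x: 0, y: 0, z: 0, w: -1)
    invert.biasVector = CIVector(x: 0, y: 0, z: 0, w: 1)
    guard let invertedMask = invert.outputImage else { return nil }

    // Show the base image wherever the inverted mask has alpha,
    // and a transparent background everywhere else.
    let blend = CIFilter.blendWithAlphaMask()
    blend.inputImage = baseCI
    blend.backgroundImage = CIImage.empty()
    blend.maskImage = invertedMask

    guard let output = blend.outputImage,
          let cgImage = context.createCGImage(output, from: baseCI.extent) else { return nil }
    return UIImage(cgImage: cgImage, scale: base.scale, orientation: base.imageOrientation)
}
```

Creating a CIContext is relatively expensive, so if this runs repeatedly it is probably worth passing in a shared one.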

You can do this with UIGraphicsImageRenderer and make it a bit easier than using CIFilter…

You said your "base" and "overhead" images will be the same size, so I grabbed the images you posted and made the gray circles transparent.

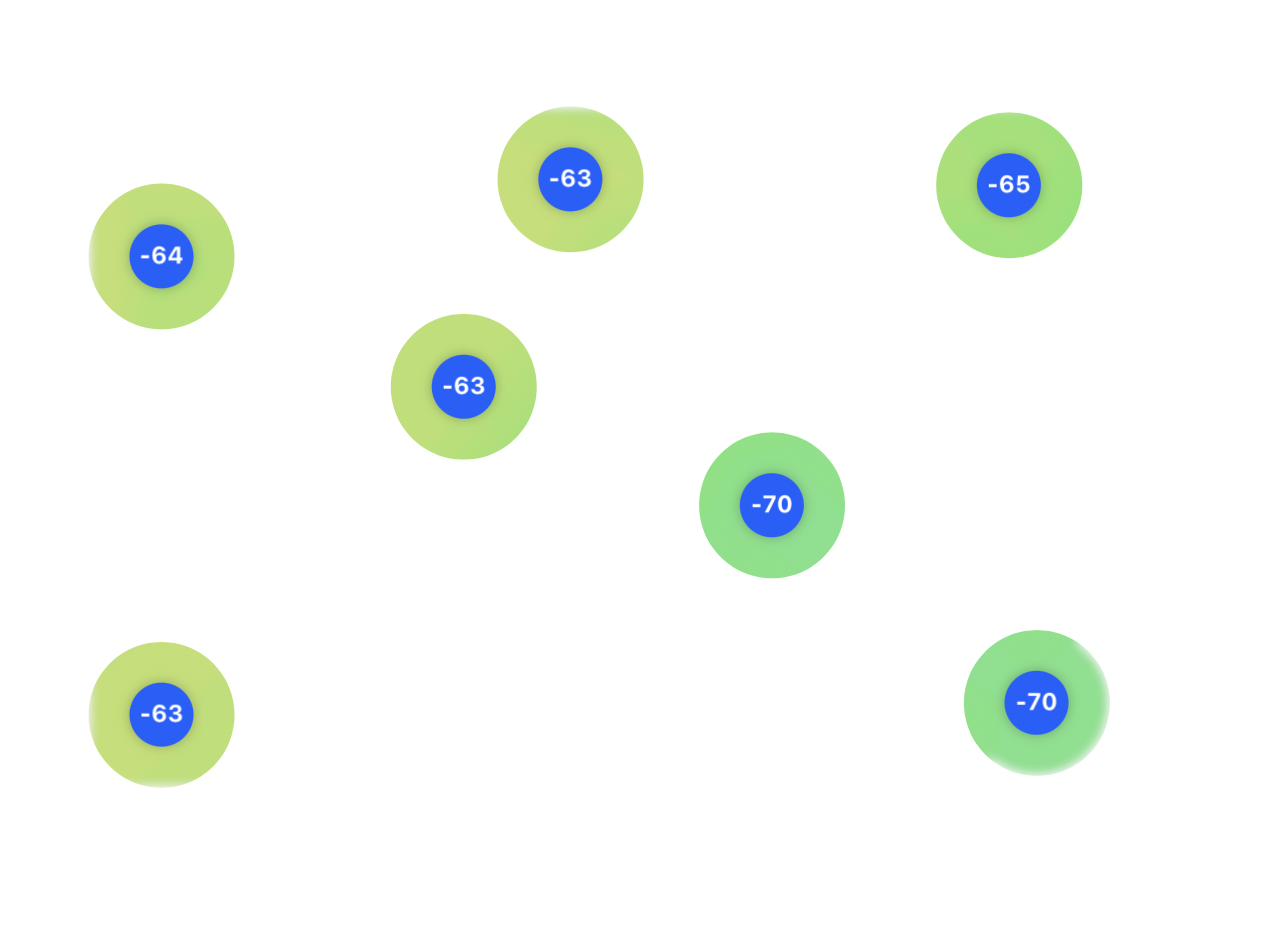

Base Image (white is opaque white, although it doesn’t really matter):

Overhead Image (white is transparent):

We can use this extension:

and call it like this:
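A minimal sketch of what such an extension and its call site might look like. The method name `erasing(with:)`, the wrapper function, and the asset names "base" and "overhead" are all assumptions for illustration. The approach draws the base image and then draws the overhead image with the `.destinationOut` blend mode, which keeps the destination multiplied by (1 - source alpha).

```swift
import UIKit

extension UIImage {
    /// Hypothetical helper (the name is an assumption).
    /// Returns a copy of the receiver with its pixels erased in proportion
    /// to the alpha of `overhead` at the same position.
    func erasing(with overhead: UIImage) -> UIImage {
        let format = UIGraphicsImageRendererFormat()
        format.scale = scale     // keep the receiver's pixel density
        format.opaque = false    // the result needs an alpha channel
        let renderer = UIGraphicsImageRenderer(size: size, format: format)
        return renderer.image { _ in
            let rect = CGRect(origin: .zero, size: size)
            // Draw the base image normally.
            draw(in: rect)
            // .destinationOut: result = destination * (1 - source alpha),
            // so opaque overhead pixels clear the base underneath them.
            overhead.draw(in: rect, blendMode: .destinationOut, alpha: 1.0)
        }
    }
}

/// Example call site; "base" and "overhead" are assumed asset names.
func makeMaskedImage() -> UIImage? {
    guard let baseImage = UIImage(named: "base"),
          let overheadImage = UIImage(named: "overhead") else { return nil }
    return baseImage.erasing(with: overheadImage)
}
```

Note that with this blend mode a semi-transparent overhead pixel only partially erases the base pixel. If semi-transparent pixels must become fully clear, the overhead alpha would need to be thresholded first.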

Here’s a quick example:
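A self-contained sketch of such an example. The class name and the generated stand-in images (a blue square for the base, a yellow circle for the overhead) are assumptions that replace the posted images, and the masking is done inline so the snippet runs on its own.

```swift
import UIKit

final class MaskExampleViewController: UIViewController {
    override func viewDidLoad() {
        super.viewDidLoad()
        view.backgroundColor = .systemBackground

        let size = CGSize(width: 100, height: 100)
        let rect = CGRect(origin: .zero, size: size)
        let renderer = UIGraphicsImageRenderer(size: size)

        // Stand-in base image: an opaque blue square.
        let baseImage = renderer.image { ctx in
            UIColor.systemBlue.setFill()
            ctx.fill(rect)
        }
        // Stand-in overhead image: an opaque circle on a transparent background.
        let overheadImage = renderer.image { ctx in
            UIColor.systemYellow.setFill()
            ctx.cgContext.fillEllipse(in: rect.insetBy(dx: 20, dy: 20))
        }
        // Base with the overhead's opaque area cut out of it.
        let maskedImage = renderer.image { _ in
            baseImage.draw(in: rect)
            overheadImage.draw(in: rect, blendMode: .destinationOut, alpha: 1.0)
        }

        // Stack the three images vertically in the middle of the screen.
        let stack = UIStackView()
        stack.axis = .vertical
        stack.spacing = 8
        stack.translatesAutoresizingMaskIntoConstraints = false
        for image in [baseImage, overheadImage, maskedImage] {
            let imageView = UIImageView(image: image)
            imageView.backgroundColor = .red  // makes frames and transparency visible
            stack.addArrangedSubview(imageView)
        }
        view.addSubview(stack)
        NSLayoutConstraint.activate([
            stack.centerXAnchor.constraint(equalTo: view.centerXAnchor),
            stack.centerYAnchor.constraint(equalTo: view.centerYAnchor)
        ])
    }
}
```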

Gives this output:

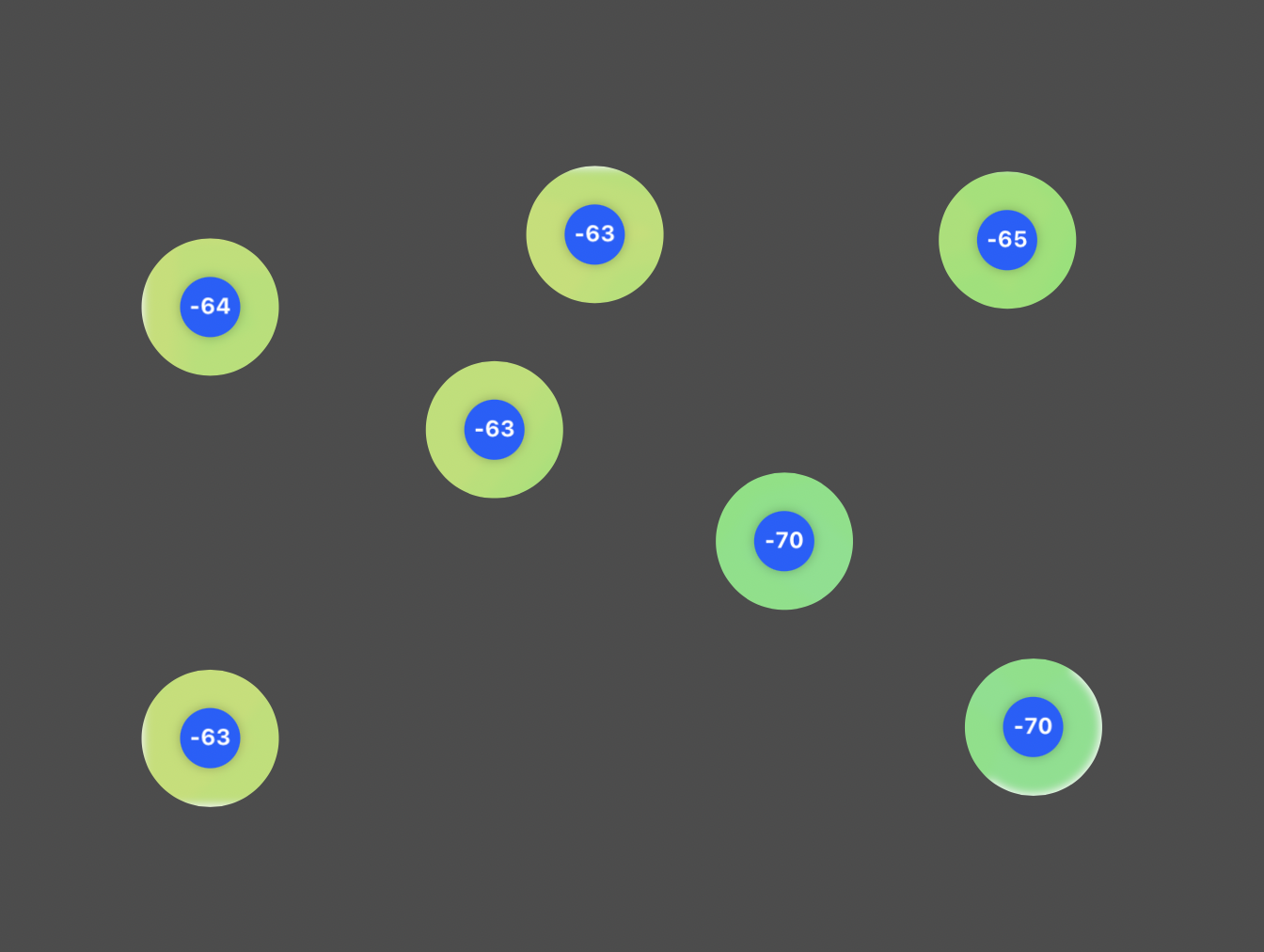

baseImage, overheadImage, maskedImage

The image views all have a red background, so we can see the frames (and see the transparency).