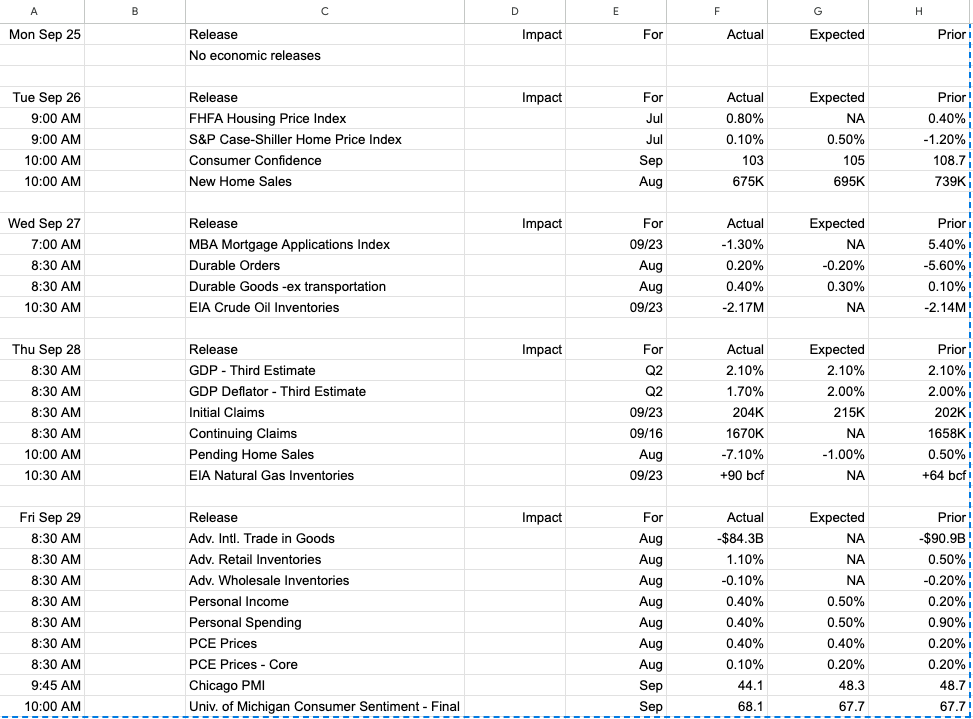

I want to download the whole table from this webpage. It has five sub-tables, each with its own thead and tbody, under `table[class="calendar"]`. My code below pulls all the thead texts and all the tbody texts as a whole, but doesn't group them by sub-table.

I want to pull the thead texts and tbody texts from each sub-table and then combine them into one table, so that the data is organized in the same arrangement as the webpage shows. How can I do that? Thank you!

    function test() {
      const url = "https://finviz.com/calendar.ashx";
      const res = UrlFetchApp.fetch(url, {
        muteHttpExceptions: true,
      }).getContentText();
      const $ = Cheerio.load(res);

      // Collect every header cell on the page as one flat array
      var thead = $("thead")
        .find("th")
        .toArray()
        .map(el => $(el).text());
      var tableHead = [],
        column = 9;
      while (thead.length) tableHead.push(thead.splice(0, column)); // Convert 1D array to 2D array
      console.log(tableHead);

      // Collect every body cell on the page as one flat array
      var tcontents = $("body > div.content")
        .find("td")
        .toArray()
        .map(el => $(el).text());
      var idx = tcontents.indexOf("No economic releases");
      // indexOf returns -1 when nothing is found, so compare against -1 explicitly
      if (idx !== -1) tcontents.splice(idx + 1, 0, "", "", "", "", "", ""); // Add empty elements to match the number of table columns
      var tableContents = [];
      while (tcontents.length)
        tableContents.push(tcontents.splice(0, column)); // Convert 1D array to 2D array
      tableContents.pop(); // Remove last empty array
      console.log(tableContents);
    }

2 Answers

I figured out a solution as below. I'm using Google Apps Script.

I don't see any sub tables (tables inside of other tables) here, nor do I see `table[class="calendar"]`. In general, avoid `[class=...]` syntax since it's ultra-rigid and fails if there are other classes present, or the classes are in a different order. Prefer `table.calendar`. Try using nesting in your scraping to preserve the structure.
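The nesting suggestion could look something like the sketch below. It assumes, as the question does, that each day's block is its own table element with one thead and one tbody; since that selector may not match the live page, it is passed in as a parameter rather than hard-coded. `extractTables` and `combineTables` are hypothetical helper names, and `$` is the object returned by `Cheerio.load`.

```javascript
// Hypothetical helper: scrape each matching table separately so that its
// header cells stay grouped with its own body rows.
// `$` is the value returned by Cheerio.load(html); `selector` is an assumption
// about the page markup (for example "table.calendar").
function extractTables($, selector) {
  return $(selector)
    .toArray()
    .map(table => ({
      head: $(table).find("thead th").toArray().map(th => $(th).text().trim()),
      rows: $(table)
        .find("tbody tr")
        .toArray()
        .map(tr => $(tr).find("td").toArray().map(td => $(td).text().trim())),
    }));
}

// Hypothetical helper: flatten the per-table objects into one 2D array,
// each header row followed by that table's body rows. Short rows (such as a
// single "No economic releases" cell) are padded with "" to the header width.
function combineTables(tables) {
  return tables.flatMap(({ head, rows }) => {
    const width = head.length;
    const padded = rows.map(row =>
      row.concat(Array(Math.max(0, width - row.length)).fill(""))
    );
    return [head, ...padded];
  });
}
```

In the question's script this could be called as `combineTables(extractTables($, "table.calendar"))` and the resulting 2D array logged with `console.log`. Padding per table replaces the manual `indexOf` and `splice` fix-up, because each row is compared against its own table's header width.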

`while` and `splice` are sidelines, not usually used for much of anything. `map` is the primary array transformation tool.
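As an illustration of that point, the question's `while`/`splice` loop, which cuts a flat array of cell texts into rows of nine, could be written as a mapping instead. This is only a sketch: `chunk` is a hypothetical helper name, and it relies on the mapping callback of `Array.from` together with `slice`.

```javascript
// Hypothetical helper: split a flat array into rows of `width` items
// without modifying the input array.
function chunk(cells, width) {
  const rowCount = Math.ceil(cells.length / width);
  // Build one row per index by slicing the matching window out of `cells`
  return Array.from({ length: rowCount }, (_, i) =>
    cells.slice(i * width, (i + 1) * width)
  );
}
```

Unlike `splice`, `slice` leaves `cells` untouched, so the flat array is still available afterwards, and a final partial row is kept rather than needing a separate `pop`.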