I am handling a streamed response whose Content-Type is text/event-stream using fetch, a reader, and a decoder. However, the reader only seems to return after all chunks have been received. This is not what I expected: I thought the reader would return each chunk as soon as it arrived. Am I handling the stream response the wrong way?

    const sseController = new AbortController();

    fetch(url, {
      method: 'POST',
      mode: 'cors',
      credentials: 'include',
      headers: {
        Accept: 'text/event-stream,application/json',
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({
        ...params,
      }),
      signal: sseController.signal,
    }).then(async (response) => {
      console.log('sselog::: open', new Date());
      if (!response.ok || !response.body) {
        // TODO onerror
        return;
      }
      const reader = response.body.getReader();
      const decoder = new TextDecoder('utf-8');
      while (true) {
        const { done, value } = await reader.read();
        console.log('sselog::: while', value);
        if (done) {
          return;
        }
        // Reuse one decoder; { stream: true } handles characters split across chunks.
        const str = decoder.decode(value, { stream: true });
        // TODO onmessage
      }
    });

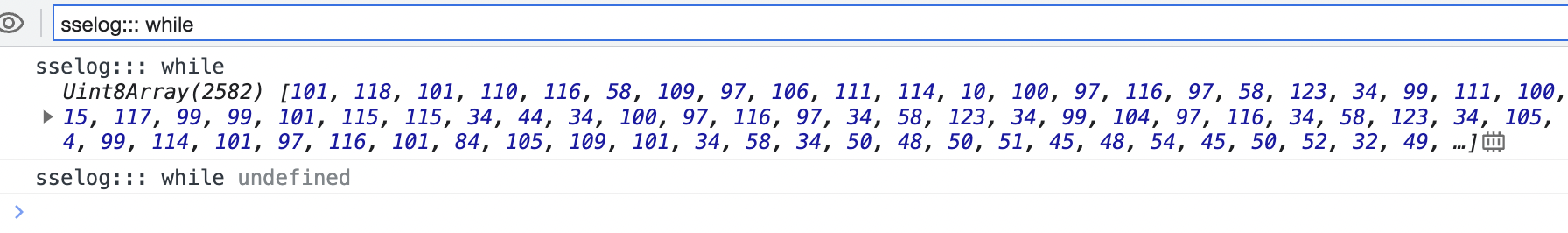

You can see that sselog::: while is logged only once, even though two messages were actually received from the backend, with types major and message.

2 Answers

I finally figured it out: set server compression to false.
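The answer does not say which server or compression layer was involved, so the following is only a rough sketch. It assumes a Node server whose compression layer accepts a per-response filter (the `compression` middleware for Express takes a `filter` option of this shape). The name `shouldCompress` is hypothetical; the idea is to skip event streams while leaving other responses alone.

```javascript
// Hypothetical filter: returns false for Server-Sent Events responses so that
// a compression layer leaves them alone and chunks are not held back.
function shouldCompress(req, res) {
  const contentType = String(res.getHeader('Content-Type') || '');
  // Compare only the media type, ignoring parameters such as "; charset=utf-8".
  const mediaType = contentType.split(';')[0].trim().toLowerCase();
  // A real filter would probably delegate to the library's default check
  // for everything that is not an event stream.
  return mediaType !== 'text/event-stream';
}

// Assumed wiring with Express and the `compression` package (not from the question):
//   app.use(compression({ filter: shouldCompress }));
```

This keeps compression on for ordinary responses, which may be preferable to switching it off globally.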

I suspect the problem isn't in your fetch code but in the backend. Your HTTP server shouldn't buffer the response; it must send each chunk as soon as it is produced.
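As an illustration of what "send immediately" could look like, here is a minimal sketch for a plain Node `http` server. The backend in the question is unknown, so the handler name `handleSse`, the helper `formatSseEvent`, the payloads, and the delay are all assumptions; only the event types `major` and `message` come from the question.

```javascript
// Format one Server-Sent Events message: an "event:" line, a "data:" line,
// and a blank line that terminates the event.
function formatSseEvent(type, data) {
  return `event: ${type}\ndata: ${JSON.stringify(data)}\n\n`;
}

// Hypothetical handler: writes the first event right away and a second one
// after `delayMs`, so a client can observe two separate chunks.
function handleSse(req, res, delayMs = 1000) {
  res.writeHead(200, {
    'Content-Type': 'text/event-stream',
    'Cache-Control': 'no-cache',
  });
  // Push the headers out now instead of waiting for the first body write.
  res.flushHeaders();
  res.write(formatSseEvent('major', { step: 1 }));
  setTimeout(() => {
    res.write(formatSseEvent('message', { step: 2 }));
    res.end();
  }, delayMs);
}

// Usage (the port is arbitrary):
//   const http = require('node:http');
//   http.createServer(handleSse).listen(3000);
```

If a proxy or compression layer sits in front of such a handler, it can still hold the chunks back, so that layer needs checking too.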

Here’s the test with chunked fetch:

Display text from a stream XHR response chunk by chunk
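The linked test is not reproduced here. As a stand-in, this is a minimal sketch of reading a response body chunk by chunk with the standard streams API; `readChunks` is a hypothetical helper name and the URL in the usage comment is a placeholder.

```javascript
// Read a byte stream chunk by chunk and pass each decoded piece of text to
// `onChunk` as soon as it arrives.
async function readChunks(stream, onChunk) {
  const reader = stream.getReader();
  // One decoder for the whole stream; { stream: true } keeps multi-byte
  // characters intact when they are split across chunk boundaries.
  const decoder = new TextDecoder('utf-8');
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    onChunk(decoder.decode(value, { stream: true }));
  }
  // Flush any bytes still buffered in the decoder.
  const rest = decoder.decode();
  if (rest) onChunk(rest);
}

// Usage with fetch (placeholder URL):
//   const response = await fetch('/events');
//   await readChunks(response.body, (text) => console.log(new Date(), text));
```

If the timestamps logged by the callback are all identical, the chunks were most likely merged before they reached the browser, which points at the server or an intermediary rather than at the reading loop.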