I have a huge dataset on two tables (users, pos_transactions), and I need to execute a join on them.

I wrote the query both with join and subquery approches. Both looks Non-optimal:

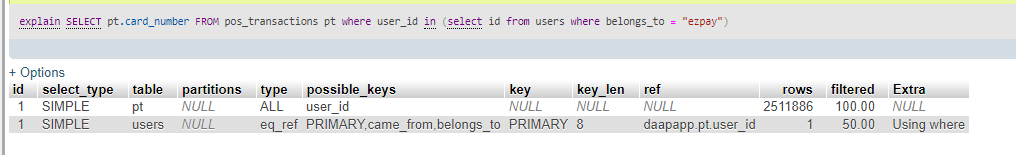

explain SELECT pt.card_number

FROM pos_transactions pt

where user_id in (select id from users where belongs_to = "ezpay")

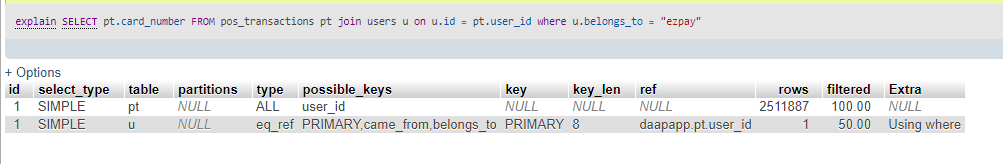

explain SELECT pt.card_number

FROM pos_transactions pt

join users u on u.id = pt.user_id

where u.belongs_to = "ezpay"

See? In both cases over 2M rows will be scanned. Noted that I have these two indexes:

- `users(belongs_to)`

- `pos_transactions(user_id)`

Also, I have tried force index() for both indexes on both queries, but still it does full scan. Any idea how can I get the result in an optimal way?

Question posted in

Question posted in

2

Answers

you do not write properly your query because string must not use double quote but single quote. Double quote is reserved for SQL identifiers like COLUMN’s name…

to use and index for a big table, you must have all the columns used by the table, not aonly the seeked ones…

Do you have the following indexes :

?

For whatever reason, it seems to think it shouldn’t start with reading users, and that’s going to get you the best plan possible (unless your query is going to end up selecting a large percentage of users and/or pos_transactions rows). You can force it with straight_join:

The only possible further improvement would be having a (user_id,card_number) covering index on pos_transactions, but that’s not likely to make a huge difference.