I have a very simple mysql query join for storing records of instagram profiles and their posts.

profiles table = 2500 records (PK = p.id)

post_files table = 800,000 records (PK = pf.id)

SELECT

p.id,

p.instagram_id,

pf.filename,

p.last_updated_on,

count(pf.id) as total_images

FROM

profiles p JOIN post_files pf ON p.instagram_id = pf.instagram_id

WHERE

pf.filetype = 'photo'

group by

p.id

order by

p.last_updated_on ASC

LIMIT 0,200;

I even have single column indexes added on columns:

- pf.filetype

- p.last_updated_on

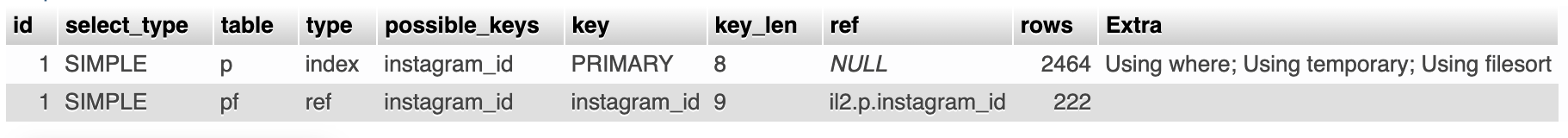

Using the EXPLAIN syntax on the query yields the following result:

The query is so basic with a single table inner join but takes about 30 seconds to run. The database has the potential to grow to 10 million records but even at 800K records, it is unusable. Makes me think I am missing something very fundamental in a basic table join with sort.

What can I do to make this run faster?

2

Answers

I would suggest try removing group by from the query if ID is unique. Also, check the buffer size in Mysql configuration.

Your query is invalid. So before talking about performance, you should get the query fixed. You group by

profiles.id, but you selectpost_files.filename. Which? There may be thousands of file names linked to a profile. The DBMS should raise an error here, but you say you can run it. That means that you run in MySQL’s notorious cheat mode that allows invalid aggregation queries. In early MySQL versions this cheat mode was even the default, which either means you are running an old MySQL version or you changed the default to cheat mode. You shouldn’t do either. In MySQL, always work in full group by mode:Then you are joining on an ID that is not unique in either of the two tables, if I understand you correctly. This means your join can end up with 0 to 2,500 x 800,000 = 2,000,000,000 result rows. (0 with no ID matching, 2 billions with all IDs maching, i.e. all IDs being the same. Or any number between.) The only condition for limiting this result is the filetype, which you want to be "photo", but well, as this is about Instagram, this may not be very limiting. In the worst case, the DBMS tries to sort 2 billions of joined rows.

Let us see, what the DBMS is supposed to do. I am illustrating the approach I might take, the DBMS may choose another approach to get to the same result.

The first question is: Is this really what you want? Show all Instagram photos of the latest updated profile and then maybe some more? This looks like a weird task to me.

If you want this, then we can help the DBMS to find the latest updated profiles quickly and get their Instagram IDs for the join:

And then we want to find the Instragram’s photo files quickly. We come with an Instagram ID and want to know the file type:

If that doesn’t help, then this may be one of the rare cases where using a loop in your app and running separate queries (one per lately updated profile) may be beneficial.