in Azure databricks, need to connect to a table in odbc sql server and create a dataframe and create exactly the same table in databricks

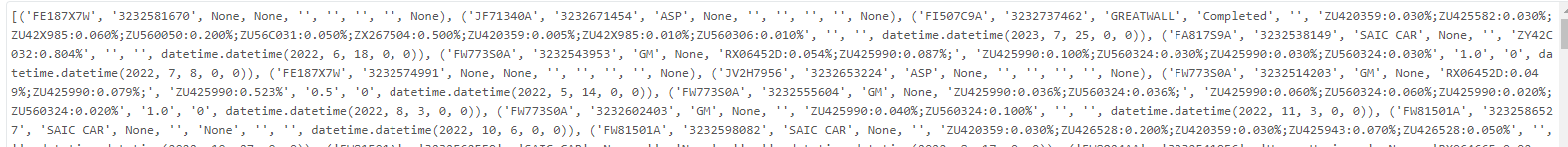

I have already completed the below codes and I can see as attached. How to get the structured table, create the dataframe with the same info?

Could you please help with the scripts?

Below are what i have now:

pip install pyodbc

import pyodbc

server = 'XXXXXX-prod-db.database.chinacloudapi.cn'

database = 'database-ods'

username = 'os_reader'

password = 'P@ssword'

table = 'table_record'

cnxn = pyodbc.connect(

f'DRIVER={{ODBC Driver 17 for SQL Server}};'

f'SERVER={server};'

f'DATABASE={database};'

f'UID={username};'

f'PWD={password};'

f'SELECT INTO={table}'

)

cursor = cnxn.cursor()

query = "SELECT * FROM " + table

cursor.execute(query)

rows = cursor.fetchall()

print(rows)

2

Answers

You can follow this documentation for getting data.

In this, there are 2 options to get data:

sqlserver.JDBC driver.However, if you want to use ODBC itself, as @Anupam Chand mentioned, you will not get the column names.

But, you can achieve this using pandas:

Here, get data into a pandas dataframe and convert it into a spark dataframe.

pd.read_sql_query("<query>", <connection_object>)Output:You’ve tagged MySQL and title says SQL Server. If it’s MySQL or SQL Server, they both support JDBC.

Spark supports JDBC as a data source.

TL;DR

While running it you need ensure driver is installed on your cluster somehow. E.g.