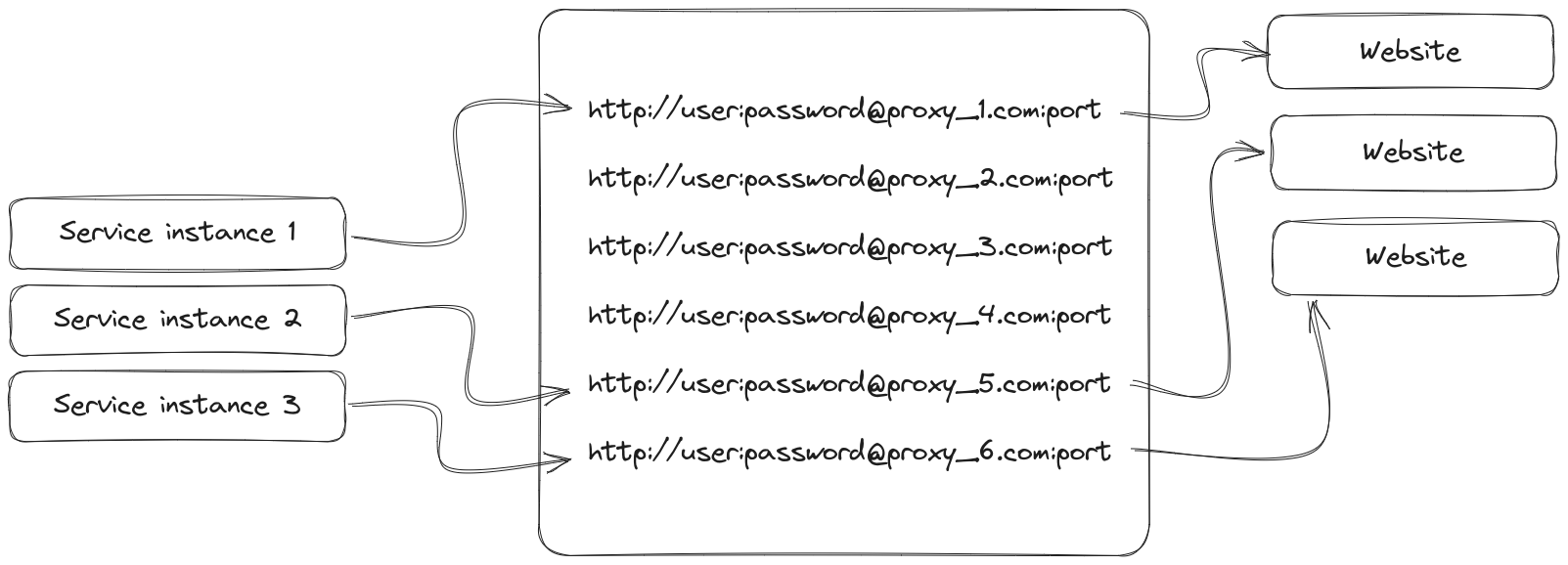

I’ve written a backend service which periodically connects to websites and collects some data. This service supports using proxy servers for sending its requests.

Right now this service has a predefined (hardcoded) pool of proxies. For each request it selects a random proxy from the pool and uses it. The service is scalable and has multiple instances running at the same time.
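The current per-request selection can be sketched in Python roughly as follows. The pool addresses are hypothetical placeholders (documentation-range IPs), and `pick_proxy` is an illustrative helper, not code from the actual service.

```python
from __future__ import annotations

import random

# Hypothetical hardcoded pool; the real proxy addresses are not given.
PROXY_POOL = [
    "203.0.113.10:8080",
    "203.0.113.11:8080",
    "203.0.113.12:8080",
]


def pick_proxy(pool: list[str], rng: random.Random | None = None) -> str:
    """Pick one proxy uniformly at random for a single outgoing request."""
    if not pool:
        raise ValueError("proxy pool is empty")
    # Use the supplied generator (handy for reproducible tests) or the module-level one.
    return (rng or random).choice(pool)
```

Because every instance calls this independently, two instances on different machines can pick the same proxy at the same moment, which is exactly what becomes a problem in the multi-VPS setup below.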

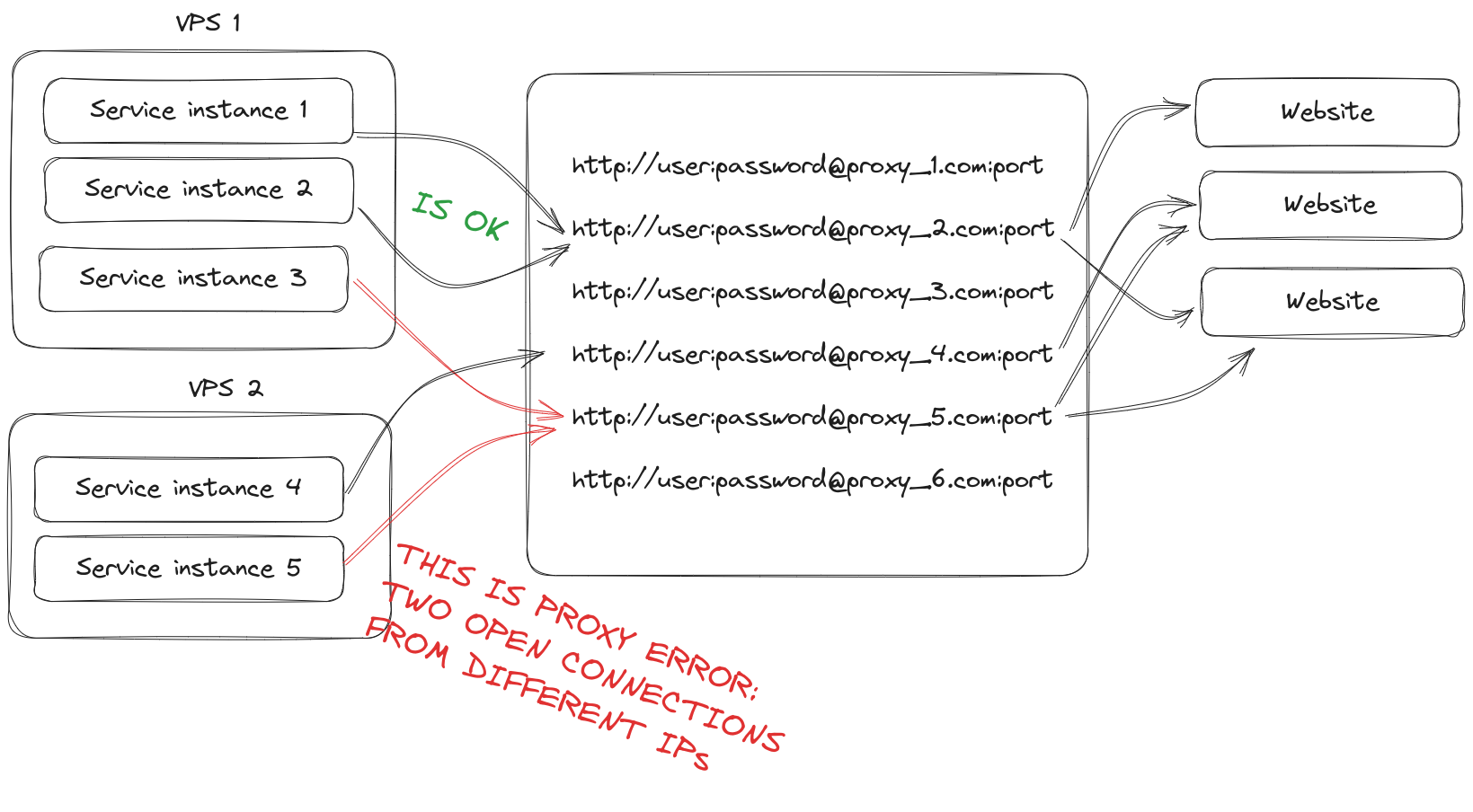

The proxies I am using do not limit the number of concurrent connections. However, if multiple connections are open to the same proxy server, they should all come from the same IP address. This is not a problem while all service instances run on the same machine, but I am thinking about buying an extra VPS to run more instances there.

Updated architecture will look like this:

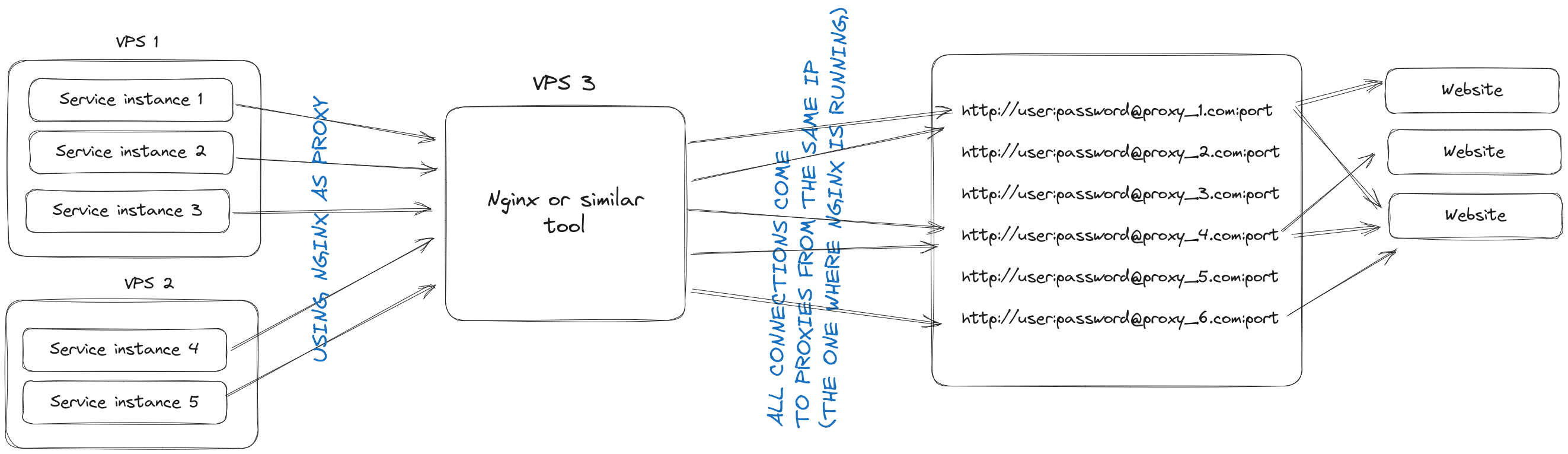

I’m wondering whether I could put Nginx or a similar tool in front of my proxy pool, so that it plays the role of a proxy itself. Something like this:

Could you please help me understand the most efficient way to implement this use case?


Answers

I did my very best trying to apply the solution from @VonC's answer and played with the header configuration a lot, but unfortunately it never worked for me: the proxy server always returned an error with the `400` status code: `Bad Request`. The solution that actually worked for me was as simple as this:

So instead of using the `http` module, I used the `stream` module, which is actually installed with the Nginx server by default (I didn't need to build it separately). This way I also don't need to add any additional header configuration.

Using this approach, I also don't need to store the proxy credentials on my Nginx server. When the service uses the Nginx server as its proxy, it sends the credentials as if they were meant for my Nginx proxy, and Nginx forwards the request with those credentials to the actual proxy. This works because the `stream` module proxies at the TCP level and does not parse the HTTP request, so the `Proxy-Authorization` header reaches the upstream proxy unchanged.
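The pass-through behaviour can be illustrated with a small Python sketch of what a TCP-level (layer 4) forwarder does. It is not Nginx and not the configuration used here: it accepts a connection, picks a random upstream from a hypothetical `UPSTREAM_POOL`, and copies bytes in both directions without parsing them, so whatever credentials the service sends arrive at the upstream proxy untouched. `pipe`, `handle_client`, and `start_forwarder` are illustrative names.

```python
import asyncio
import random

# Hypothetical upstream pool of (host, port) pairs; not the real proxies.
UPSTREAM_POOL = [("203.0.113.10", 8080), ("203.0.113.11", 8080)]


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes one way, unchanged, until the sender closes its side."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def handle_client(client_reader, client_writer, pool) -> None:
    """Pick a random upstream per TCP connection and splice the two sockets together."""
    host, port = random.choice(pool)
    try:
        up_reader, up_writer = await asyncio.open_connection(host, port)
    except OSError:
        client_writer.close()  # upstream unreachable: drop the client connection
        return
    # Both directions run concurrently; nothing is parsed, so a
    # Proxy-Authorization header sent by the client reaches the upstream as-is.
    await asyncio.gather(
        pipe(client_reader, up_writer),
        pipe(up_reader, client_writer),
        return_exceptions=True,
    )


async def start_forwarder(pool, host: str = "127.0.0.1", port: int = 0) -> asyncio.AbstractServer:
    """Listen on host:port (0 picks any free port) and forward every connection."""
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, pool), host, port
    )
```

Inside a running event loop, `await start_forwarder(UPSTREAM_POOL, "0.0.0.0", 3128)` would listen on a hypothetical port 3128. Unlike Nginx, this sketch has no timeouts, health checks, or half-close handling.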

With this setup, the whole flow looks like this:

`My Service ---login/password---> Nginx Proxy ---same (redirected) login/password---> Random proxy from proxy_pool ---> Website`

As a result, the proxy servers "think" that all requests originate from the IP of my Nginx server, even though they were originally sent from instances on different VPSs. This way I was able to work around the proxy limitation.

A simple NGiNX configuration like this one should be enough:

That would forward requests received by Nginx to one of the proxies in the defined pool, depending on the load-balancing algorithm (round-robin unless another method is configured).
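To make the default behaviour concrete, here is a minimal Python sketch of plain, unweighted round-robin over a hypothetical pool. `RoundRobinPool` is an illustrative name, not an Nginx API, and Nginx's own implementation additionally supports weights.

```python
from __future__ import annotations

import itertools
import threading


class RoundRobinPool:
    """Hand out upstream proxies in a fixed rotating order (unweighted)."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("proxy pool is empty")
        self._cycle = itertools.cycle(proxies)
        self._lock = threading.Lock()  # guard the shared iterator across threads

    def next_proxy(self) -> str:
        with self._lock:
            return next(self._cycle)
```

With three proxies, six consecutive requests hit each proxy twice. Since every request now leaves from the Nginx host, each upstream proxy sees a single client IP regardless of which VPS originated the request.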

The backend services on the VPS instances should be configured to use the Nginx server as their proxy for outgoing requests.
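On the service side, that usually means pointing the HTTP client's proxy setting at the Nginx host. A minimal sketch with Python's standard `urllib`, assuming a hypothetical gateway at `nginx-gateway.internal:3128` and placeholder credentials; `build_gateway_opener` is an illustrative helper. `urllib` turns credentials embedded in the proxy URL into a `Proxy-Authorization` header on each proxied request.

```python
import urllib.request
from urllib.parse import quote


def build_gateway_opener(host: str, port: int, login: str, password: str):
    """Return (opener, proxy_url) routing both HTTP and HTTPS through the gateway."""
    # Percent-encode the credentials so characters like '@' or ':' do not break the URL.
    proxy_url = f"http://{quote(login, safe='')}:{quote(password, safe='')}@{host}:{port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler), proxy_url
```

For example, `opener, _ = build_gateway_opener("nginx-gateway.internal", 3128, "login", "password")` followed by `opener.open("https://example.com/", timeout=30)` would send that request through the gateway instead of directly to a pool proxy.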

That approach ensures that all requests from the VPS instances are funneled through the centralized Nginx server and then forwarded to the backend websites via the existing proxy pool, maintaining the constraint that connections to the same proxy server come from the same IP address (the Nginx server’s IP).

However, sticky sessions, typically implemented through the `ip_hash` directive in Nginx, as seen here, route requests from the same client IP to the same backend server consistently. It is a client-centric approach, ensuring that a client’s requests are always handled by the same server.

In your scenario, the "clients" connecting to the Nginx proxy are not individual end-users but rather the instances of your backend service running on various VPSs. These instances are making requests to the backend websites through the proxy pool.

You have specified that if multiple connections are open with the same proxy server, they should come from the same IP address. The challenge here is to ensure that all connections to a specific proxy server within your pool come from the same VPS instance, not just the same client IP.

Implementing sticky sessions in Nginx would ensure that all requests from a specific VPS instance always go to the same proxy server. However, it does not prevent other VPS instances from also being routed to that same proxy server, which might violate your constraint of having all connections to the same proxy server come from the same VPS instance.
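To see why, here is a rough Python model of `ip_hash`-style routing. Like Nginx's `ip_hash`, it keys on the first three octets of an IPv4 address (or the whole IPv6 address), but the SHA-256 hash and modulo step are stand-ins, not Nginx's actual hash function. `sticky_proxy` and the addresses are illustrative.

```python
from __future__ import annotations

import hashlib
import ipaddress


def sticky_proxy(client_ip: str, pool: list[str]) -> str:
    """Map a client IP to one pool entry deterministically (ip_hash-like model)."""
    addr = ipaddress.ip_address(client_ip)
    # Key on the first three octets for IPv4, the full address for IPv6.
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    digest = hashlib.sha256(key).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]
```

Each VPS always lands on the same proxy, but nothing stops two VPSs from hashing to the same one: with more VPSs than proxies it is guaranteed, and two VPSs in the same /24 always share a proxy, which is exactly the case the constraint forbids.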

In this specific situation, you might need a more tailored solution to coordinate among the VPS instances and determine which proxy server each should use, depending on the connection requirements. Coordination mechanisms like a shared database or a dedicated coordination service could be considered to ensure that all VPS instances adhere to your specific requirements regarding proxy usage.
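One possible shape for such a coordination mechanism is an exclusive lease per proxy: a VPS may only use a proxy that it already holds or that nobody holds. The sketch below keeps the leases in an in-memory dict guarded by a lock, standing in for the shared database or coordination service; `ProxyLeaseRegistry` and its methods are hypothetical. Leases are keyed by the VPS's public IP, so all instances on one VPS share the same proxy, which the constraint allows.

```python
from __future__ import annotations

import threading


class ProxyLeaseRegistry:
    """In-memory stand-in for a shared store recording which host owns each proxy."""

    def __init__(self, proxies: list[str]) -> None:
        self._owner: dict[str, str | None] = {p: None for p in proxies}
        self._lock = threading.Lock()

    def acquire(self, host_ip: str) -> str | None:
        """Return the proxy this host owns, else claim a free one; None if all are taken."""
        with self._lock:
            for proxy, owner in self._owner.items():
                if owner == host_ip:
                    return proxy
            for proxy, owner in self._owner.items():
                if owner is None:
                    self._owner[proxy] = host_ip
                    return proxy
            return None  # every proxy is leased to some other host

    def release(self, host_ip: str) -> None:
        """Give back any proxy leased to this host."""
        with self._lock:
            for proxy, owner in self._owner.items():
                if owner == host_ip:
                    self._owner[proxy] = None
```

Handing out one proxy per VPS keeps the invariant easy to check. A real deployment would also need lease expiry for crashed hosts and a policy for putting any remaining free proxies to use.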