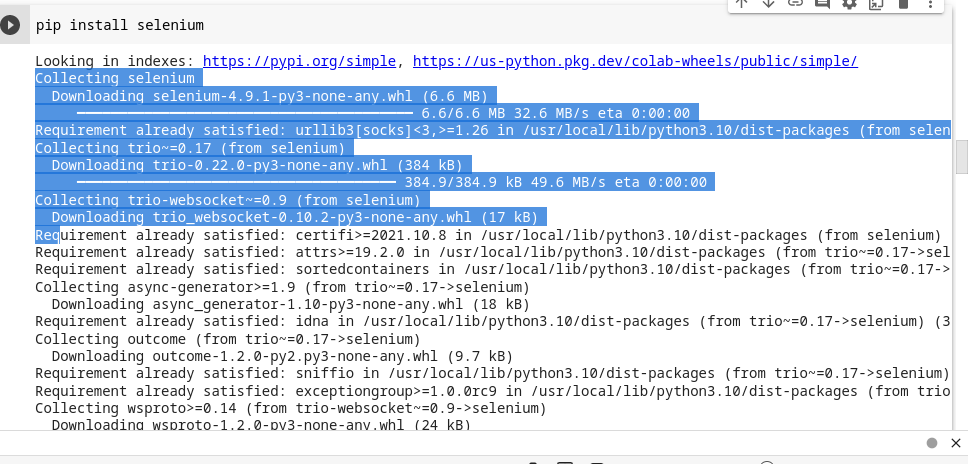

Update: what about Selenium support in Colab? I have checked this; see below!

Good day, dear experts. At the moment I am trying to figure out a simple method to obtain data from clutch.co.

Note: I work with Google Colab, and I suspect that some approaches are not supported on my Colab account, some of them due to Cloudflare issues.

But consider this attempt:

import requests
from bs4 import BeautifulSoup

url = 'https://clutch.co/it-services/msp'

# Fetch the listing page and parse the returned HTML
response = requests.get(url)
soup = BeautifulSoup(response.content, 'html.parser')

# Collect the website URL from every provider entry
links = []
for l in soup.find_all('li', class_='website-link website-link-a'):
    if l.a is not None:  # skip entries without a link
        links.append(l.a.get('href'))

print(links)

This does not work either: it returns an empty list. Do you have any idea how to solve the issue?
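A minimal sketch for narrowing down where the empty list comes from: keep the parsing in its own function and test it against a small hand-written HTML snippet. If the snippet yields links but the live page does not, the selector is probably fine and the fetched page is the problem. It swaps in Python's standard-library `html.parser` for BeautifulSoup so it runs without extra packages; the class names are the ones from the code above and are only assumed to match clutch.co's markup, and `extract_website_links` is a hypothetical helper name.

```python
from html.parser import HTMLParser

# Class names copied from the question; assumed (not verified) to match clutch.co's markup.
WANTED_CLASSES = {"website-link", "website-link-a"}


class WebsiteLinkParser(HTMLParser):
    """Collects href values of <a> tags nested inside <li class="website-link website-link-a">."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # > 0 while we are inside a matching <li>
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li":
            classes = set((attrs.get("class") or "").split())
            # Track nested <li> tags too, so the matching <li>'s end tag is paired correctly.
            if self.depth or WANTED_CLASSES <= classes:
                self.depth += 1
        elif tag == "a" and self.depth and attrs.get("href"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "li" and self.depth:
            self.depth -= 1


def extract_website_links(html):
    """Return the list of hrefs found in matching <li> entries of an HTML string."""
    parser = WebsiteLinkParser()
    parser.feed(html)
    parser.close()
    return parser.links
```

With the request code above, `extract_website_links(response.text)` returns `[]` when the response is Cloudflare's challenge page, because that page contains no such list items.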

Update: Hello, dear user510170. Many thanks for the answer and the Selenium solution. I tried it out in Google Colab and got the following result:

--------------------------------------------------------------------------
WebDriverException Traceback (most recent call last)
<ipython-input-2-4f37092106f4> in <cell line: 4>()
2 from selenium import webdriver
3
----> 4 driver = webdriver.Chrome()
5
6 url = 'https://clutch.co/it-services/msp'

5 frames

/usr/local/lib/python3.10/dist-packages/selenium/webdriver/remote/errorhandler.py in check_response(self, response)
243 alert_text = value["alert"].get("text")
244 raise exception_class(message, screen, stacktrace, alert_text) # type: ignore[call-arg] # mypy is not smart enough here
--> 245 raise exception_class(message, screen, stacktrace)

WebDriverException: Message: unknown error: cannot find Chrome binary
Stacktrace:
#0 0x56199267a4e3 <unknown>
#1 0x5619923a9c76 <unknown>
#2 0x5619923d0757 <unknown>
#3 0x5619923cf029 <unknown>
#4 0x56199240dccc <unknown>
#5 0x56199240d47f <unknown>
#6 0x561992404de3 <unknown>
#7 0x5619923da2dd <unknown>
#8 0x5619923db34e <unknown>
#9 0x56199263a3e4 <unknown>
#10 0x56199263e3d7 <unknown>
#11 0x561992648b20 <unknown>
#12 0x56199263f023 <unknown>
#13 0x56199260d1aa <unknown>
#14 0x5619926636b8 <unknown>
#15 0x561992663847 <unknown>
#16 0x561992673243 <unknown>
#17 0x7efc5583e609 start_thread

To me it seems to be related to line 4:

----> 4 driver = webdriver.Chrome()

Does this line need a minor correction?
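The message `cannot find Chrome binary` indicates that the line is syntactically fine; Selenium simply cannot locate a Chrome/Chromium executable on the machine. A small sketch to check for one before creating the driver, assuming the browser is installed under one of the usual Linux executable names (the candidate list is an assumption, and `find_chrome_binary` is a hypothetical helper):

```python
import shutil

# Executable names commonly used for Chrome/Chromium on Linux (an assumption; adjust as needed).
CANDIDATE_NAMES = ("google-chrome", "google-chrome-stable", "chromium", "chromium-browser")


def find_chrome_binary(names=CANDIDATE_NAMES):
    """Return the path of the first Chrome/Chromium executable found on PATH, or None."""
    for name in names:
        path = shutil.which(name)
        if path:
            return path
    return None


if __name__ == "__main__":
    binary = find_chrome_binary()
    if binary is None:
        print("No Chrome/Chromium on PATH; webdriver.Chrome() is likely to fail here.")
    else:
        print("Found browser at", binary)
```

If this prints nothing useful in Colab, installing a browser (not just a driver) is the missing step.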

Update: thanks to Tarun, I learned about this workaround:

https://medium.com/cubemail88/automatically-download-chromedriver-for-selenium-aaf2e3fd9d81

I applied it in Google Colab and tried to run the following:

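from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager

# webdriver_manager downloads a matching chromedriver (not the Chrome browser itself);
# recent Selenium 4 releases expect the driver path wrapped in a Service object
browser = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
browser.get("https://www.reddit.com/")
browser.quit()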

Finally, the requests/BeautifulSoup code from the beginning of this post should then be able to run in Colab.

Update: see my check in Colab below, and the question: is Colab generally capable of running Selenium?

I look forward to hearing from you.

Thanks to @user510170, who pointed me to another approach: "How can we use Selenium Webdriver in colab.research.google.com?"

Google Colab was recently upgraded, and since Ubuntu 20.04+ no longer distributes chromium-browser outside of a snap package, you can install a compatible version from the Debian buster repository:

Then you can run Selenium like this:

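from selenium import webdriver

chrome_options = webdriver.ChromeOptions()
chrome_options.add_argument('--headless')    # Colab has no display
chrome_options.add_argument('--no-sandbox')  # Chrome's sandbox usually fails when running as root in a container

# Recent Selenium 4 releases no longer accept the driver path as the first positional
# argument, and options.headless is deprecated ('--headless' above already covers it)
wd = webdriver.Chrome(options=chrome_options)
wd.get("https://www.website-url.com")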
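A sketch of the same Colab setup packaged as reusable functions, assuming `selenium` is installed and a Chrome/Chromium binary plus chromedriver are available (for example from the Debian repository step described above). `colab_chrome_arguments` and `make_driver` are hypothetical names; `--disable-dev-shm-usage` is an extra flag not in the snippet above, commonly added in containers where `/dev/shm` is small.

```python
def colab_chrome_arguments():
    """Command-line flags for running Chrome headless inside a container such as Colab."""
    return [
        "--headless",               # no visible window; Colab has no display
        "--no-sandbox",             # Chrome's sandbox usually cannot run as root in containers
        "--disable-dev-shm-usage",  # avoid crashes when /dev/shm is small
    ]


def make_driver():
    """Create a headless Chrome WebDriver (requires selenium and an installed Chrome/Chromium)."""
    # Imported lazily so this module can be loaded even where selenium is not installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in colab_chrome_arguments():
        options.add_argument(arg)
    # No Service given: Selenium looks up chromedriver on PATH
    # (recent versions can also fetch a driver via Selenium Manager).
    return webdriver.Chrome(options=options)
```

Usage: `driver = make_driver()`, then `driver.get("https://clutch.co/it-services/msp")`, read `driver.page_source`, and call `driver.quit()` when done. As the answers below point out, a working driver may still land on Cloudflare's challenge page.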

See also this thread: "How can we use Selenium Webdriver in colab.research.google.com?"

I still need to try this out on Colab.

2 Answers

If you do print(response.content), you will see the following: "Enable JavaScript and cookies to continue". Without JavaScript, you don't get access to the full content. Here is a working solution based on Selenium. Result:
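The observation in this answer can be turned into a quick check, so a script reports a blocked request instead of silently printing an empty list. This is a heuristic sketch: the marker strings are assumptions (the first is the text quoted in this answer, the second is the title Cloudflare's interstitial page commonly shows) and may change at any time; `looks_like_cloudflare_challenge` is a hypothetical helper.

```python
# Phrases assumed to appear on Cloudflare's interstitial page; other variants may exist.
CHALLENGE_MARKERS = (
    "Enable JavaScript and cookies to continue",
    "Just a moment...",
)


def looks_like_cloudflare_challenge(status_code, html):
    """Heuristic: True if a response looks like a Cloudflare challenge rather than real content."""
    # Challenge pages are often served with 403 or 503 and mention Cloudflare.
    if status_code in (403, 503) and "cloudflare" in html.lower():
        return True
    return any(marker in html for marker in CHALLENGE_MARKERS)
```

With requests, the call would be `looks_like_cloudflare_challenge(response.status_code, response.text)`.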

TL;DR: the big guns of Selenium can't shoot the Cloudflare sheriff. Here is the Colab link with what's below.

All right, here's a working Selenium setup on Google Colab that proves my point in the comment: even if you get it running, you still have to deal with a Cloudflare challenge. Do the following:

You should see this:

As you can see, running Selenium doesn't change much. So, my question to you is: why do you want to stick to Colab so badly?

Because running slightly modified code locally should open a browser window (this time Edge) and return this:

On the other hand, locally you don't even need Selenium if you have cloudscraper. For example, this should return:

P.S. The source for the Debian magic on Colab is here.
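The cloudscraper approach mentioned in the second answer might look roughly like this. It is a minimal sketch assuming the third-party `cloudscraper` package is installed (`pip install cloudscraper`); `create_scraper()` returns a requests-compatible session that attempts to get past Cloudflare's anti-bot page. Whether that succeeds depends on the site's Cloudflare configuration and possibly on where the request comes from (the answer reports it working locally, not in Colab), so treat it as something to try rather than a guaranteed fix. `fetch_html` is a hypothetical helper.

```python
def fetch_html(url, timeout=30):
    """Fetch a page through cloudscraper and return its HTML text."""
    import cloudscraper  # third-party package, imported lazily: pip install cloudscraper

    scraper = cloudscraper.create_scraper()  # behaves like a requests.Session
    response = scraper.get(url, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of returning a challenge page silently
    return response.text
```

Usage: `html = fetch_html("https://clutch.co/it-services/msp")`, then pass `html` to BeautifulSoup exactly as in the question's code.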