I’m trying to run and deploy DALL-E Playground on my local machine using an AMD GPU. I’m on Windows 11 with a WSL instance running.

System OS: Windows 11 Pro - Version 21H1 - OS Build 22000.675

WSL Version: WSL 2

WSL Kernel: 5.10.16.3-microsoft-standard-WSL2

WSL OS: Ubuntu 20.04 LTS

GPU: AMD Radeon RX 6600 XT

CPU: AMD Ryzen 5 3600XT (32GB ram)

I have been able to deploy the backend and frontend successfully but it runs off the CPU.

It gives me this warning:

--> Starting DALL-E Server. This might take up to two minutes.

2022-06-12 01:16:33.012306: I external/org_tensorflow/tensorflow/core/tpu/tpu_initializer_helper.cc:259] Libtpu path is: libtpu.so

2022-06-12 01:16:37.581440: I external/org_tensorflow/tensorflow/compiler/xla/service/service.cc:174] XLA service 0x5a4e760 initialized for platform Interpreter (this does not guarantee that XLA will be used). Devices:

2022-06-12 01:16:37.581474: I external/org_tensorflow/tensorflow/compiler/xla/service/service.cc:182] StreamExecutor device (0): Interpreter, <undefined>

2022-06-12 01:16:37.587860: I external/org_tensorflow/tensorflow/compiler/xla/pjrt/tfrt_cpu_pjrt_client.cc:176] TfrtCpuClient created.

2022-06-12 01:16:37.588478: I external/org_tensorflow/tensorflow/stream_executor/tpu/tpu_platform_interface.cc:74] No TPU platform found.

WARNING:absl:No GPU/TPU found, falling back to CPU. (Set TF_CPP_MIN_LOG_LEVEL=0 and rerun for more info.)
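The `No GPU/TPU found` warning, together with the `xla`/`pjrt` paths in the log, appears to come from JAX rather than from PyTorch. A minimal sketch for listing the devices JAX itself can see, assuming it is run in the same Python environment as the backend (the helper name `describe_jax_backend` is mine, not part of the project):

```python
def describe_jax_backend():
    """Return (backend_name, device_descriptions), or None if JAX is not installed."""
    try:
        import jax
    except ImportError:
        return None
    # default_backend() is "cpu", "gpu" or "tpu"; devices() lists what JAX can use.
    return jax.default_backend(), [str(device) for device in jax.devices()]


if __name__ == "__main__":
    info = describe_jax_backend()
    if info is None:
        print("JAX is not installed in this environment")
    else:
        backend, devices = info
        print(f"JAX backend: {backend}")
        for device in devices:
            print(f"  {device}")
```

If this reports only CPU devices, the backend has no GPU to use, regardless of what other libraries in the environment can see.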

I have been trying to make my GPU accessible to my WSL instance, but I can’t work out what I’m doing wrong. To enable GPU support, I ran the following install command from the PyTorch website:

pip3 install torch torchvision --extra-index-url https://download.pytorch.org/whl/rocm4.5.2

I confirmed that PyTorch is installed correctly by running the sample PyTorch code supplied on their website.
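A successful import only shows that the package is installed, not that it can reach a GPU. A small sketch for checking that separately, assuming a ROCm build of PyTorch (ROCm builds report the GPU through the `torch.cuda` API and set `torch.version.hip`); the helper name `describe_torch_gpu` is hypothetical:

```python
def describe_torch_gpu():
    """Summarize PyTorch's view of the GPU, or return None if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return {
        "torch_version": torch.__version__,
        # Set on ROCm builds, None on CUDA or CPU-only builds.
        "hip_version": getattr(torch.version, "hip", None),
        # Set on CUDA builds, None otherwise.
        "cuda_version": getattr(torch.version, "cuda", None),
        # True only if a usable GPU was actually found at runtime.
        "gpu_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(describe_torch_gpu())
```

Note that this only describes PyTorch. Installing a ROCm build of PyTorch does not, as far as I know, change which devices other frameworks in the same environment can use.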

After doing some digging I realised that the rock-dkms package also needed to be installed, so I followed the advice on this website and installed it successfully, after a lot of issues.

When I try to check ROCm for my GPU, this is what comes up:

$ /opt/rocm/bin/rocminfo

ROCk module is NOT loaded, possibly no GPU devices

$ /opt/rocm/opencl/bin/clinfo

Number of platforms: 1

Platform Profile: FULL_PROFILE

Platform Version: OpenCL 2.1 AMD-APP (3423.0)

Platform Name: AMD Accelerated Parallel Processing

Platform Vendor: Advanced Micro Devices, Inc.

Platform Extensions: cl_khr_icd cl_amd_event_callback

Platform Name: AMD Accelerated Parallel Processing

Number of devices: 0

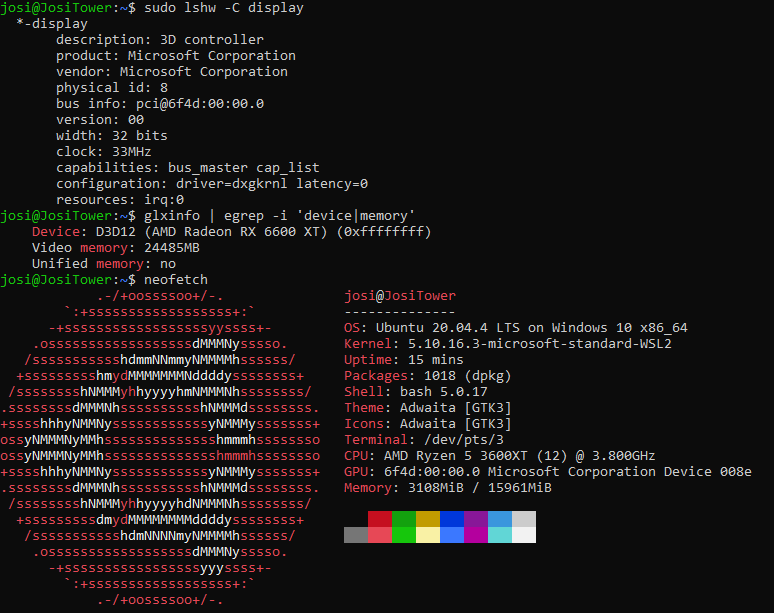

Based on this output, there definitely seems to be some sort of AMD-compatible driver available, and the attached screenshot shows what comes up when I query for the GPU. At this stage I don’t know whether WSL can see or access my GPU: glxinfo can identify it (even if it gets my VRAM wrong), but nothing else can.

ANY advice would be very helpful. I know this issue may not be project-specific, but I have tried to include as much information as possible about what I am doing, for the best chance of figuring this out.
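One way to narrow down what WSL can actually see is to check which GPU device nodes exist. As a rough sketch, assuming the usual layout: WSL2 exposes the GPU through `/dev/dxg` (which the D3D12 Mesa driver uses), while ROCm tools such as `rocminfo` expect the `amdgpu` kernel driver and its `/dev/kfd` node. The descriptions in the table below are my own summary, not output from any tool:

```python
import os

# Device nodes commonly used to tell the graphics and compute paths apart.
DEVICE_NODES = {
    "/dev/dxg": "WSL2 paravirtualized GPU (used by the D3D12 Mesa driver)",
    "/dev/kfd": "AMD compute interface (expected by rocminfo / ROCm)",
    "/dev/dri": "DRM nodes (native Linux graphics drivers)",
}


def check_device_nodes(nodes=DEVICE_NODES, exists=os.path.exists):
    """Return {path: bool} saying which device nodes are present."""
    return {path: exists(path) for path in nodes}


if __name__ == "__main__":
    for path, present in check_device_nodes().items():
        status = "present" if present else "missing"
        print(f"{path:10} {status:8} {DEVICE_NODES[path]}")
```

If `/dev/dxg` is present but `/dev/kfd` is missing, that would be consistent with glxinfo seeing the GPU while `rocminfo` reports that the ROCk module is not loaded.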

Installed AMD GPU libs:

$ sudo apt list|grep -i gpu|grep installed

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

libdrm-amdgpu1/focal-updates,focal-security,now 2.4.107-8ubuntu1~20.04.2 amd64 [installed]

libosdgpu3.4.0/focal,now 3.4.0-6build1 amd64 [installed,automatic]

Installed ROCm packages:

$ apt list --installed | grep -i roc

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

hsa-rocr-dev/Ubuntu,now 1.5.0.50100-36 amd64 [installed,automatic]

hsa-rocr/Ubuntu,now 1.5.0.50100-36 amd64 [installed,automatic]

hsakmt-roct-dev/Ubuntu,now 20220128.1.7.50100-36 amd64 [installed,automatic]

hsakmt-roct/Ubuntu,now 20210520.3.071986.40301-59 amd64 [installed,automatic]

libopencv-imgproc4.2/focal,now 4.2.0+dfsg-5 amd64 [installed,automatic]

libpostproc55/focal-updates,focal-security,now 7:4.2.7-0ubuntu0.1 amd64 [installed,automatic]

libprocps8/focal-updates,now 2:3.3.16-1ubuntu2.3 amd64 [installed,automatic]

procps/focal-updates,now 2:3.3.16-1ubuntu2.3 amd64 [installed,automatic]

python3-ptyprocess/focal,now 0.6.0-1ubuntu1 all [installed,automatic]

rock-dkms-firmware/Ubuntu,now 1:4.3-59 all [installed,automatic]

rock-dkms/Ubuntu,now 1:4.3-59 all [installed,automatic]

rocm-clang-ocl/Ubuntu,now 0.5.0.50100-36 amd64 [installed,automatic]

rocm-cmake/Ubuntu,now 0.7.2.50100-36 amd64 [installed,automatic]

rocm-core/Ubuntu,now 5.1.0.50100-36 amd64 [installed,automatic]

rocm-dbgapi/Ubuntu,now 0.64.0.50100-36 amd64 [installed,automatic]

rocm-debug-agent/Ubuntu,now 2.0.3.50100-36 amd64 [installed,automatic]

rocm-dev/Ubuntu,now 5.1.0.50100-36 amd64 [installed,automatic]

rocm-device-libs/Ubuntu,now 1.0.0.50100-36 amd64 [installed,automatic]

rocm-dkms/Ubuntu,now 5.1.0.50100-36 amd64 [installed]

rocm-gdb/Ubuntu,now 11.2.50100-36 amd64 [installed,automatic]

rocm-llvm/Ubuntu,now 14.0.0.22114.50100-36 amd64 [installed,automatic]

rocm-ocl-icd/Ubuntu,now 2.0.0.50100-36 amd64 [installed,automatic]

rocm-opencl-dev/Ubuntu,now 2.0.0.50100-36 amd64 [installed,automatic]

rocm-opencl/Ubuntu,now 2.0.0.50100-36 amd64 [installed,automatic]

rocm-smi-lib/Ubuntu,now 5.0.0.50100-36 amd64 [installed,automatic]

rocm-utils/Ubuntu,now 5.1.0.50100-36 amd64 [installed,automatic]

rocminfo/Ubuntu,now 1.0.0.50100-36 amd64 [installed,automatic]

rocprofiler-dev/Ubuntu,now 1.0.0.50100-36 amd64 [installed,automatic]

roctracer-dev/Ubuntu,now 1.0.0.50100-36 amd64 [installed,automatic]
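The listing above mixes packages that look like they come from two ROCm releases: most carry a `50100` tag (which appears to correspond to ROCm 5.1.0), while `rock-dkms`, `rock-dkms-firmware` and `hsakmt-roct` are at 4.3. Whether that mismatch matters under WSL is not established here, but it is easy to spot with a short script. A sketch that groups `apt list` lines by that tag, using four lines copied from the listing as sample input (the function name and the `untagged` label are mine):

```python
import re
from collections import defaultdict

SAMPLE = """\
hsakmt-roct-dev/Ubuntu,now 20220128.1.7.50100-36 amd64 [installed,automatic]
hsakmt-roct/Ubuntu,now 20210520.3.071986.40301-59 amd64 [installed,automatic]
rock-dkms/Ubuntu,now 1:4.3-59 all [installed,automatic]
rocm-core/Ubuntu,now 5.1.0.50100-36 amd64 [installed,automatic]
"""

# "name/suite,now version arch [state]" as printed by `apt list --installed`.
LINE_RE = re.compile(r"^(?P<name>[^/\s]+)/\S+\s+(?P<version>\S+)")
# Five-digit release tag just before the build number, e.g. ".50100-36".
TAG_RE = re.compile(r"\.(\d{5})-\d+$")


def group_by_release(apt_output):
    """Map each release tag (or 'untagged') to a sorted list of package names."""
    groups = defaultdict(list)
    for line in apt_output.splitlines():
        match = LINE_RE.match(line)
        if not match:
            continue  # skips blank lines and the apt CLI warning
        tag = TAG_RE.search(match["version"])
        groups[tag.group(1) if tag else "untagged"].append(match["name"])
    return {tag: sorted(names) for tag, names in groups.items()}


if __name__ == "__main__":
    for tag, names in sorted(group_by_release(SAMPLE).items()):
        print(f"{tag}: {', '.join(names)}")
```

To run it against the real system, the output of `apt list --installed` can be passed in instead of `SAMPLE`.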

EDIT:

Just ran the following and got a little more information about the current state of my system.

$ glxinfo -B

name of display: :0

display: :0 screen: 0

direct rendering: Yes

Extended renderer info (GLX_MESA_query_renderer):

Vendor: Microsoft Corporation (0xffffffff)

Device: D3D12 (AMD Radeon RX 6600 XT) (0xffffffff)

Version: 22.2.0

Accelerated: yes

Video memory: 24485MB

Unified memory: no

Preferred profile: core (0x1)

Max core profile version: 4.2

Max compat profile version: 4.2

Max GLES1 profile version: 1.1

Max GLES[23] profile version: 3.1

OpenGL vendor string: Microsoft Corporation

OpenGL renderer string: D3D12 (AMD Radeon RX 6600 XT)

OpenGL core profile version string: 4.2 (Core Profile) Mesa 22.2.0-devel (git-cbcdcc4 2022-06-11 focal-oibaf-ppa)

OpenGL core profile shading language version string: 4.20

OpenGL core profile context flags: (none)

OpenGL core profile profile mask: core profile

OpenGL version string: 4.2 (Compatibility Profile) Mesa 22.2.0-devel (git-cbcdcc4 2022-06-11 focal-oibaf-ppa)

OpenGL shading language version string: 4.20

OpenGL context flags: (none)

OpenGL profile mask: compatibility profile

OpenGL ES profile version string: OpenGL ES 3.1 Mesa 22.2.0-devel (git-cbcdcc4 2022-06-11 focal-oibaf-ppa)

OpenGL ES profile shading language version string: OpenGL ES GLSL ES 3.10

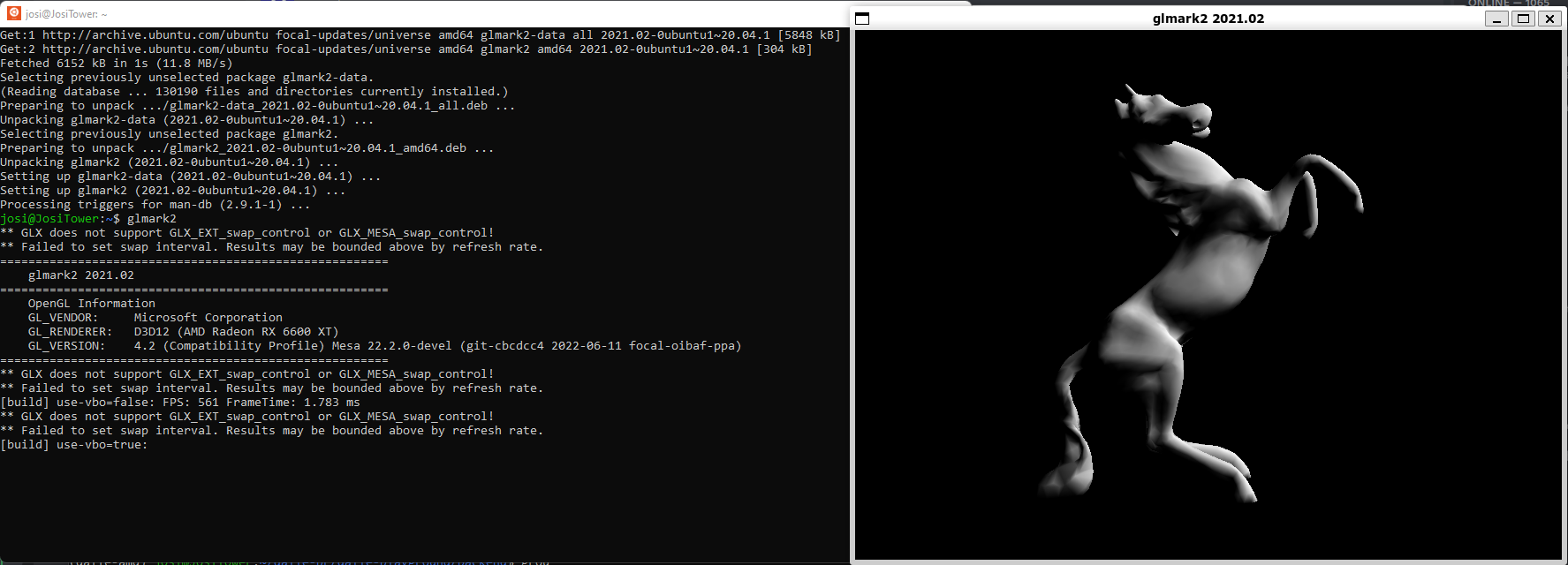

Adding to the confusion even more, glmark2 seems to be able to use my GPU fine. Is this possibly an issue with the DALL-E program and not WSL?

2 Answers

The issue eventually came down to the fact that AMD GPUs don't work with CUDA, and the DALL-E Playground project only supports CUDA. Basically, to run DALL-E Playground on a GPU you must be using an Nvidia GPU. Alternatively, you can run the project on your CPU.

I hope this covers any questions anyone may have.

Looks like this is a known bug at the moment with WSL / Torch:

https://github.com/pytorch/pytorch/issues/73487

From what I can see (and tested):

That seems to be all you can do. I would suggest reinstalling WSL to see if that helps, then setting everything up again exactly as per the docs (as that is what Microsoft is most likely to test). If this doesn’t help, my final suggestion would be to dual boot, if that is an option for you.

(Note that the Nvidia part of the quote does not apply to you; however, CUDA should still be available to you.)